At Meroxa, we recently adopted ArgoCD as our go-to continuous delivery (CD) tool, allowing us to easily leverage the GitOps framework to deploy Kubernetes resources and services. We utilize ArgoCD to simplify the management of tenant instances of our platform, deployed within a Kubernetes cluster. Before we expand further, here’s a quick overview of the technologies used in this blog post:

ArgoCD Setup at Meroxa

ArgoCD's primary role in our platform is to deploy and manage thousands of distinct, “mini” Conduit platform instances, which we call 'tenants'. This setup comprises:

- Supervisor Application: This is a single ArgoCD application that creates and manages Meroxa tenants. It creates a Kubernetes namespace and an ArgoCD Application for each tenant, supports deployment into private VPCs, and keeps deployments cloud-agnostic across AWS, Azure, or Google Cloud. It can also deploy the Conduit platform in air-gapped environments and deploy tenants to different CPU architectures.

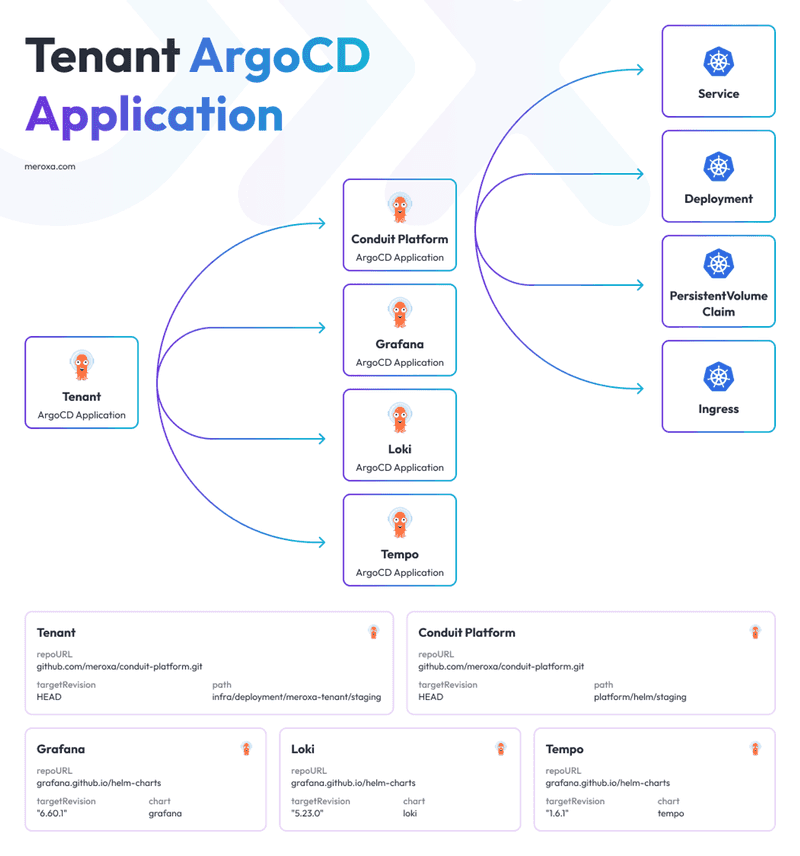

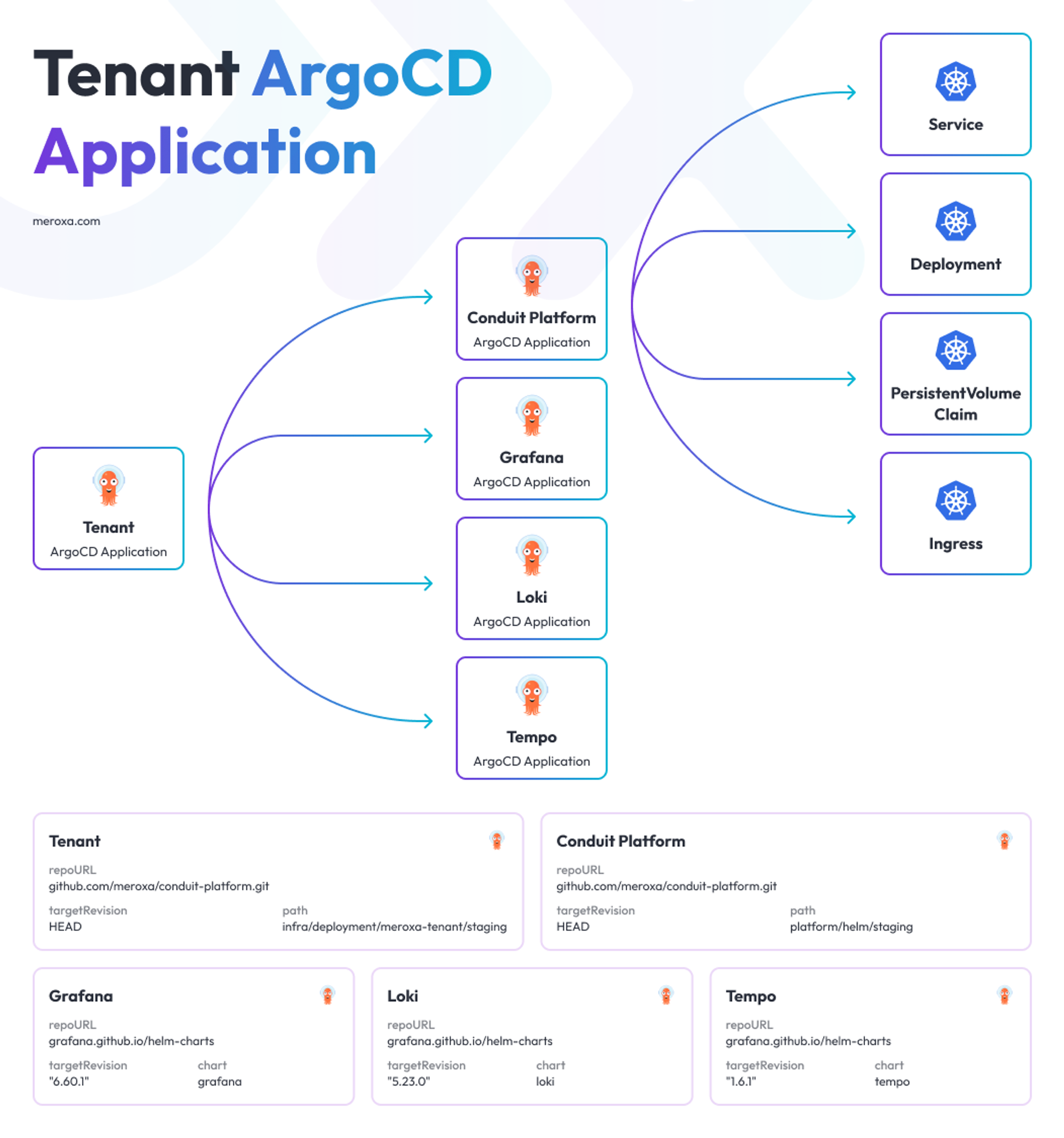

- Tenant ArgoCD Applications: For each tenant on the platform, an ArgoCD application is created. The tenant ArgoCD application points to a Helm chart containing all components of the tenant platform instance, including the Conduit platform, which performs Meroxa’s core stream processing. Additionally, it encapsulates ArgoCD Applications for supporting services in the tenant namespace, such as Grafana and Loki.

Diagram of a Tenant ArgoCD Application. Note that there is a hierarchy, where the Conduit Platform is a child App of the Tenant ArgoCD App.

This encapsulation simplifies managing all of these Kubernetes resources (Deployments, Pods, Ingress, etc.). All the setup application needs to do is invoke the Kubernetes API once to create the Tenant ArgoCD Application, which ArgoCD provides as a Kubernetes Custom Resource.

The tenant ArgoCD Applications point their repo at the GitHub repository hosting the Conduit Platform code. The path points to a directory containing the Helm chart for the specific environment (we’ll dig into that nuance further below). Lastly, `targetRevision` points to `HEAD`, so the Helm chart from the latest commit is always the one that gets deployed. ArgoCD then syncs automatically.

Once the synchronization process runs, the ArgoCD controller is responsible for reconciling the actual state of the Kubernetes resources (including the Conduit Platform’s Pod, Deployment, and Ingress) with the desired state from the Helm chart. For more information on the sync process in ArgoCD, check out the documentation here.
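As a rough illustration of the fields described above, here is a minimal Python sketch that builds the body of a tenant ArgoCD Application. The repository URL, the `tenant-<name>` naming scheme, the `argocd` namespace, the directory layout, and the sync policy are all assumptions made for the example, not details of Meroxa's actual setup.

```python
from typing import Any, Dict

# Hypothetical repository URL; the real one is not given in this post.
REPO_URL = "https://github.com/example-org/conduit-platform.git"


def build_tenant_application(tenant: str, environment: str) -> Dict[str, Any]:
    """Return the body of an ArgoCD Application custom resource for one tenant."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {
            "name": f"tenant-{tenant}",  # hypothetical naming scheme
            "namespace": "argocd",  # assumed namespace where ArgoCD runs
        },
        "spec": {
            "project": "default",
            "source": {
                "repoURL": REPO_URL,
                # Assumed layout: one chart directory per environment.
                "path": environment,
                # Track the latest commit on the default branch.
                "targetRevision": "HEAD",
                "helm": {"valueFiles": ["values-images.yaml"]},
            },
            "destination": {
                "server": "https://kubernetes.default.svc",
                "namespace": f"tenant-{tenant}",
            },
            # Assumed policy: sync automatically and correct drift.
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }
```

A dictionary like this could then be submitted to the Kubernetes API in a single call as a custom object (group `argoproj.io`, version `v1alpha1`, plural `applications`), which matches the "invoke the Kubernetes API once" step described earlier.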

PR → Staging → Production

Our deployment workflow is designed to keep the deployment process reliable and efficient. Here’s how we navigate from staging to production using ArgoCD:

Staging CI Workflow

The journey begins when a PR is merged into the main branch, triggering our staging CI GitHub workflow. This performs the following:

Example values-images.yaml file, containing a Docker image tag for the Conduit platform:

imageTag: av8344892b23h281h5c50e863a93c2b231hd8ce3

Production CI Workflow

The production workflow mostly follows the staging process, but with two key differences:

- Promote The Staging Chart + Docker Image: Instead of building a new image for production, the production workflow promotes the changes from the `staging/` directory to the `production/` directory.

- Manual PR Approval for Production: Unlike staging, the PR for production deployment requires manual approval. This step ensures an extra layer of scrutiny and control before changes impact our production environment.
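The promotion step can be pictured as a plain directory copy. The sketch below is only an approximation under stated assumptions: it assumes `staging/` and `production/` sit at the repository root, and the function name `promote_staging_to_production` is hypothetical. The actual workflow would also open a PR with the result, which is not shown here.

```python
import shutil
from pathlib import Path


def promote_staging_to_production(repo_root: Path) -> None:
    """Copy the staging chart and values files over the production directory."""
    staging = repo_root / "staging"
    production = repo_root / "production"
    if not staging.is_dir():
        raise FileNotFoundError(f"missing staging directory: {staging}")
    # Overwrites files that exist in both directories (Python 3.8+).
    # Files deleted from staging are NOT removed from production here.
    shutil.copytree(staging, production, dirs_exist_ok=True)
```

Because the image tag lives in `values-images.yaml` inside the copied directory, production ends up referencing the image that was already built and deployed for staging.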

gitGraph LR:

checkout main

commit

commit

branch samir/add-new-migration

commit id: "samir adds new migration"

checkout main

merge samir/add-new-migration id:"1j38h72"

branch automated-value-files-updates-1j38h72

commit id: "[Automated] update staging directory values files to '1j38h72'"

checkout main

merge automated-value-files-updates-1j38h72 id:"j81h78t"

branch automated-value-files-updates-prod-1j38h72

commit id: "[Automated] update production directory values files to '1j38h72'"

checkout main

merge automated-value-files-updates-prod-1j38h72

Challenges and Learnings

Our introduction to ArgoCD came with a bit of a learning curve. Here are some challenges we faced and the insights we gained:

- Managing Image Tags and PRs: Initially, pinning image tags with environment-specific deployments was tricky. We learned to simply point `targetRevision` to `HEAD`, and duplicate charts per environment in different directories. Here are the alternatives we avoided:
  - At first, we were inclined to point `targetRevision`s directly to specific commits, but this quickly proved problematic, as changes to Helm charts and values could bypass staging and land directly in production.
  - We also explored tracking separate `staging` and `production` branches, but decided against it to reduce complexity and potential conflicts.

- Automated PR Management: Automated PRs, especially for production, can create a lot of noise and can quickly pile up if the team is not paying attention to them. This is a drawback of the approach, but we decided it was worth the tradeoff for the deployment simplicity.

- File Protection: We implemented PR checks to protect `values-images.yaml` files and Helm charts in staging and production directories from manual alterations, to preserve the integrity of the deployment process.
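A file-protection check of this kind might look roughly like the following sketch. It is a guess at the shape of such a check, not the one Meroxa runs: it assumes automated PRs can be recognized by a branch-name prefix (taken from the branch names in the git graph), and a real check would likely need a stronger signal, such as the PR author, since anyone can choose a branch name.

```python
from pathlib import PurePosixPath
from typing import Iterable, List

# Directories that only the automated workflows should modify.
PROTECTED_DIRS = ("staging", "production")
# Assumed convention, based on the branch names shown in the git graph.
AUTOMATION_BRANCH_PREFIX = "automated-value-files-updates-"


def protected_files_changed(branch: str, changed_files: Iterable[str]) -> List[str]:
    """Return protected files that a non-automated PR tries to change."""
    if branch.startswith(AUTOMATION_BRANCH_PREFIX):
        return []  # automated PRs are allowed to touch protected files
    violations = []
    for path in changed_files:
        parts = PurePosixPath(path).parts
        # Only the top-level directory decides whether a file is protected.
        if parts and parts[0] in PROTECTED_DIRS:
            violations.append(path)
    return violations
```

A CI job could fail the PR whenever the returned list is non-empty and print the offending paths.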

Future Improvements

Looking forward, we aim to improve our ArgoCD setup:

- Slack Notifications: To enhance our monitoring, we're considering integrating Slack notifications to alert the team when syncs in Staging and Production are complete.

- Multi-Cluster Capabilities: Utilizing ArgoCD's multi-cluster feature could be useful, especially for managing multiple production clusters in different regions.

- Scaling ArgoCD Controllers: To improve reliability, we’re working on scaling up the number of ArgoCD controllers, in case of pod failures.

ArgoCD has been a game-changer for us at Meroxa. It has streamlined our deployment process, making it more efficient and scalable. We're excited to dive deeper into ArgoCD and fully leverage its capabilities. Have you thought about deploying applications with ArgoCD? Are you working on stream processing or data engineering problems? Join our community and chat with us.