Conduit Makes MongoDB CDC 52% Faster Than Kafka Connect

In head-to-head testing, Conduit streamed data from MongoDB 52% faster than Kafka Connect.

4 Apr 2025

AI projects often fail due to data bottlenecks, infrastructure complexity, and governance challenges. This blog explores how Meroxa helps tech leaders overcome these issues with real-time data movement, automation, and security, ensuring scalable, high-performance AI deployments.

By Dion Keeton

20 Feb 2025

The latest Conduit 0.13 release brings significant upgrades, focusing on developer experience, automation, and performance optimization. Key highlights include automated documentation synchronization for connectors, a powerful new CLI for seamless pipeline and connector management, and a 5x improvement in output processing speed, reducing latency and boosting efficiency. Notably, this version also deprecates the built-in UI, reinforcing Conduit’s commitment to a CLI-driven workflow. With expanded CLI capabilities and automated documentation tools, developers can now manage data pipelines more efficiently than ever. Upgrade today to leverage these new features and maximize your real-time data processing capabilities! 🚀

By Dion Keeton

7 Feb 2025

No More Stale Models: Master Continuous MLOps with Meroxa & Databricks

Keep your machine learning models fresh and accurate with real-time, automated MLOps. This blog explores how Meroxa’s Conduit Platform and Databricks enable continuous model retraining, eliminating stale predictions and manual updates. Learn how to streamline data movement, real-time feature engineering, and automated ML workflows for peak performance. Whether for fraud detection, predictive maintenance, or personalized AI, discover how to scale MLOps efficiently. Stay ahead with always-on machine learning: no more stale models!

5 Feb 2025

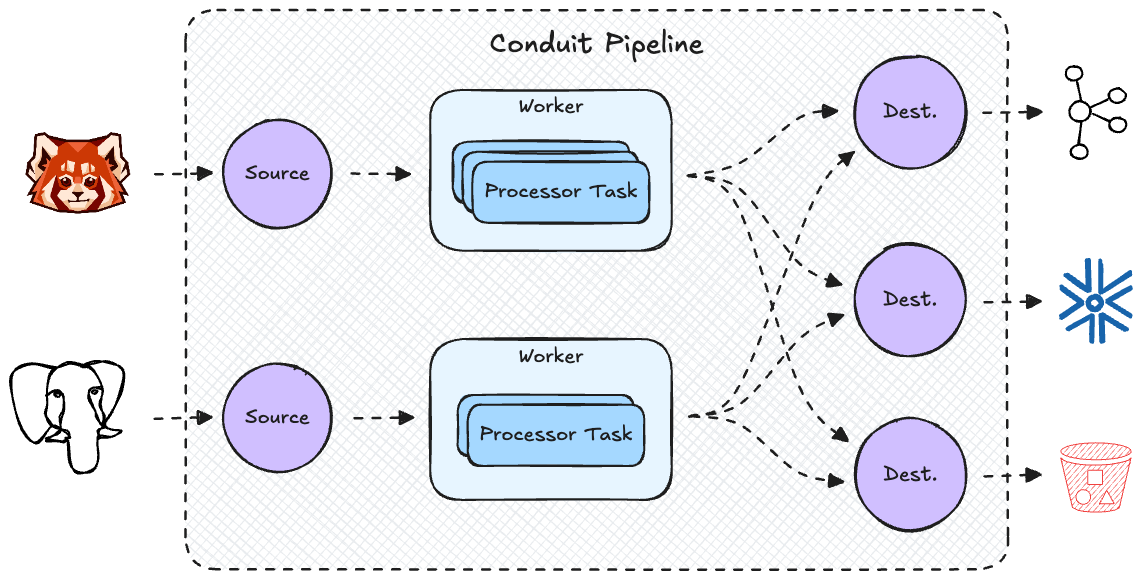

In this blog, we dive into how Conduit transitioned from a flexible but slower architecture to a high-performance streaming engine. We explore the limitations of the old DAG-based design, its impact on ordering guarantees and backpressure, and why switching to a Worker-Task Model drastically improved throughput and efficiency. With real-world performance benchmarks showing a 5x increase in message throughput, this blog is a must-read for developers and data engineers looking to optimize their data pipelines. Learn how Conduit is setting a new standard for real-time data movement and how you can leverage these improvements today! 🚀

By Lovro Mažgon

29 Jan 2025

Traditional data warehouses, long the cornerstone of analytics, are increasingly ill-equipped to meet the demands of today’s real-time, dynamic data needs. Enter the Lakehouse: an innovative architecture that blends the flexibility of data lakes with the robust querying capabilities of warehouses, seamlessly handling structured and unstructured data for real-time analytics. This blog explores the shortcomings of legacy systems, the transformative benefits of Lakehouses, and how Meroxa simplifies the journey with its intuitive tools, real-time data pipelines, and cloud-native scalability. From leveraging cutting-edge technologies like Apache Iceberg and Delta Lake to providing expert migration support, Meroxa empowers organizations to unlock faster, more flexible insights without the complexity or high costs of traditional solutions.

20 Dec 2024

We’re excited to introduce the latest update to the **Conduit Operator**, now with built-in **schema registry support**. This new feature allows seamless data encoding and decoding, improving data compatibility across your pipelines. Whether you're managing multiple Conduit instances or scaling your data operations, schema registry integration ensures a smoother, more reliable experience for handling complex data flows.

25 Oct 2024

ClickHouse has established itself as a prominent database for analytical applications due to its technical advantages over competitors like Druid, Pinot, and StarRocks.

By Tanveet Gill

26 Aug 2024

Ingest, transform & stream data from your Oracle Database with Meroxa in a few lines of code.

By Sara Menefee

15 Mar 2023

Real-time data sync, transformation & migration from PostgreSQL to MongoDB using Meroxa with Change Data Capture (CDC).

By Tanveet Gill

13 Dec 2022