As a CTO in 2025, you're facing a perfect storm of data challenges. Your board is asking about AI strategy, your teams are drowning in data silos, and everyone wants real-time insights yesterday. Meanwhile, you're trying to balance innovation with stability, cost with capability, and speed with security.

Let's cut through the noise and talk about what really matters in building a data architecture that won't be obsolete by the time you finish implementing it.

The Shifting Landscape

Remember when data architecture was simpler? When batch processing was enough, when "real-time" meant daily updates, and when AI was something you'd read about in research papers? Those days are gone, and they're not coming back.

Today's landscape demands architectures that can:

- Process data in genuine real-time (not "near" real-time)

- Support AI/ML workflows natively

- Scale elastically without breaking the bank

- Adapt to new data sources and types without requiring rebuilds

And tomorrow? The demands will only increase.

The Three Pillars of Future-Proof Architecture

After working with hundreds of CTOs and enterprise architects, we've identified three core principles that separate architectures that scale and adapt from those that become tomorrow's technical debt.

1. Real-Time First, Not Real-Time Later

Here's an uncomfortable truth: if you're not building for real-time data now, you're already behind. The "we'll add real-time later" approach is the technical equivalent of planning to dig a basement after building your house.

Real-time isn't just about speed – it's about architectural flexibility. When your foundation supports real-time data flows, batch processing becomes a special case of your real-time capabilities (a batch is simply a bounded stream run through the same pipeline), not the other way around.

What this means in practice:

- Change Data Capture (CDC) should be your default approach, not an afterthought

- Your data pipeline should handle streaming data natively

- Event-driven architectures should be your foundation, not an add-on

- Latency should be measured in milliseconds, not minutes
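To make the "batch is a special case" idea concrete, here is a minimal Python sketch of applying change events to a downstream replica. The `ChangeEvent` shape and the function names are assumptions made for illustration, not the output format of any particular CDC connector.

```python
from dataclasses import dataclass
from typing import Any, Dict, Iterable, Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One row-level change (hypothetical shape, for illustration only)."""
    op: str                                  # "insert", "update", or "delete"
    key: str                                 # primary key of the affected row
    after: Optional[Dict[str, Any]] = None   # row state after the change; None for deletes


def apply_change(table: Dict[str, Dict[str, Any]], event: ChangeEvent) -> None:
    """Apply a single change event to an in-memory replica of the source table."""
    if event.op in ("insert", "update"):
        if event.after is None:
            raise ValueError(f"{event.op} event for key {event.key!r} has no row data")
        table[event.key] = dict(event.after)
    elif event.op == "delete":
        table.pop(event.key, None)  # idempotent: deleting a missing row is a no-op
    else:
        raise ValueError(f"unknown operation: {event.op!r}")


def apply_all(table: Dict[str, Dict[str, Any]], events: Iterable[ChangeEvent]) -> None:
    """A batch load is a bounded stream pushed through the same handler."""
    for event in events:
        apply_change(table, event)


replica: Dict[str, Dict[str, Any]] = {}

# Streaming: events are applied one at a time as they arrive.
apply_change(replica, ChangeEvent("insert", "42", {"status": "new"}))
apply_change(replica, ChangeEvent("update", "42", {"status": "paid"}))

# Batch: a finite backlog replayed through the identical code path.
apply_all(replica, [
    ChangeEvent("insert", "43", {"status": "new"}),
    ChangeEvent("delete", "42"),
])

print(replica)
```

The point of the design is that there is only one code path to test and operate. A backfill or nightly reload reuses the streaming handler instead of living in a separate ETL job.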

2. Decoupled by Design

The most future-proof architectures are those that allow components to evolve independently. Think LEGO blocks, not concrete monuments.

This means:

- Embracing event-driven architectures that naturally decouple producers from consumers

- Using standardized data contracts between systems

- Implementing async workflows by default

- Treating data platforms as products, not projects

The goal isn't just flexibility – it's survival. When (not if) you need to swap out components or add new capabilities, a decoupled architecture lets you evolve without revolution.
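As a rough illustration of decoupling through events and data contracts, the sketch below uses a tiny in-memory bus. `Contract`, `EventBus`, and the `orders.created` topic are hypothetical names invented for this example; a production system would sit on a real streaming backbone and a schema registry rather than a Python dictionary.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, Dict, List


@dataclass(frozen=True)
class Contract:
    """Hypothetical data contract: fields (and types) every event on a topic must carry."""
    topic: str
    version: int
    fields: Dict[str, type]

    def validate(self, payload: dict) -> None:
        for name, expected in self.fields.items():
            if name not in payload:
                raise ValueError(f"{self.topic} v{self.version}: missing field {name!r}")
            if not isinstance(payload[name], expected):
                raise TypeError(
                    f"{self.topic} v{self.version}: {name!r} must be {expected.__name__}"
                )


class EventBus:
    """In-memory stand-in for a streaming backbone; not a real broker client."""

    def __init__(self) -> None:
        self._contracts: Dict[str, Contract] = {}
        self._subscribers: DefaultDict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def register(self, contract: Contract) -> None:
        self._contracts[contract.topic] = contract

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> None:
        contract = self._contracts.get(topic)
        if contract is None:
            raise KeyError(f"no contract registered for topic {topic!r}")
        contract.validate(payload)  # reject bad data at the boundary
        for handler in self._subscribers[topic]:
            handler(payload)


bus = EventBus()
bus.register(Contract("orders.created", 1, {"order_id": str, "amount_cents": int}))

# Two independent consumers; the producer knows about neither of them.
billing: List[str] = []
analytics: List[int] = []
bus.subscribe("orders.created", lambda e: billing.append(e["order_id"]))
bus.subscribe("orders.created", lambda e: analytics.append(e["amount_cents"]))

bus.publish("orders.created", {"order_id": "A-1", "amount_cents": 1999})
print(billing, analytics)
```

Adding a third consumer here means one more `subscribe` call and no change to the producer, which is the property that lets components evolve independently.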

3. Data as a Product, Not a Byproduct

Stop treating data as something that just happens. In a future-proof architecture, data is a first-class product with:

- Clear ownership and governance

- Defined SLAs and quality metrics

- Versioning and lifecycle management

- Self-service access with proper controls

This shift in mindset changes everything from how you structure teams to how you build pipelines.
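One way to picture the product mindset is a small descriptor that carries ownership, a version, and measurable SLAs alongside the data. The sketch below is an assumption-laden illustration: the `DataProduct` class, its field names, and the thresholds are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List


@dataclass(frozen=True)
class DataProduct:
    """Hypothetical descriptor for a data product and its service levels."""
    name: str
    owner: str                 # accountable team
    version: str               # version of the published schema
    freshness_sla: timedelta   # maximum acceptable age of the newest record
    min_completeness: float    # minimum fraction of expected rows that must arrive

    def check(
        self,
        last_updated: datetime,
        rows_expected: int,
        rows_received: int,
        now: datetime,
    ) -> List[str]:
        """Return a list of SLA violations; an empty list means healthy."""
        violations: List[str] = []
        age = now - last_updated
        if age > self.freshness_sla:
            violations.append(f"stale: last update {age} ago, SLA is {self.freshness_sla}")
        completeness = rows_received / rows_expected if rows_expected else 1.0
        if completeness < self.min_completeness:
            violations.append(
                f"incomplete: {completeness:.1%} received, minimum is {self.min_completeness:.1%}"
            )
        return violations


orders = DataProduct(
    name="orders_enriched",
    owner="payments-team",
    version="2.1.0",
    freshness_sla=timedelta(minutes=5),
    min_completeness=0.99,
)

# A fixed "now" keeps the example deterministic.
now = datetime(2025, 1, 15, 12, 0, tzinfo=timezone.utc)
healthy = orders.check(now - timedelta(minutes=2), rows_expected=1000, rows_received=998, now=now)
degraded = orders.check(now - timedelta(minutes=12), rows_expected=1000, rows_received=950, now=now)

print(healthy)
print(degraded)
```

Once SLAs are expressed as data like this, they can be checked automatically on every pipeline run and surfaced to consumers, rather than living in a wiki page.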

The Technical Stack That Makes It Possible

Let's examine how data architectures need to evolve to meet future demands. First, let's look at what many organizations have today versus where they need to go.

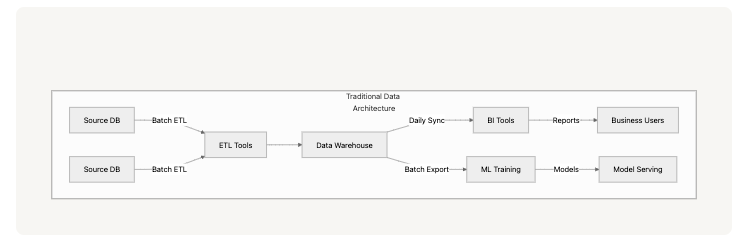

Traditional Architecture: The Legacy Approach

In traditional data architectures, we typically see:

- Batch ETL Processing

    - Scheduled jobs pulling data from source systems

    - Complex ETL tools managing transformations

    - Heavy reliance on data warehouses

    - Delayed insights and high latency

- Siloed ML Operations

    - Separate pipelines for ML training

    - Batch-oriented feature engineering

    - Limited real-time inference capabilities

    - Disconnected model serving

- Limited Real-time Capabilities

    - "Near real-time" through micro-batching

    - Multiple data copies across systems

    - Point-to-point integrations

    - High maintenance overhead

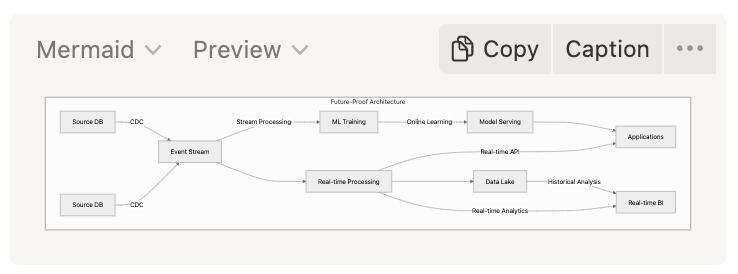

Future-Proof Architecture: The Modern Approach

The future-proof architecture fundamentally shifts how data flows through your organization:

- The Foundation Layer

    - CDC captures changes instantly from source systems

    - Event streaming backbone (like Kafka) provides real-time data highways

    - Unified processing engine handles both streaming and batch

    - Everything is real-time first, batch when needed

- The Processing Layer

    - Stream processing enables instant transformations

    - SQL and programmatic transformations coexist

    - ML models serve and train on live data

    - Automatic scaling based on actual load

- The Serving Layer

    - Multiple serving patterns (real-time, batch, hybrid)

    - Flexible consumption patterns (push, pull, subscribe)

    - Universal data format support

    - Granular controls and monitoring
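To give a feel for what a unified processing engine does, here is a deliberately small Python sketch of a tumbling-window count. The function name and event shape are assumptions for illustration. It also assumes events arrive in timestamp order, which real stream processors do not require because they handle late and out-of-order data.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator, Tuple

Event = Tuple[float, str]  # (event timestamp in seconds, key)


def tumbling_counts(
    events: Iterable[Event], window_s: float
) -> Iterator[Tuple[float, Dict[str, int]]]:
    """Yield (window_start, counts per key) each time a window closes.

    Assumes events arrive in timestamp order. Windows with no events are skipped.
    """
    current_start = None
    counts: Counter = Counter()
    for ts, key in events:
        start = ts - (ts % window_s)  # start of the window this event falls into
        if current_start is None:
            current_start = start
        elif start != current_start:
            # The event belongs to a later window, so emit the finished one.
            yield current_start, dict(counts)
            current_start, counts = start, Counter()
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the final (possibly partial) window


history = [(1.0, "view"), (4.0, "checkout"), (12.0, "view"), (13.0, "view"), (25.0, "checkout")]


def live_feed() -> Iterator[Event]:
    """Stand-in for an unbounded source; here it simply replays the same events."""
    for event in history:
        yield event


batch_result = list(tumbling_counts(history, window_s=10.0))         # bounded input
stream_result = list(tumbling_counts(live_feed(), window_s=10.0))    # iterator input

print(batch_result)
```

The same function serves both the list and the generator, which is the essence of "real-time first, batch when needed": the transformation is written once and the input is either bounded or unbounded.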

Key Architectural Differences

The shift from traditional to future-proof architecture brings several critical improvements:

- Data Freshness

    - Traditional: Hours or days old

    - Future-proof: Real-time or near-instantaneous

- Scaling Approach

    - Traditional: Vertical scaling with fixed resources

    - Future-proof: Horizontal scaling with elastic resources

- Integration Pattern

    - Traditional: Point-to-point connections

    - Future-proof: Event-driven backbone

- ML/AI Support

    - Traditional: Separate batch pipelines

    - Future-proof: Integrated real-time feature engineering

Implementation Using Meroxa

Here's how Meroxa implements these architectural principles:

- Source Integration

    - Native CDC connectors for all major databases

    - Zero-impact change capture

    - Automatic schema evolution handling

- Real-time Processing

    - Instant data transformations

    - Built-in stream processing

    - Scalable event routing

- Destination Support

    - Multiple output formats and protocols

    - Real-time API endpoints

    - Flexible consumption patterns

Making It Real: The Implementation Roadmap

Here's how to move from theory to practice:

Phase 1: Foundation Setting (3-6 months)

Start with a single high-value data flow:

- Implement CDC on your most critical data sources

- Set up your real-time streaming backbone

- Build your first real-time pipelines

- Establish monitoring and observability
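For the monitoring step, a useful first metric is end-to-end latency: the time between a change being created at the source and being delivered downstream. The sketch below is one possible way to summarize it, assuming each sample is a pair of millisecond timestamps. The function names and the 100 ms budget are illustrative, not recommendations.

```python
import math
from typing import Dict, Sequence, Tuple


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[rank - 1]


def latency_report(samples: Sequence[Tuple[int, int]], budget_ms: float) -> Dict[str, object]:
    """Summarize (created_at_ms, delivered_at_ms) pairs against a p95 latency budget."""
    latencies = [delivered - created for created, delivered in samples]
    p50 = percentile(latencies, 50)
    p95 = percentile(latencies, 95)
    return {"p50_ms": p50, "p95_ms": p95, "within_budget": p95 <= budget_ms}


# Ten sample events; one of them (250 ms) is a slow outlier.
samples = [
    (1000, 1012), (1100, 1115), (1200, 1209), (1300, 1550), (1400, 1414),
    (1500, 1511), (1600, 1613), (1700, 1710), (1800, 1816), (1900, 1912),
]
report = latency_report(samples, budget_ms=100)
print(report)
```

Tracking a high percentile rather than the average matters here: the median in this sample looks healthy, while the p95 exposes the outlier that your slowest consumers actually experience.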

Phase 2: Scaling Out (6-12 months)

Expand your real-time capabilities:

- Add more data sources and types

- Implement self-service capabilities

- Build out your data product framework

- Establish governance patterns

Phase 3: Innovation Enablement (Ongoing)

Now you can focus on value creation:

- Enable ML/AI workflows

- Implement advanced analytics

- Build real-time features

- Enable new business capabilities

The Role of Modern Platforms

This is where platforms like Meroxa come in. We're not just another tool in your stack – we're the foundation that makes this architecture possible without requiring an army of specialists.

With Meroxa, you get:

- Native CDC capabilities that just work

- Real-time processing without the complexity

- Built-in governance and monitoring

- Enterprise-grade security and reliability

The Cost of Waiting

Every day you delay moving to a real-time, future-proof architecture is:

- Another day of accumulated technical debt

- Another missed opportunity for real-time insights

- Another competitor potentially pulling ahead

- Another AI use case you can't support

Your Next Steps

- Assess your current architecture's real-time capabilities

- Identify your highest-value real-time use cases

- Start small but think big – pick a pilot project

- Partner with platforms that support your vision

Looking Ahead

The future of data architecture isn't about bigger batch jobs or more complex ETL pipelines. It's about real-time, adaptable, and intelligent systems that can evolve as your needs change.

The question isn't whether to make this shift – it's how quickly you can make it happen.

Ready to future-proof your data architecture? Let's talk about how Meroxa can help you build a foundation for real-time success. Schedule a conversation with our solutions architects today.