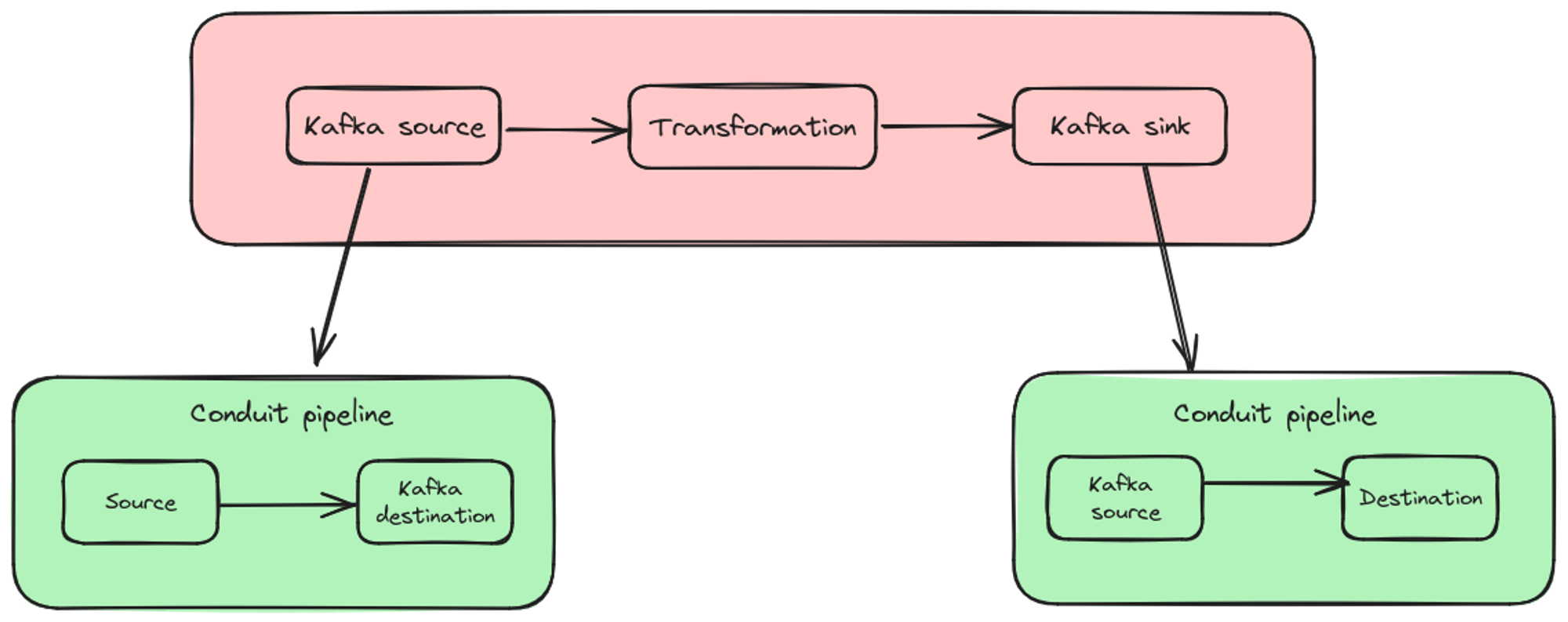

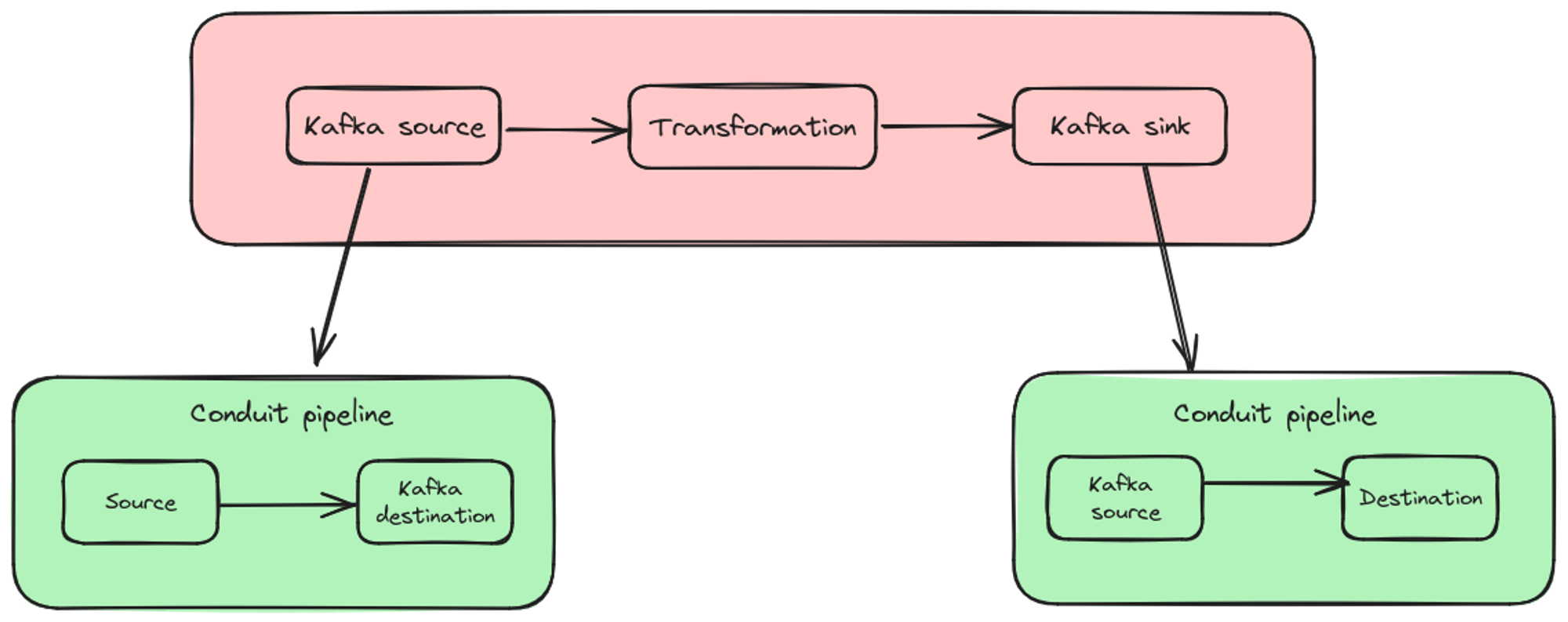

We’re excited to introduce our latest offering, the Conduit Platform, now powered by our open-source Conduit core. It brings a host of new features and improvements to your real-time data streaming experience, including better performance, scalability, and usability, plus access to over 100 connectors maintained by our dedicated open-source community. Here’s a closer look at what’s new and how it can benefit your data operations.

Introducing the Conduit connector for Apache Flink, a powerful combination that significantly expands Flink’s capabilities. Apache Flink is renowned for its robust stream processing, while Conduit offers a lightweight, fast data streaming solution that simplifies building connectors.

Explore the power of Conduit to create custom connectors tailored to your specific data integration needs. Learn how to use the Conduit SDK for enhanced data management and discover a world of possibilities in streamlining your data workflows. Start building your custom connector today!

Explore the new features and enhancements in Conduit version 0.10, designed to streamline your data integration processes. Discover how our latest update can help improve efficiency, security, and performance for your data operations. Upgrade today and transform how you manage data with Conduit 0.10.

Discover the excitement of Hackweek! Dive into our latest blog post to explore innovative projects and creative breakthroughs from our most recent Hackweek. Learn how teams collaborate to turn bold ideas into reality, fostering a culture of innovation. Perfect for tech enthusiasts and creative thinkers alike!

Explore the capabilities of Meroxa's Conduit HTTP Connector, a robust tool designed to enhance data integration by facilitating seamless communication with any API endpoint. Perfect for developers and enterprises looking to streamline data workflows and maximize connectivity. Discover how our HTTP Connector can transform your data management strategy.

Learn how OpenAI's GPT-4 has helped to streamline data connector building for Meroxa, reducing development time and effort.

The Spire Maritime AIS source data integration is the first of its kind. It works natively with Meroxa's stream processing data platform.

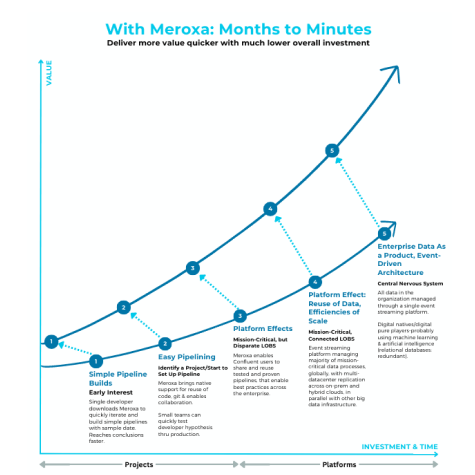

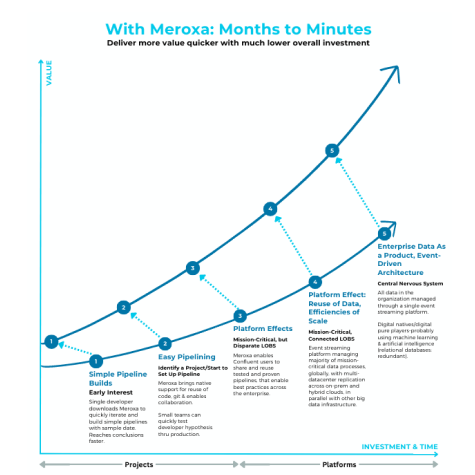

Meroxa enables big data projects to evolve with business needs and address data volatility challenges.

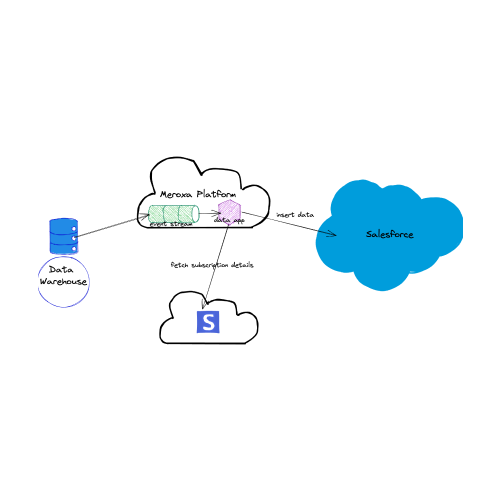

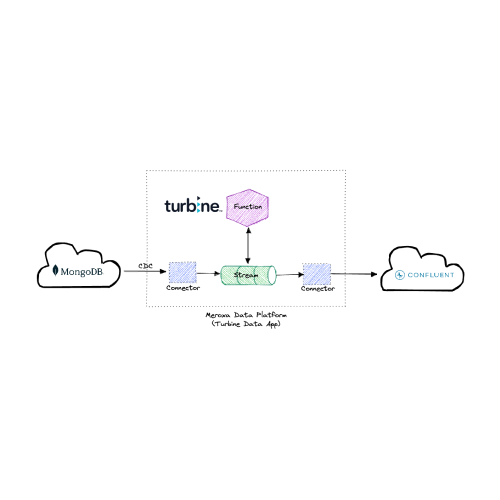

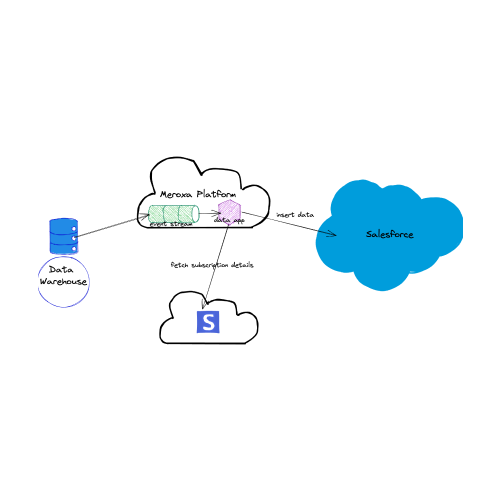

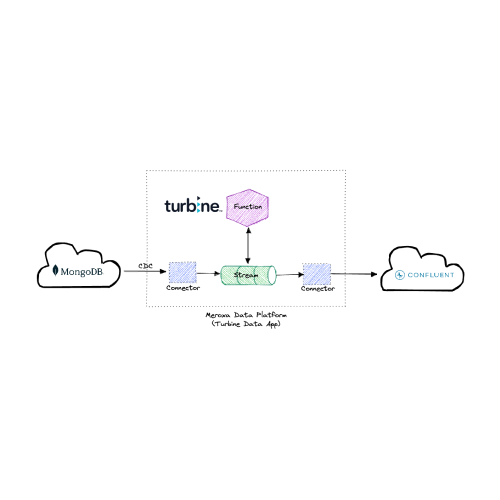

Learn how Meroxa's data platform can improve your time to value and enhance your experience when working with Confluent.

Meroxa helps you liberate your company's data so you can avoid vendor lock-in.

Thanks to its code-first strategy, Meroxa is the only independent streaming integration provider that frees you from vendor lock-in.

Ingest, transform & stream data from your Oracle Database with Meroxa in a few lines of code.

Learn how to implement the Medallion Architecture using Meroxa to streamline analytics and make it easier to work with large amounts of data.
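To make the layering concrete, here is a minimal, framework-free Python sketch of the bronze/silver/gold pattern: raw data lands untouched in bronze, is cleaned and deduplicated into silver, and is aggregated into a business-level gold view. It does not use Meroxa or Turbine; the order records, field names, and the functions `to_silver` and `to_gold` are hypothetical.

```python
from collections import defaultdict

# Bronze: raw events exactly as ingested (hypothetical sample data).
bronze = [
    {"order_id": "1", "customer": " Alice ", "amount": "20.00"},
    {"order_id": "2", "customer": "bob", "amount": "15.50"},
    {"order_id": "2", "customer": "bob", "amount": "15.50"},  # duplicate delivery
    {"order_id": "3", "customer": "alice", "amount": "not-a-number"},  # bad row
]


def to_silver(rows):
    """Clean, validate, and deduplicate bronze rows by primary key."""
    seen = set()
    silver = []
    for row in rows:
        if row["order_id"] in seen:
            continue  # drop duplicate deliveries
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # drop rows that fail validation
        seen.add(row["order_id"])
        silver.append({
            "order_id": row["order_id"],
            "customer": row["customer"].strip().lower(),  # normalize names
            "amount": amount,
        })
    return silver


def to_gold(rows):
    """Aggregate silver rows into a business-level view: revenue per customer."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["customer"]] += row["amount"]
    return dict(totals)


silver = to_silver(bronze)
gold = to_gold(silver)
print(gold)  # {'alice': 20.0, 'bob': 15.5}
```

Keeping bronze untouched means the silver and gold layers can always be rebuilt if the cleaning or aggregation rules change.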

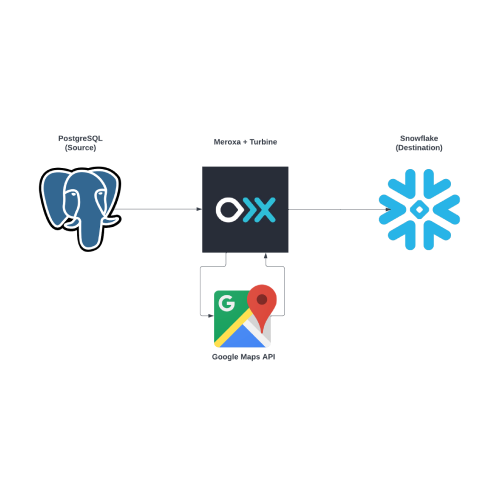

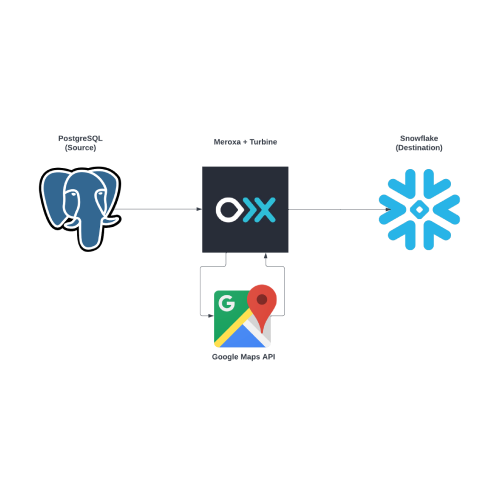

Learn how to use the Meroxa Platform along with the Turbine Framework to transform, enrich, orchestrate, & analyze data in real-time.

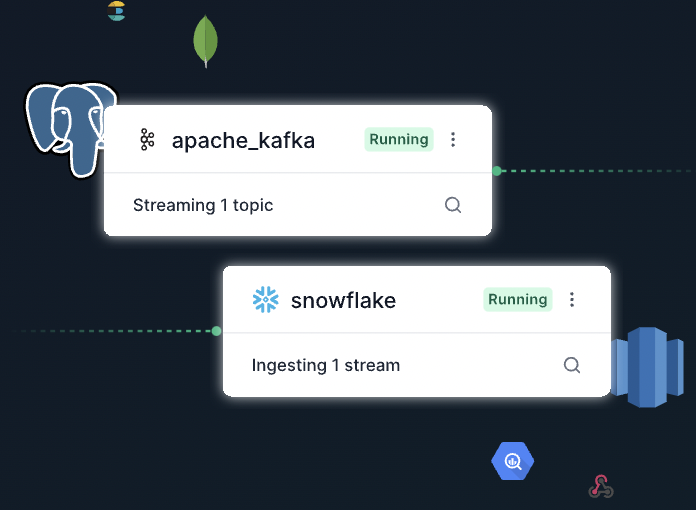

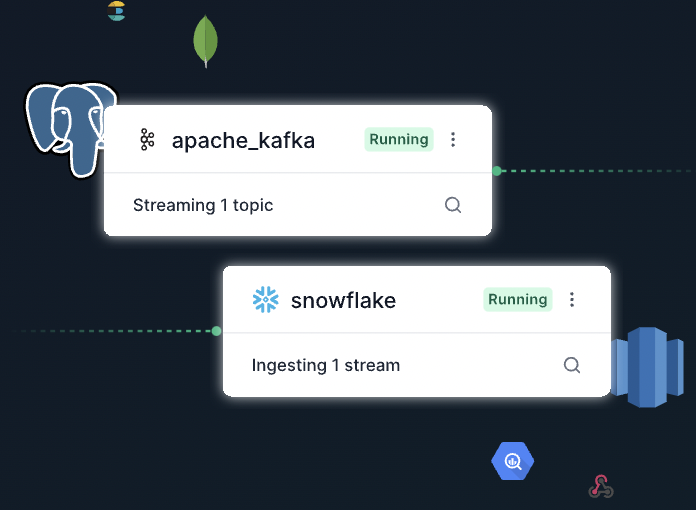

With Meroxa, companies optimize Snowflake storage, compute, and operational costs while making their developers much more productive.

Meroxa saved money by replacing Workato with a Turbine data app, which lets you quickly sync, persist, and transform data between data infrastructures.

Now Ruby developers can build streaming apps on Meroxa using Ruby. Meroxa is a stream processing application PaaS.

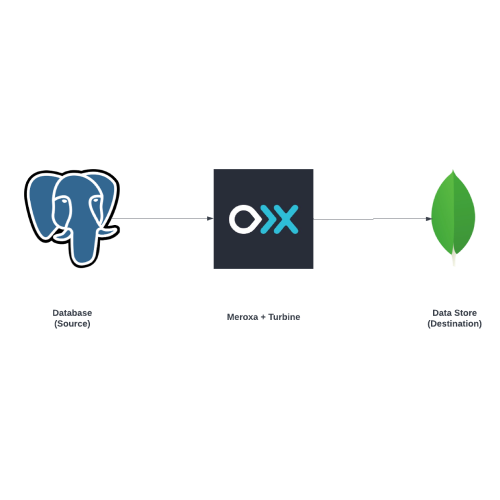

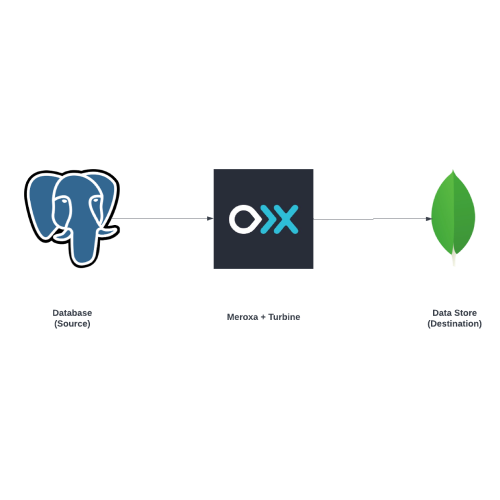

Real-time data sync, transformation & migration from PostgreSQL to MongoDB using Meroxa with Change Data Capture (CDC).

Visualizing the Turbine data app provides insight into the runtime details of the application’s components on the platform.

Learn how to efficiently pull data out of MongoDB in real-time using a Meroxa Turbine data stream processing app.

Combining Ruby’s simplicity and power with Turbine, Rubyists can now build data streaming apps with development workflows you know and love.

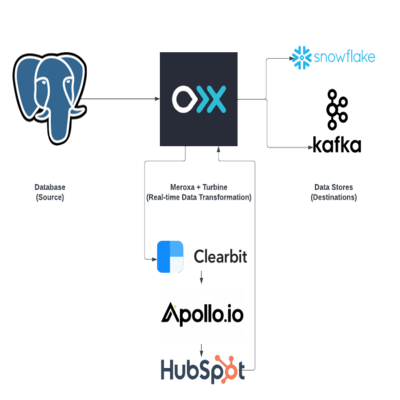

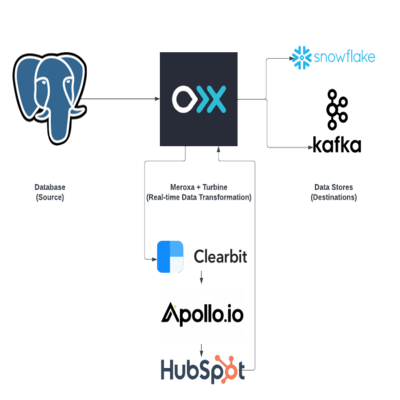

Use Meroxa Turbine to call multiple APIs in real-time to transform & enrich your data via Clearbit, Apollo, and HubSpot.

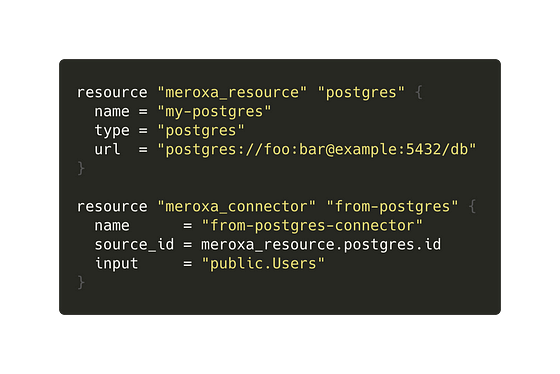

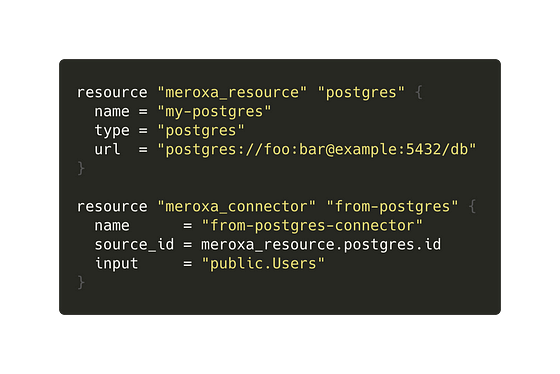

Stream data from PostgreSQL to Apache Kafka using four lines of code with change data capture.

With Meroxa’s newest feature, developers can now easily invite their teammates to their account to share resources and build data applications together.

Building and maintaining a CLI is a daunting task if you don’t have some guidance along the way. We identified some best practices to help you.

Bringing continuous delivery to Kafka and streaming data apps with Apache Kafka Connector and Feature Branch Deploys.

We are taking an important step towards helping customers build data applications with support for Apache Kafka as a resource on Meroxa.

Data apps may undergo several code changes during the development lifecycle. Feature branches allow developers to branch off the main or production instance of the data app code without impacting production code.

Real-Time Analytics Using the Kappa Architecture in ~20 Lines of Code with Turbine, Materialize, Spark, & S3.
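The core idea of the Kappa Architecture is that a single stream-processing path serves both real-time and historical needs: every event lands in an append-only log, views are derived by processing that log, and changing the logic means replaying the log through the new code rather than maintaining a separate batch layer. The minimal Python sketch below illustrates only that idea; it does not use Turbine, Materialize, Spark, or S3, and the `Log` class, event fields, and view functions are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Log:
    """An append-only event log, standing in for a stream such as a Kafka topic."""
    events: list = field(default_factory=list)

    def append(self, event):
        self.events.append(event)

    def read_from(self, offset=0):
        # Replaying from offset 0 lets any view be rebuilt from scratch.
        return self.events[offset:]


def views_per_user_v1(events):
    """Original job: count raw page views per user."""
    view = {}
    for event in events:
        if event["type"] == "page_view":
            view[event["user"]] = view.get(event["user"], 0) + 1
    return view


def views_per_user_v2(events):
    """Revised job: count distinct pages per user."""
    pages = {}
    for event in events:
        if event["type"] == "page_view":
            pages.setdefault(event["user"], set()).add(event["page"])
    return {user: len(seen) for user, seen in pages.items()}


log = Log()
log.append({"type": "page_view", "user": "u1", "page": "/home"})
log.append({"type": "page_view", "user": "u1", "page": "/home"})
log.append({"type": "page_view", "user": "u2", "page": "/pricing"})
log.append({"type": "signup", "user": "u2"})

# Both versions read the same log; no separate batch pipeline is needed.
v1 = views_per_user_v1(log.read_from(0))
v2 = views_per_user_v2(log.read_from(0))
print(v1)  # {'u1': 2, 'u2': 1}
print(v2)  # {'u1': 1, 'u2': 1}
```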

What if you could easily access and use categorical data to detect dangerous anomalies? With Turbine and thatDot Novelty Detector, you can.

With Meroxa’s Turbine SDK, you can simplify data activation in code, reducing the need for multiple point solutions for transformation and reverse ETL.

A data app is an application that uses real-time or near-real-time events to solve a problem.

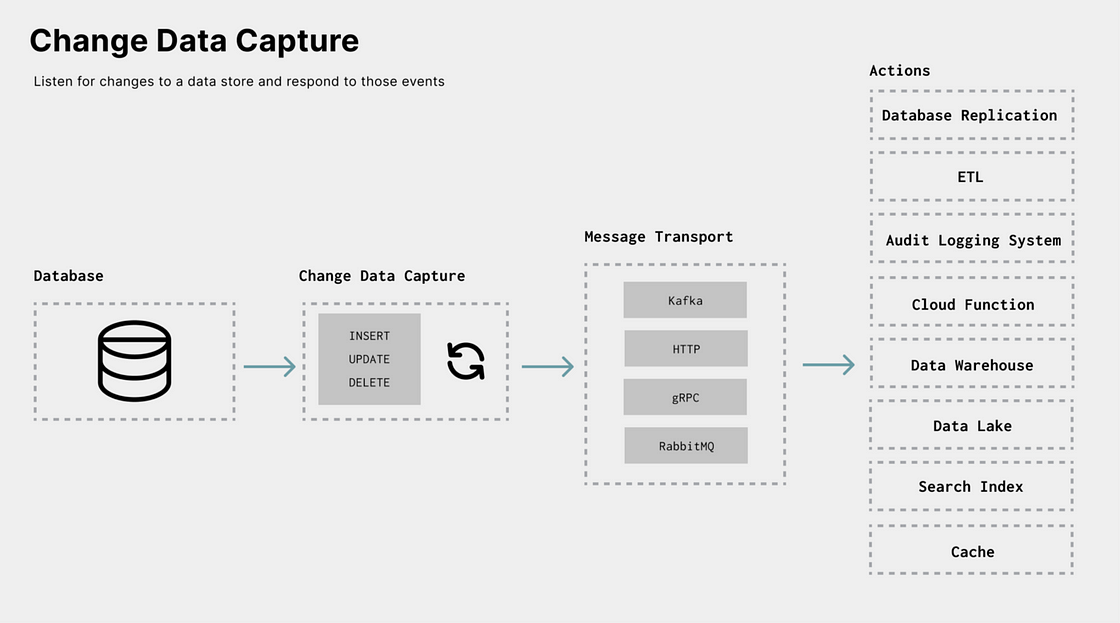

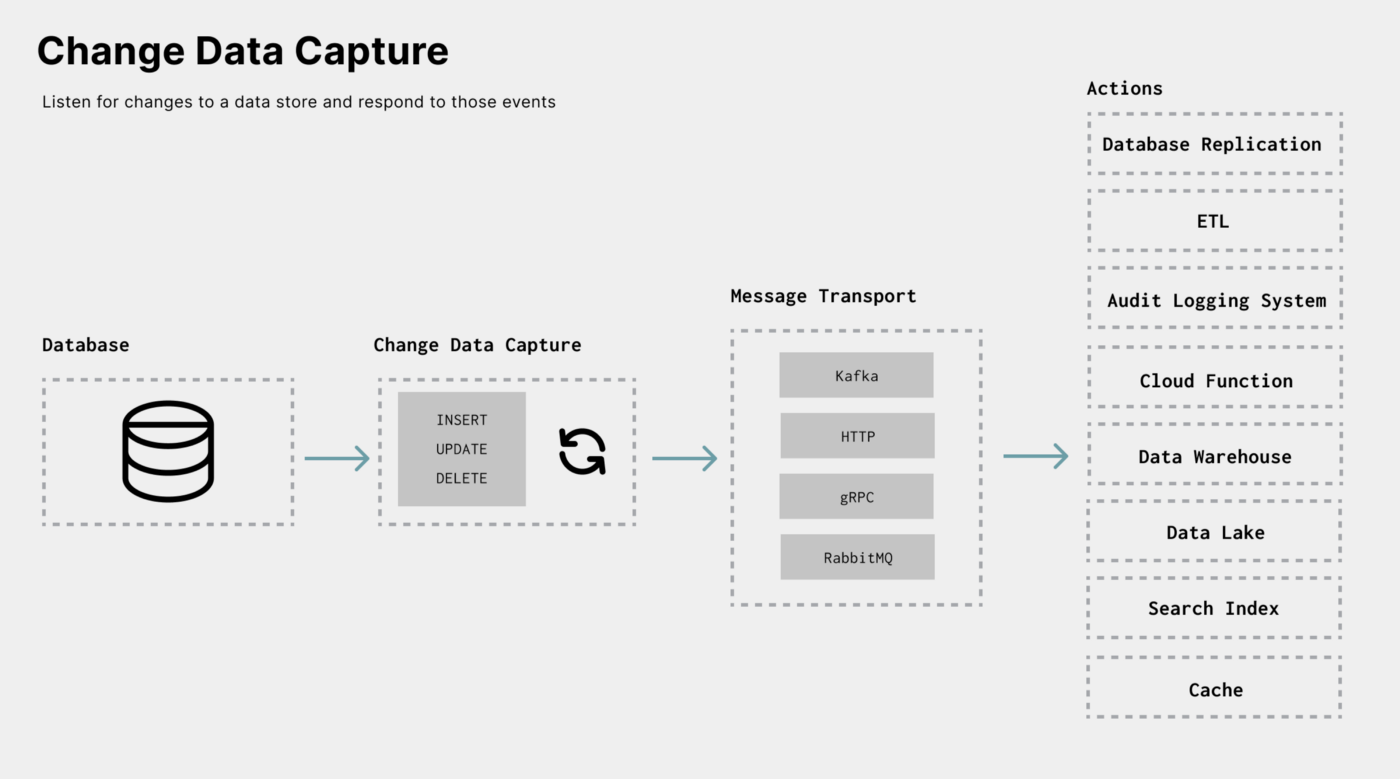

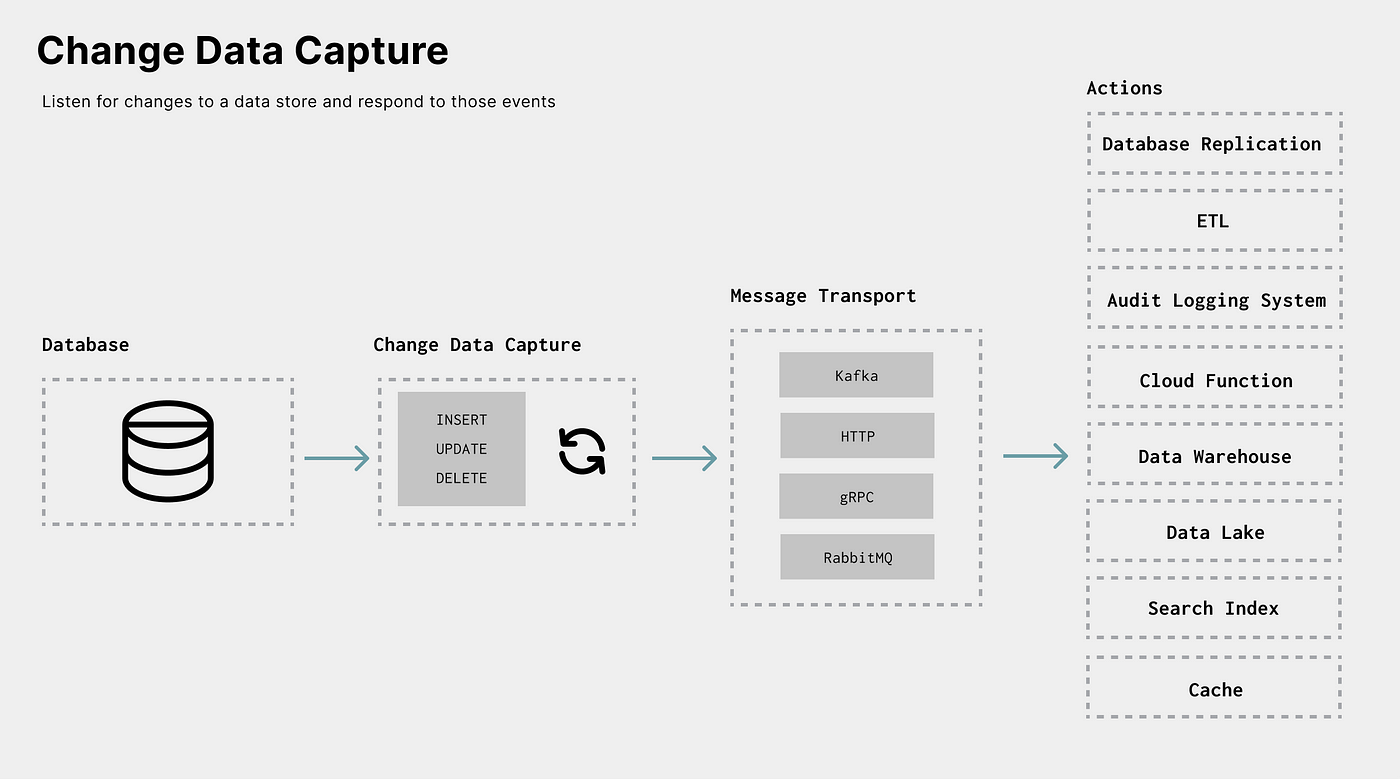

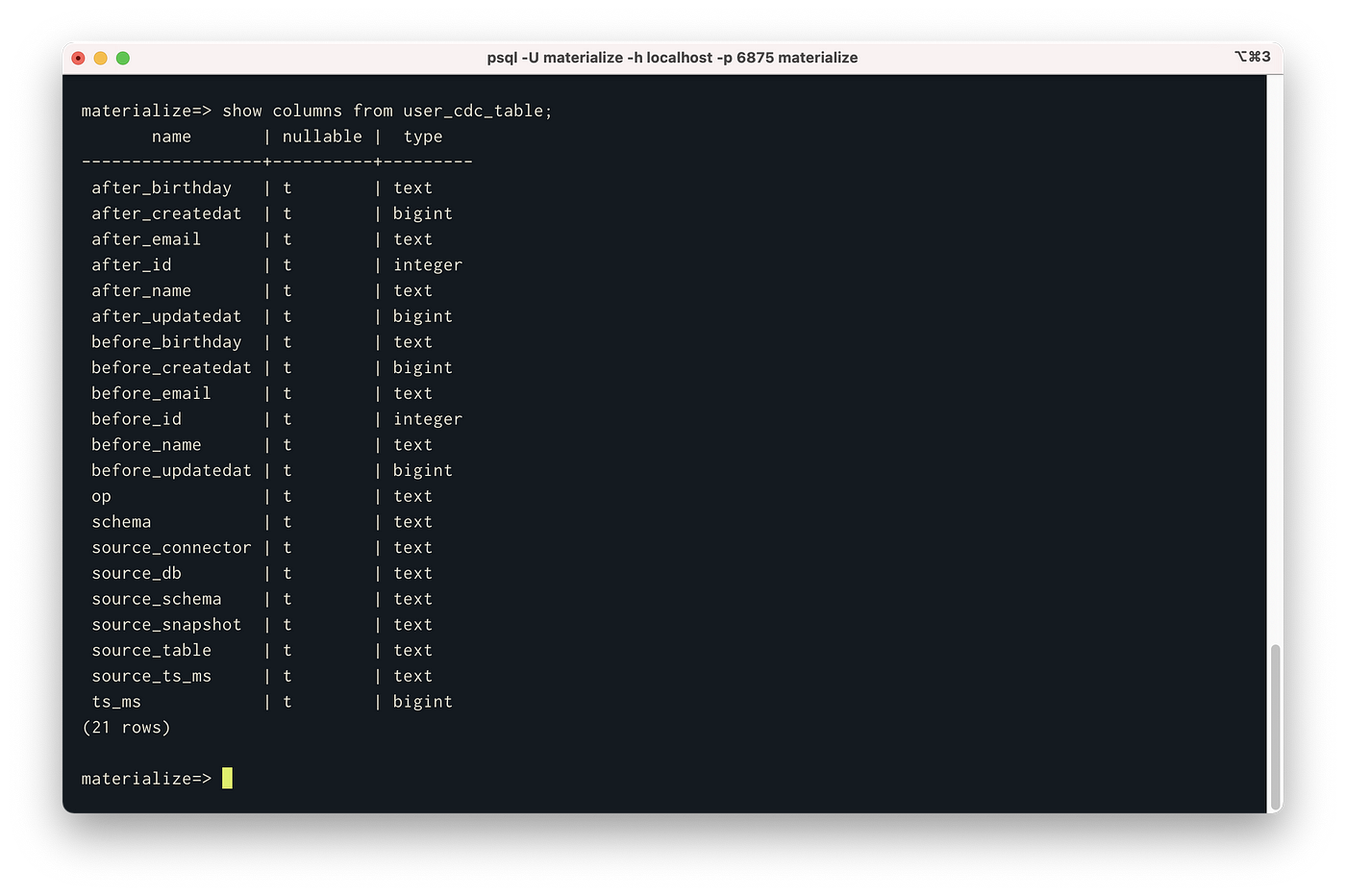

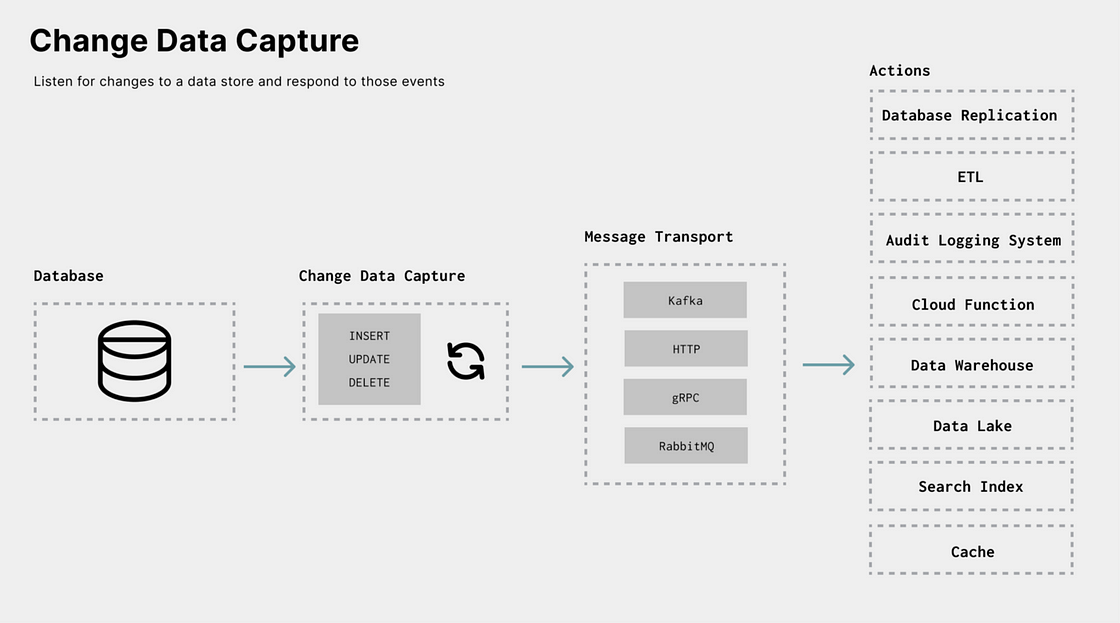

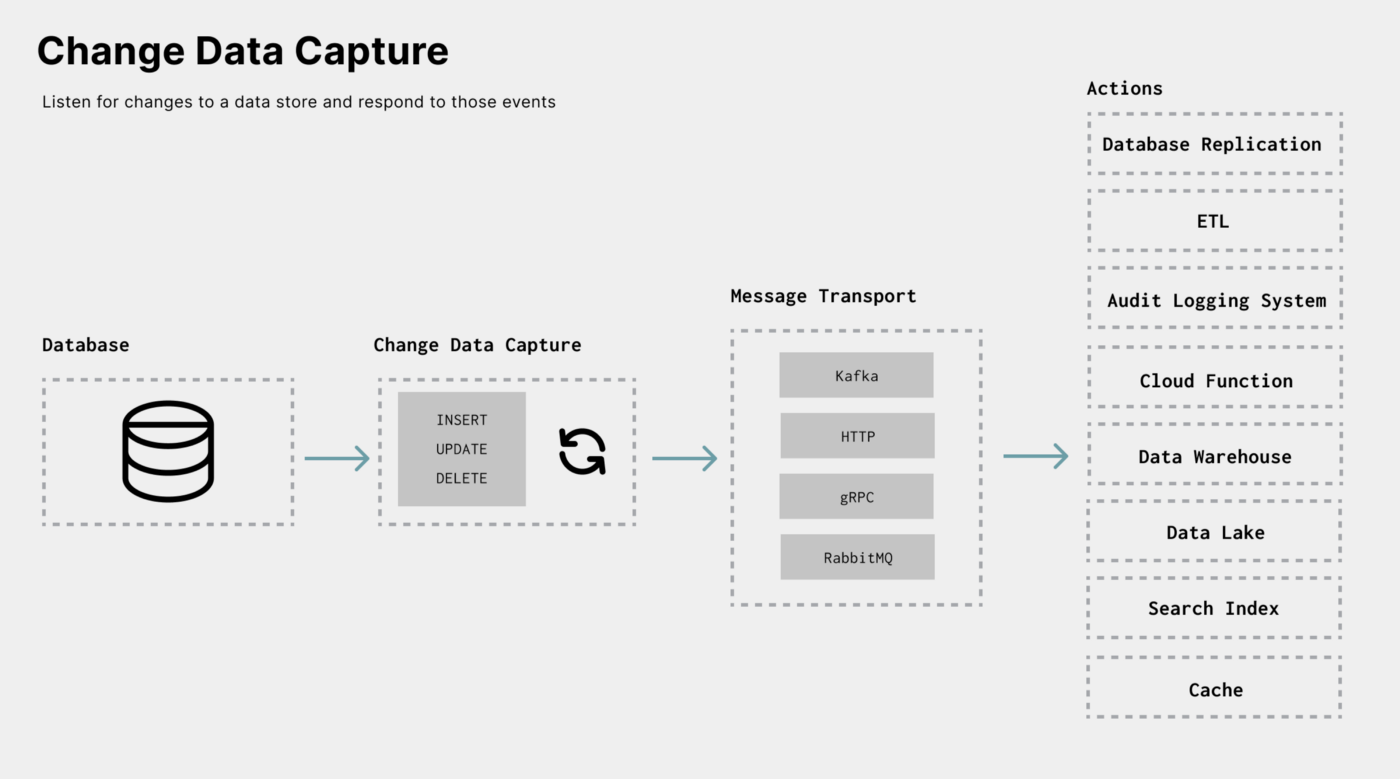

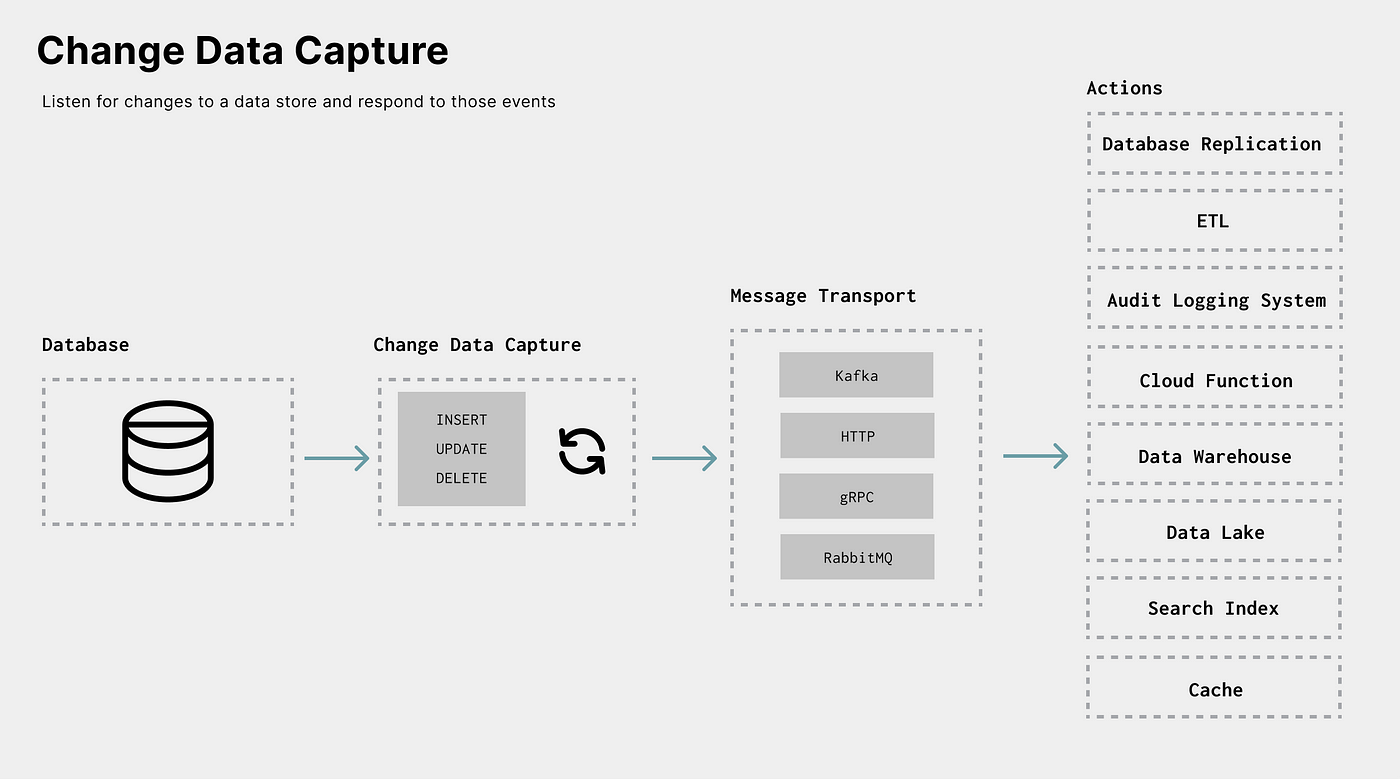

Change Data Capture (CDC) is a general term for a mechanism that communicates not just the current state of some data in an upstream resource, but also the changes made to it.
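A CDC stream carries a sequence of change events rather than a single snapshot, so a downstream consumer can both rebuild current state and see what happened along the way (for example, that a row was deleted). The Python sketch below applies such events to an in-memory replica; the event shape and the `create`/`update`/`delete` operation names are hypothetical simplifications, not a specific connector's record format.

```python
# Hypothetical change events emitted by an upstream database, in commit order.
events = [
    {"operation": "create", "key": 1, "after": {"id": 1, "email": "a@example.com"}},
    {"operation": "create", "key": 2, "after": {"id": 2, "email": "b@example.com"}},
    {"operation": "update", "key": 1,
     "before": {"id": 1, "email": "a@example.com"},
     "after": {"id": 1, "email": "a@new.example.com"}},
    {"operation": "delete", "key": 2, "before": {"id": 2, "email": "b@example.com"}},
]


def apply_change(replica, event):
    """Apply one change event to a downstream replica keyed by primary key."""
    op = event["operation"]
    if op in ("create", "update"):
        replica[event["key"]] = event["after"]  # upsert the new row image
    elif op == "delete":
        replica.pop(event["key"], None)  # remove the row if present
    else:
        raise ValueError(f"unknown operation: {op!r}")
    return replica


replica = {}
for event in events:
    apply_change(replica, event)

print(replica)  # {1: {'id': 1, 'email': 'a@new.example.com'}}
```

A plain snapshot of the source would show only row 1; the event stream also records that row 1 changed email and row 2 was deleted.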

Since launching last April, Meroxa has become the de facto platform for creating real-time data pipelines for over 300 companies.

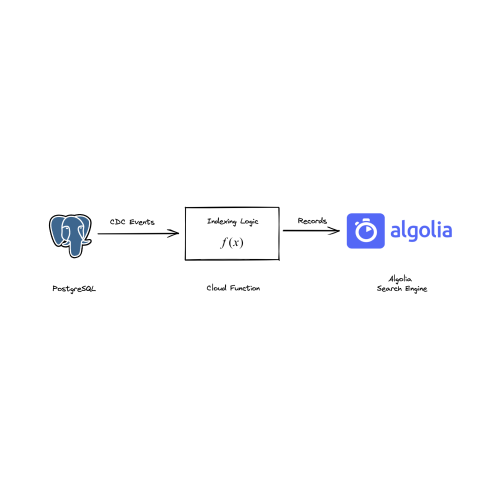

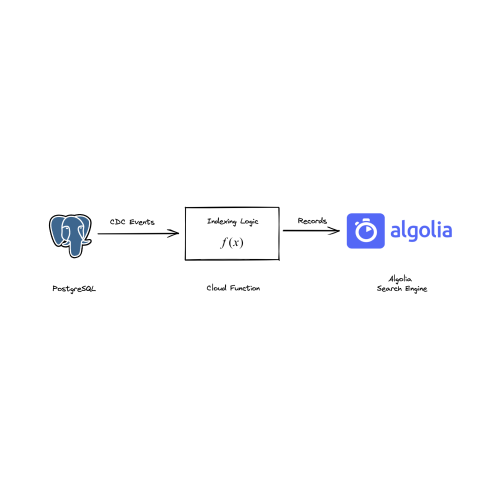

Learn how to send and continuously sync data to Algolia using Turbine. With Turbine, you can properly test, review, and build data integrations in a code-first way.

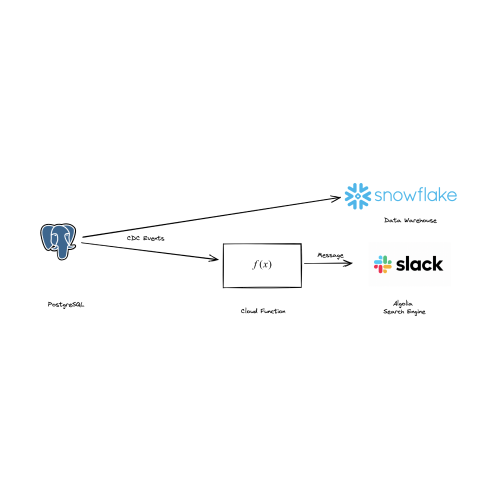

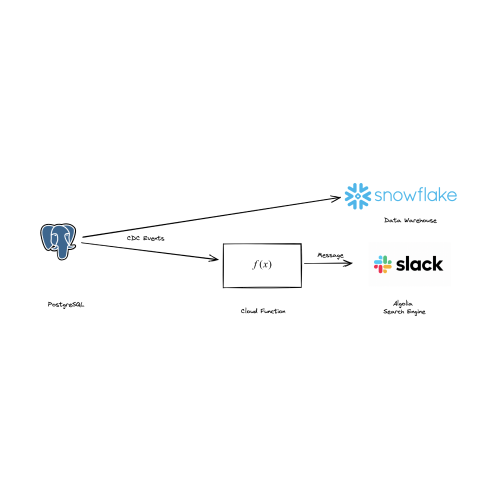

Use Turbine, Meroxa's stream processing application framework, to perform real-time e-commerce order data warehousing and alerting.

We’re excited to share the next chapter of Meroxa and what it means for software engineers to build, test and deploy data applications.

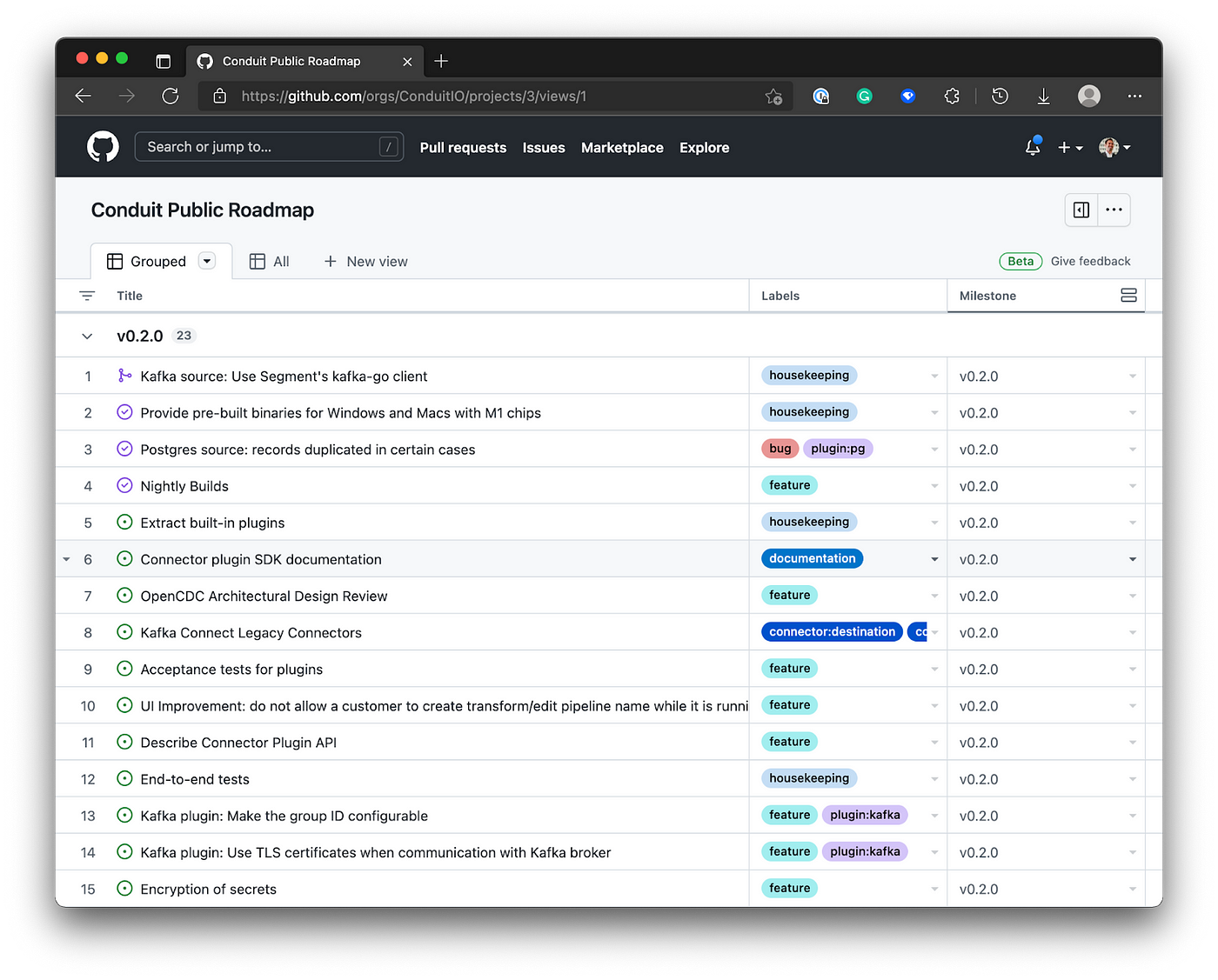

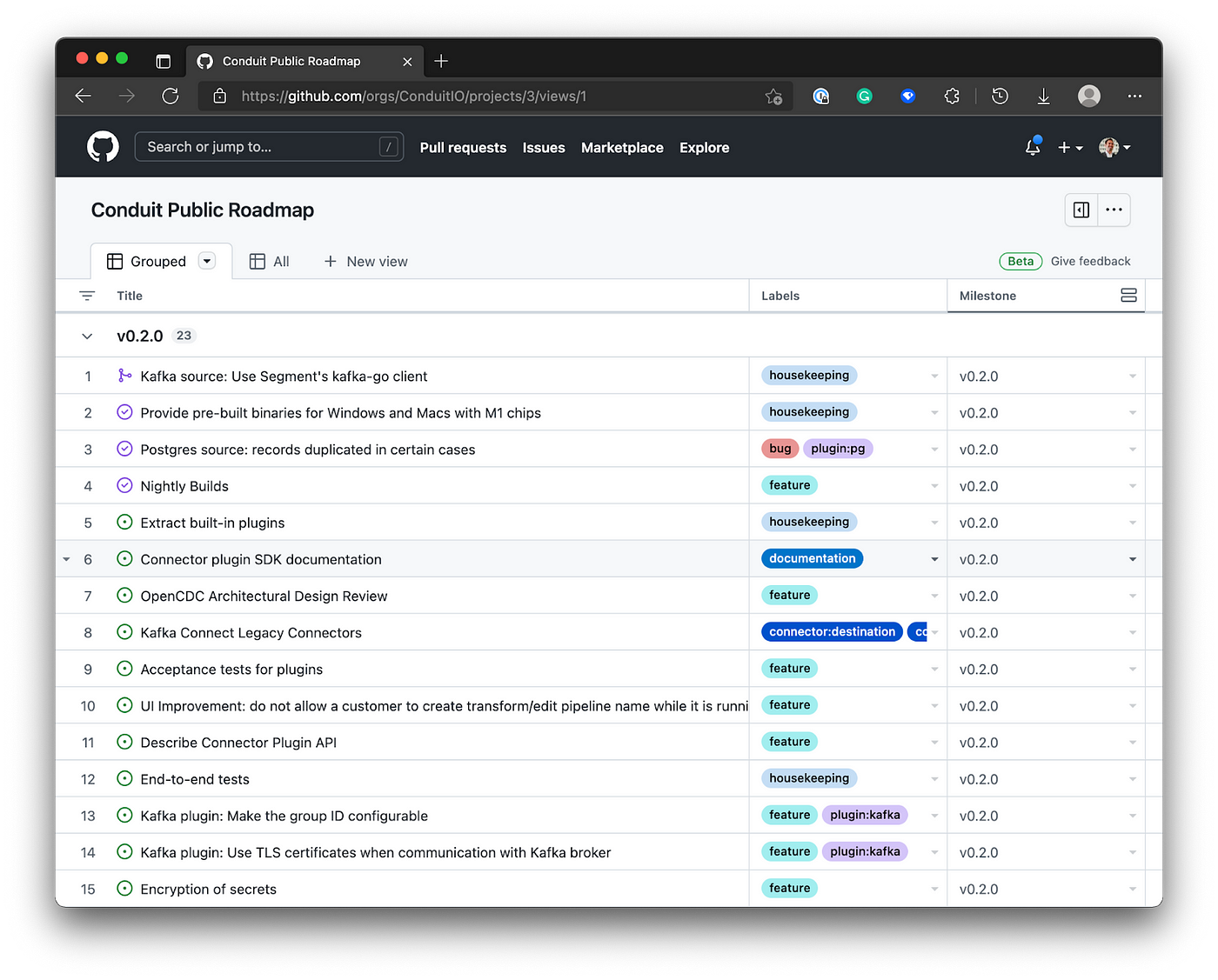

The Conduit roadmap is meant to provide insight into the major bodies of work we want to achieve within any given release.

The world is trending towards more rapid delivery of goods and services. We use the term “Real-time” to mean that it happens as close to “now” as possible.

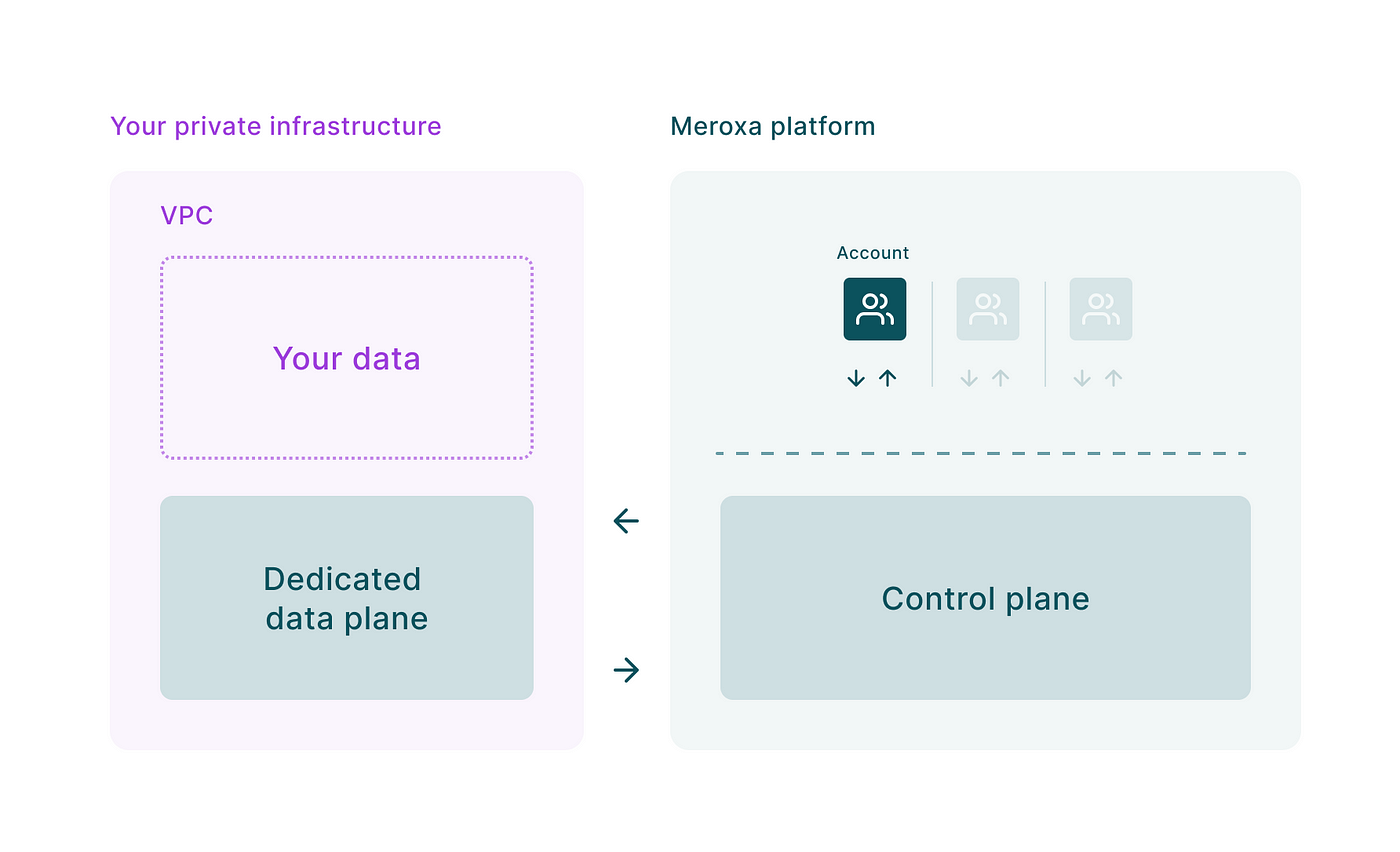

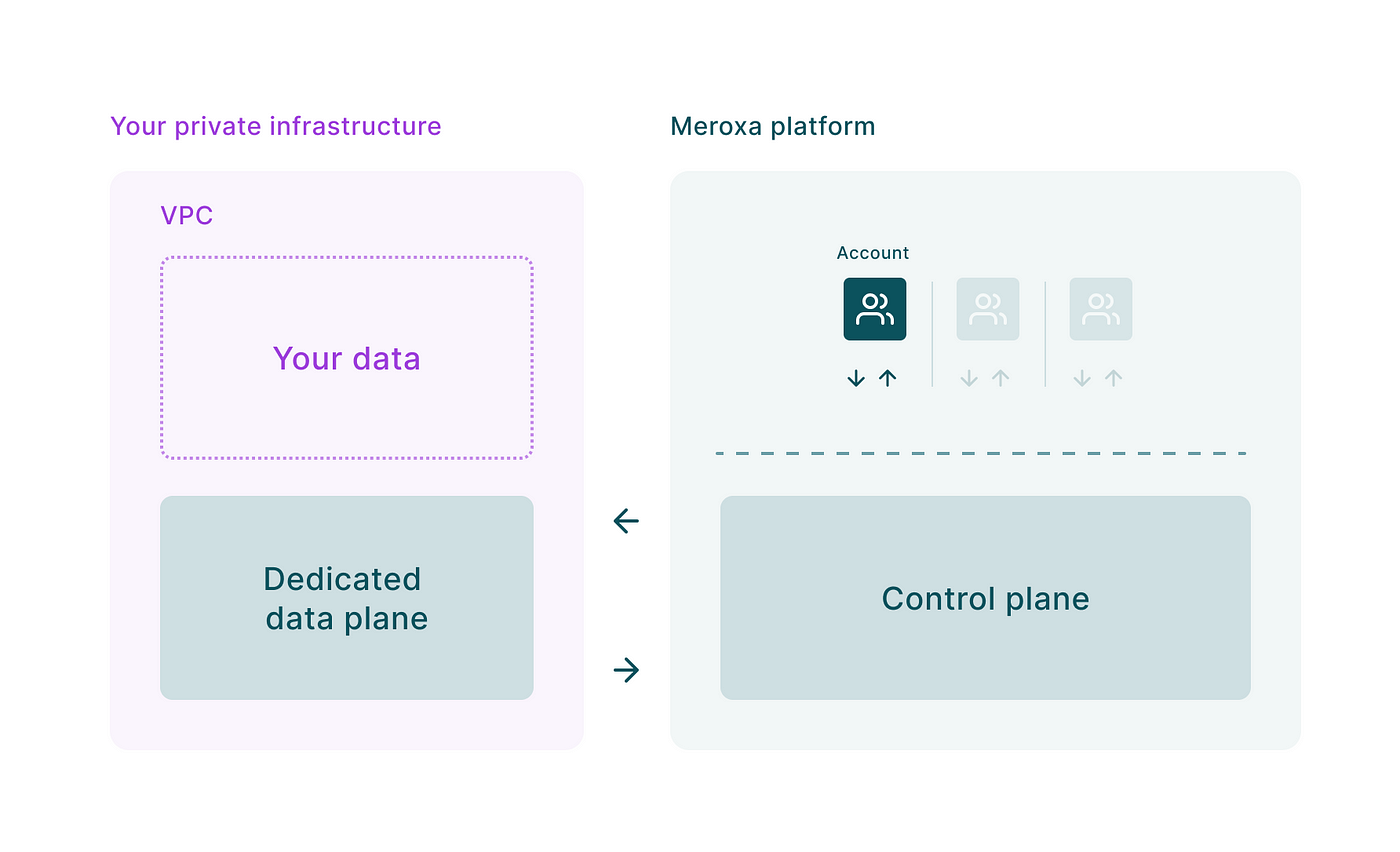

Today, we’re excited to announce the Self-Hosted Environments Beta.

Step-by-step instructions on how to obtain a Meroxa access token. The Meroxa access token is needed to authenticate to the Meroxa API programmatically.
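Once you have a token, programmatic clients typically send it in an HTTP `Authorization` header. The Python sketch below assumes the Meroxa API accepts a standard `Bearer` token; the endpoint URL and the `MEROXA_ACCESS_TOKEN` environment variable are hypothetical placeholders, not documented Meroxa values, and the request is only built, not sent.

```python
import os
import urllib.request

# Hypothetical placeholder endpoint; substitute the real API URL you are calling.
API_URL = "https://api.example.com/v1/resources"


def build_request(token: str, url: str = API_URL) -> urllib.request.Request:
    """Build (but do not send) a GET request authenticated with a bearer token."""
    if not token:
        raise ValueError("access token is empty")
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
        method="GET",
    )


# Read the token from the environment instead of hard-coding it.
# MEROXA_ACCESS_TOKEN is a hypothetical variable name chosen for this sketch.
token = os.environ.get("MEROXA_ACCESS_TOKEN")
if token:
    req = build_request(token)
    # urllib.request.urlopen(req) would send the request; omitted here.
```

Keeping the token in an environment variable (or a secrets manager) avoids committing credentials to source control.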

Let’s discuss the use cases of CDC and look at the tools that help you add CDC into your architecture.

Microsoft SQL Server is a powerful, widely used relational database management system. Today, we’re releasing a beta version of our Microsoft SQL Server connector.

Change Data Capture (CDC) is an efficient and scalable model that simplifies the implementation of real-time systems.

With the Meroxa CLI and the Meroxa Dashboard, your pipelines are streaming, real-time, and up and running in minutes, not months.

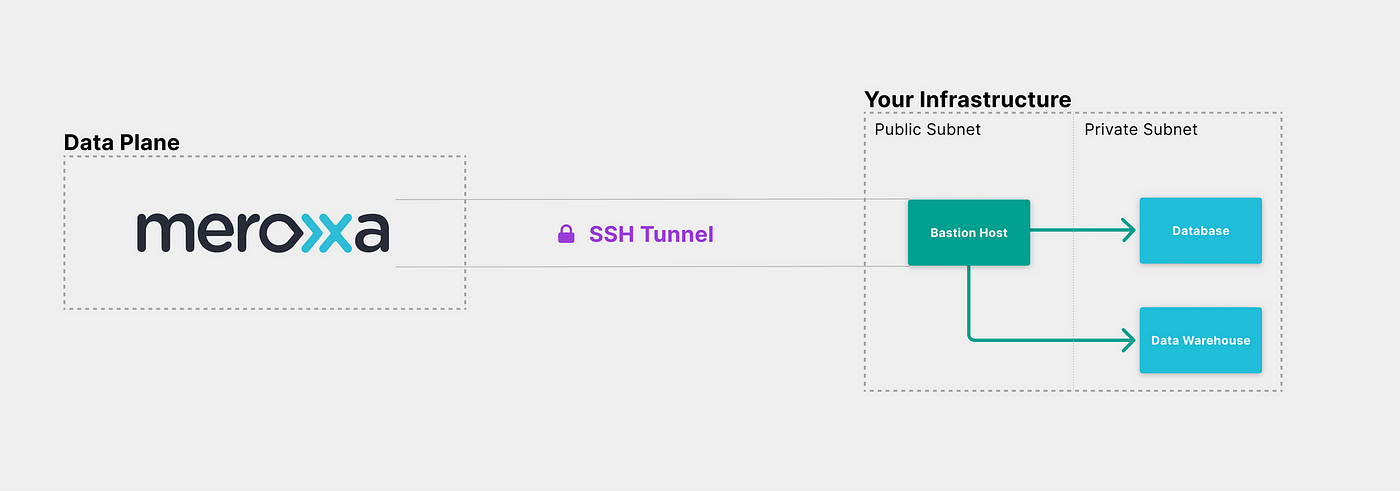

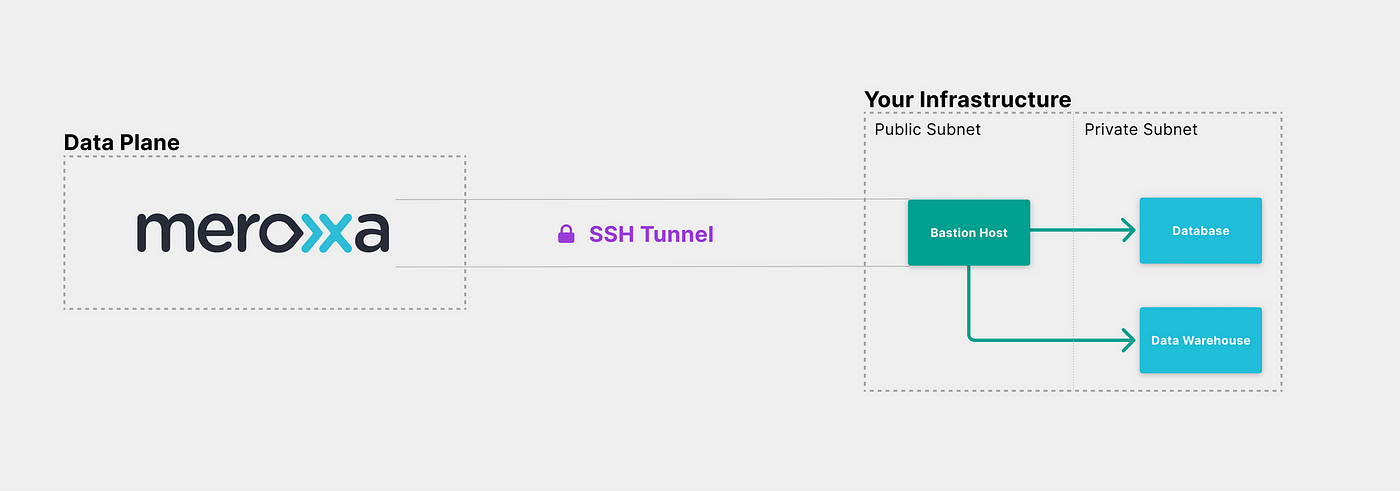

When you build a data pipeline using Meroxa, your data is encrypted in transit and at rest. Today’s platform update adds a new layer of security to Meroxa.

MySQL, one of the most popular open-source databases for developers, is now in public beta as a source and destination for real-time data streams.

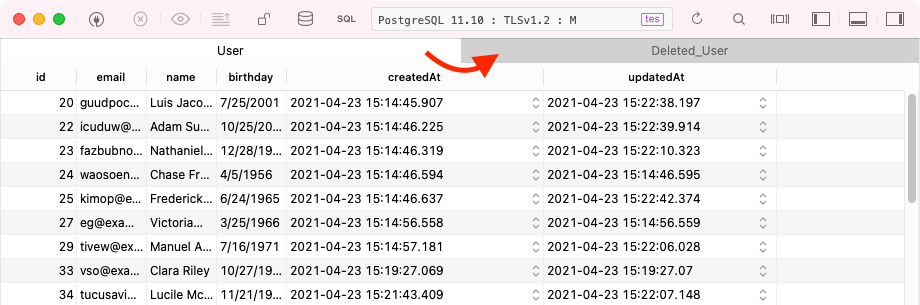

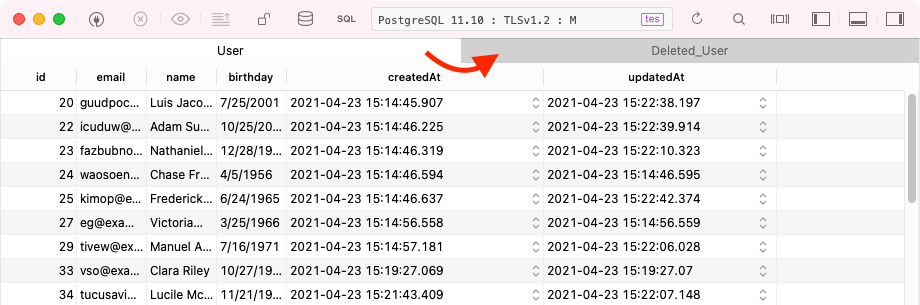

Postgres Triggers and Functions are powerful features that allow you to listen for DELETE operations that occur within a table and insert the deleted row into a separate archive table.
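In PostgreSQL this is usually a PL/pgSQL function declared `RETURNS trigger` that inserts `OLD` into the archive table, attached with `CREATE TRIGGER ... AFTER DELETE ... FOR EACH ROW EXECUTE FUNCTION ...`. To keep the sketch runnable without a Postgres server, it swaps in SQLite through Python's standard `sqlite3` module; SQLite writes the trigger body inline instead of in a separate function, but the archive-on-delete idea is the same. The `users` and `users_archive` tables are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL);
CREATE TABLE users_archive (
    id INTEGER,
    email TEXT,
    deleted_at TEXT DEFAULT CURRENT_TIMESTAMP
);
-- Fires once per deleted row; OLD refers to the row being removed.
CREATE TRIGGER archive_deleted_user
AFTER DELETE ON users
FOR EACH ROW
BEGIN
    INSERT INTO users_archive (id, email) VALUES (OLD.id, OLD.email);
END;
""")

conn.executemany(
    "INSERT INTO users (id, email) VALUES (?, ?)",
    [(1, "a@example.com"), (2, "b@example.com")],
)
conn.execute("DELETE FROM users WHERE id = 1")
conn.commit()

remaining = conn.execute("SELECT id, email FROM users").fetchall()
archived = conn.execute("SELECT id, email FROM users_archive").fetchall()
print(remaining)  # [(2, 'b@example.com')]
print(archived)   # [(1, 'a@example.com')]
```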

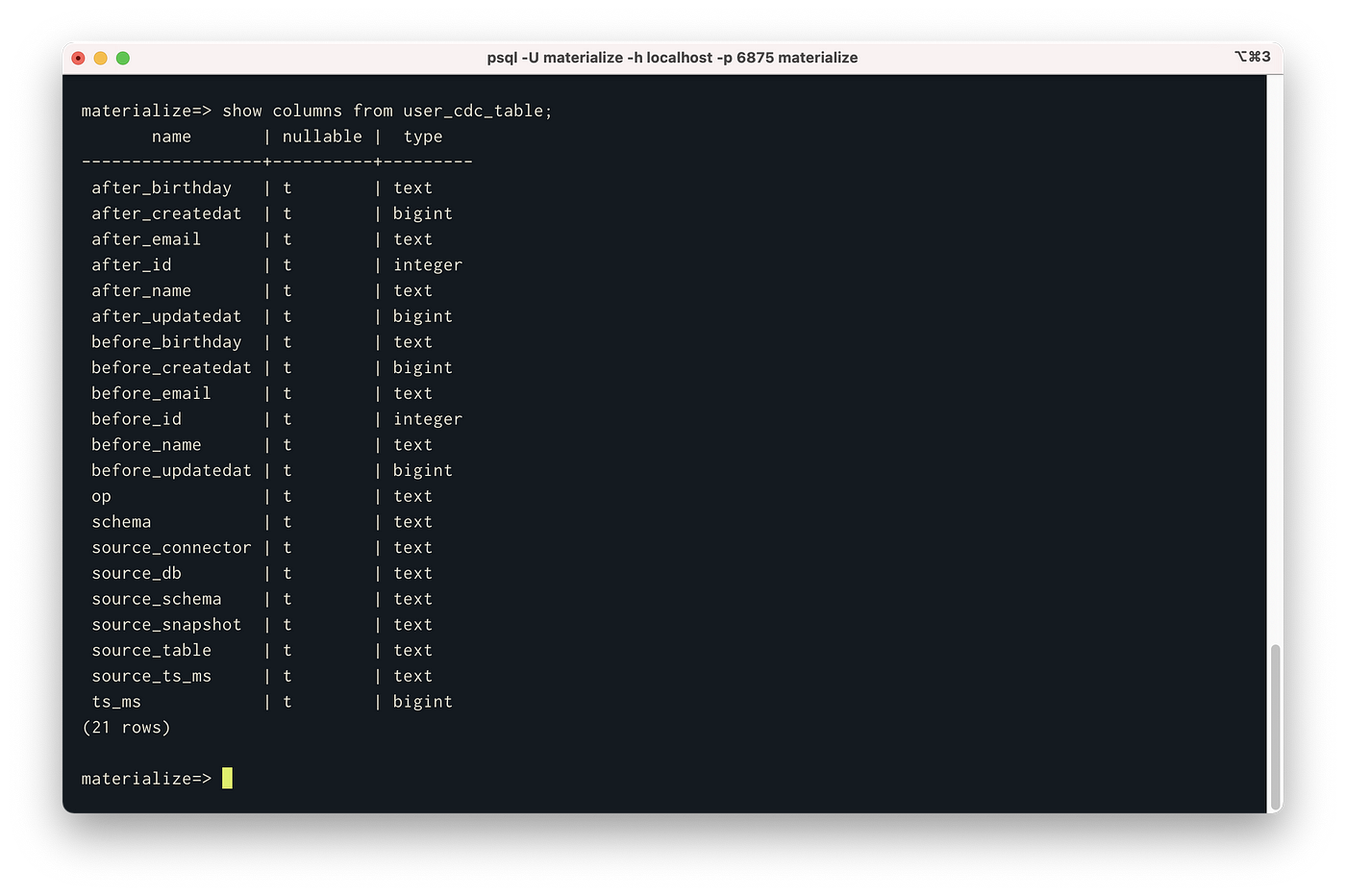

Analyzing the changes that occur in PostgreSQL gives you insight into the current state of your data and lets you dig into your database's change history.

“Data is the new oil.” If data is the new oil, we wanted to power the refinery: the Merox process, but for data.