Behind every streaming application is a combination of data and events. With the rising popularity and complexity of event-driven and streaming architectures, data applications offer developers a powerful solution. Data applications are centered around real-time or near real-time events, which is key for many modern data processing workloads. Today, we are taking an important step toward helping customers build data applications by adding support for Apache Kafka as a resource on Meroxa.

Apache Kafka is an open-source streaming platform maintained by the Apache Software Foundation. Since its creation in 2011, Kafka has evolved from a messaging queue into a robust event streaming platform. Confluent Cloud is a fully managed, cloud-native Kafka service for connecting and processing all of your data, everywhere it’s needed. Confluent was founded by the original Kafka developers, who ran the service at massive scale while at LinkedIn. Apache Kafka and Confluent Cloud can now be added as resources on the Meroxa Platform in just a few steps. Support for producing to and consuming from Apache Kafka Topics and Streams is only the beginning as we continue to make data apps easier for developers to build.

Getting Started

Kafka is a distributed system consisting of servers and clients that communicate via a high-performance TCP network protocol. In Kafka, a Topic is a named category used to store and publish records, similar to a table in a database. The server that hosts the topics is called a Broker, and a Cluster typically consists of multiple brokers working together to provide scale and reliability. A bootstrap server is the host and port pair that represents the address of a broker; clients use it to make their initial connection to the cluster.
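To make the "host and port pair" idea concrete, here is a minimal Python sketch that splits a comma-separated bootstrap server string into pairs. The function name `parse_bootstrap_servers` and the example hostnames are our own illustration, not part of Kafka or Meroxa, and the sketch does not handle IPv6 literals.

```python
from typing import List, Tuple


def parse_bootstrap_servers(value: str) -> List[Tuple[str, int]]:
    """Split a comma-separated bootstrap server string into (host, port) pairs."""
    servers = []
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue  # tolerate trailing commas and stray whitespace
        # Split on the last colon so the port is always the final component.
        host, sep, port = entry.rpartition(":")
        if not sep or not host or not port.isdigit():
            raise ValueError(f"expected host:port, got {entry!r}")
        servers.append((host, int(port)))
    return servers


if __name__ == "__main__":
    # Hypothetical broker addresses, for illustration only.
    print(parse_bootstrap_servers("broker1.example.com:9092, broker2.example.com:9092"))
```

A check like this can catch a missing port before the value is pasted into a connection URL.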

In the following examples, we will walk you through the steps necessary to add Apache Kafka as a resource on the Meroxa Platform.

Apache Kafka

To connect to Apache Kafka, you need an Apache Kafka server. Refer to the Apache Kafka quickstart (https://kafka.apache.org/quickstart) to create one.

Prerequisites

- Bootstrap server information, available in the server.properties file

- Username and password, available in the KafkaServer section in the JAAS file

- The Certificate Authority (CA) file, the client certificate, and the client key, if Secure Sockets Layer (SSL) encryption is used

With the information above, you can add Apache Kafka as a resource through the CLI or Dashboard.

Meroxa CLI

In the CLI, use the meroxa resource create command to configure your Apache Kafka resource.

The following example depicts how this command is used to create an Apache Kafka resource named apachekafka with the minimum configuration required.

    $ meroxa resource create apachekafka \
        --type kafka \
        --url "kafka+sasl+ssl://<USERNAME>:<PASSWORD>@<BOOTSTRAP_SERVER>?sasl_mechanism=plain"

In the example above, replace the following placeholders with valid credentials from your Apache Kafka environment:

<USERNAME> - Apache Kafka username

<PASSWORD> - Apache Kafka password

<BOOTSTRAP_SERVER> - Host and port of the Kafka broker

For additional configuration options and information on how to add an Apache Kafka resource, check out the Apache Kafka Resource documentation.

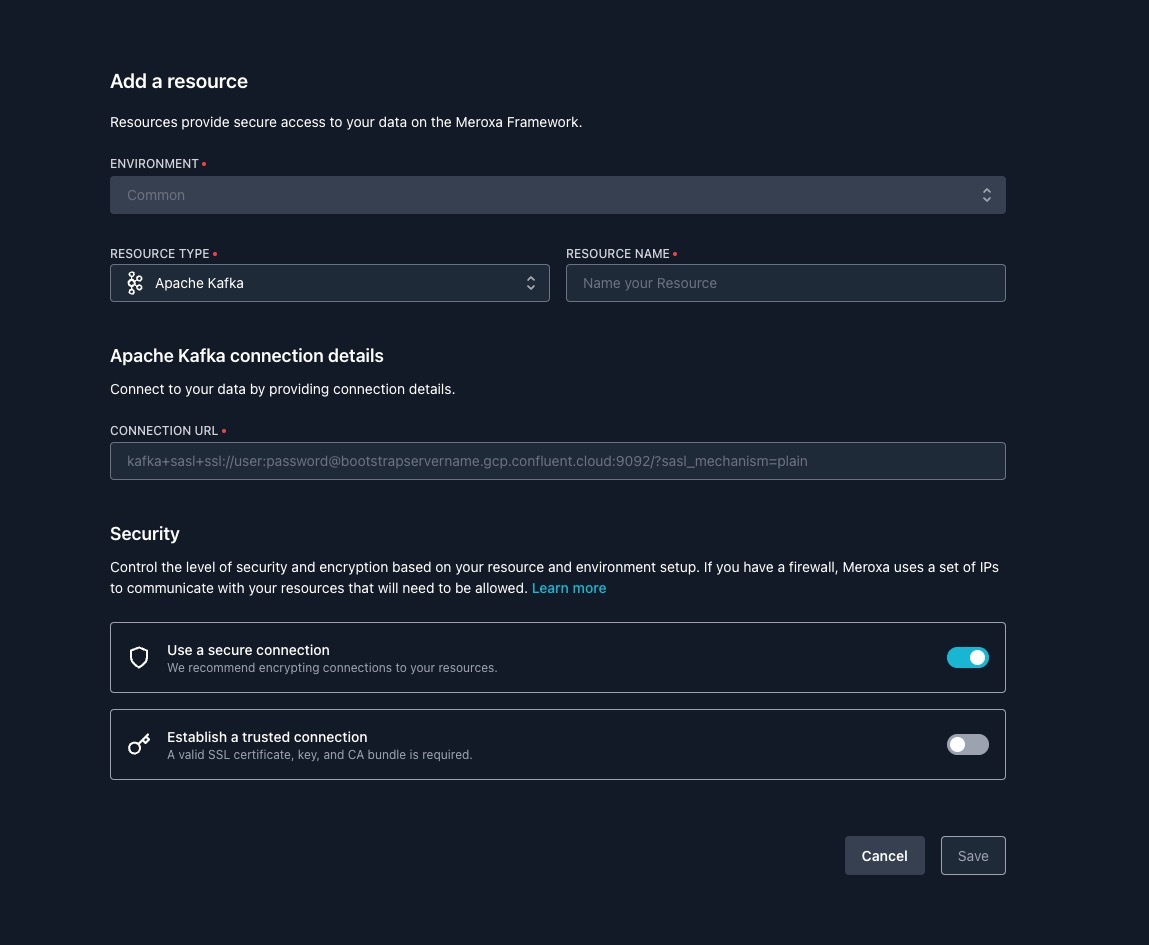

Meroxa Dashboard

Combine the username, password, and bootstrap server information to construct a Connection URL in the following format:

    kafka+sasl+ssl://<USERNAME>:<PASSWORD>@<BOOTSTRAP_SERVER>?sasl_mechanism=plain

If you’re using Secure Sockets Layer (SSL) encryption, toggle Establish a trusted connection and provide the Certificate Authority (CA) file, the client certificate, and the client key.
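If you assemble the Connection URL in a script, a small helper like the following sketch can do the combining. It percent-encodes the credentials so that characters such as "@", ":" or "/" are not mistaken for URL delimiters. Note the assumptions: the function name `build_connection_url` is our own, and we assume that percent-encoded credentials are decoded on the receiving side, as is conventional for URLs; check the resource documentation if your password contains reserved characters.

```python
from urllib.parse import quote


def build_connection_url(username: str, password: str, bootstrap_server: str) -> str:
    """Combine credentials and a host:port pair into a Kafka connection URL."""
    # safe="" forces every reserved character, including "/", to be encoded.
    user = quote(username, safe="")
    secret = quote(password, safe="")
    return f"kafka+sasl+ssl://{user}:{secret}@{bootstrap_server}?sasl_mechanism=plain"


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    print(build_connection_url("alice", "p@ss/w:rd", "broker.example.com:9092"))
```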

Confluent Cloud

To connect to Confluent Cloud, you need a Kafka cluster. Refer to Confluent’s quickstart guide to create one.

Prerequisites:

- API key (follow the [guide](https://docs.confluent.io/cloud/current/get-started/cloud-basics.html#create-keys-for-a-cluster) to set up your API keys)

- API secret (shown together with the API key)

- Bootstrap server (refer to your [Cluster settings](https://docs.confluent.io/cloud/current/get-started/cloud-basics.html#view-cluster-details) to retrieve it)

With the information above, you can add Confluent Cloud as a resource through the CLI or Dashboard.
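Before adding the resource, it can be handy to confirm that the three values work together. This is not part of the Meroxa workflow. As a rough sketch, the function below shows how the values would typically map onto the settings of a librdkafka-based client such as the confluent-kafka Python package. The function name `confluent_client_config` is our own, and the sketch only builds the settings dictionary without opening a connection.

```python
from typing import Dict


def confluent_client_config(api_key: str, api_secret: str, bootstrap_server: str) -> Dict[str, str]:
    """Map the Confluent Cloud prerequisites onto librdkafka-style client settings."""
    return {
        "bootstrap.servers": bootstrap_server,  # host:port of the cluster
        "security.protocol": "SASL_SSL",        # SASL authentication over TLS
        "sasl.mechanisms": "PLAIN",             # username/password mechanism
        "sasl.username": api_key,               # the API key acts as the username
        "sasl.password": api_secret,            # the API secret acts as the password
    }


if __name__ == "__main__":
    # Placeholder values; substitute your own before use.
    print(confluent_client_config("MY_KEY", "MY_SECRET", "broker.example.com:9092"))
```

A dictionary like this could then be passed to a Kafka client of your choice to verify the credentials independently.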

CLI

Use the meroxa resource create command to configure your Confluent Cloud resource.

The following example depicts how this command is used to create a Confluent Cloud resource named confluentcloud with the minimum configuration required.

    $ meroxa resource create confluentcloud \
        --type confluentcloud \
        --url "kafka+sasl+ssl://<API_KEY>:<API_SECRET>@<BOOTSTRAP_SERVER>?sasl_mechanism=plain"

In the example above, replace the following placeholders with valid credentials from your Confluent Cloud Console:

<API_KEY> - Cluster API key

<API_SECRET> - Cluster API secret

<BOOTSTRAP_SERVER> - Host and port of the cluster

For additional information on how to add a Confluent Cloud resource, check out the Confluent Cloud Resource documentation.
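A common slip is to leave a placeholder unreplaced or to drop the port from the bootstrap server. The following sketch checks a connection URL against the format shown in this post, using only Python's standard library. The function name `check_connection_url` and the specific checks are our own assumptions about what a well-formed URL looks like here; they are not validation rules published by Meroxa.

```python
from typing import List
from urllib.parse import parse_qs, urlsplit


def check_connection_url(url: str) -> List[str]:
    """Return a list of problems found in a Kafka connection URL (empty if none)."""
    problems = []
    parts = urlsplit(url)
    if parts.scheme != "kafka+sasl+ssl":
        problems.append("scheme should be kafka+sasl+ssl")
    if not parts.username or not parts.password:
        problems.append("both a key/username and a secret/password are required")
    try:
        port = parts.port  # raises ValueError if the port is not a valid number
    except ValueError:
        port = None
    if not parts.hostname or port is None:
        problems.append("bootstrap server should be given as host:port")
    mechanisms = [m.lower() for m in parse_qs(parts.query).get("sasl_mechanism", [])]
    if mechanisms != ["plain"]:
        problems.append("sasl_mechanism=plain is expected in the query string")
    if "<" in url or ">" in url:
        problems.append("a placeholder has not been replaced")
    return problems


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    print(check_connection_url("kafka+sasl+ssl://KEY:SECRET@broker.example.com:9092?sasl_mechanism=plain"))
```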

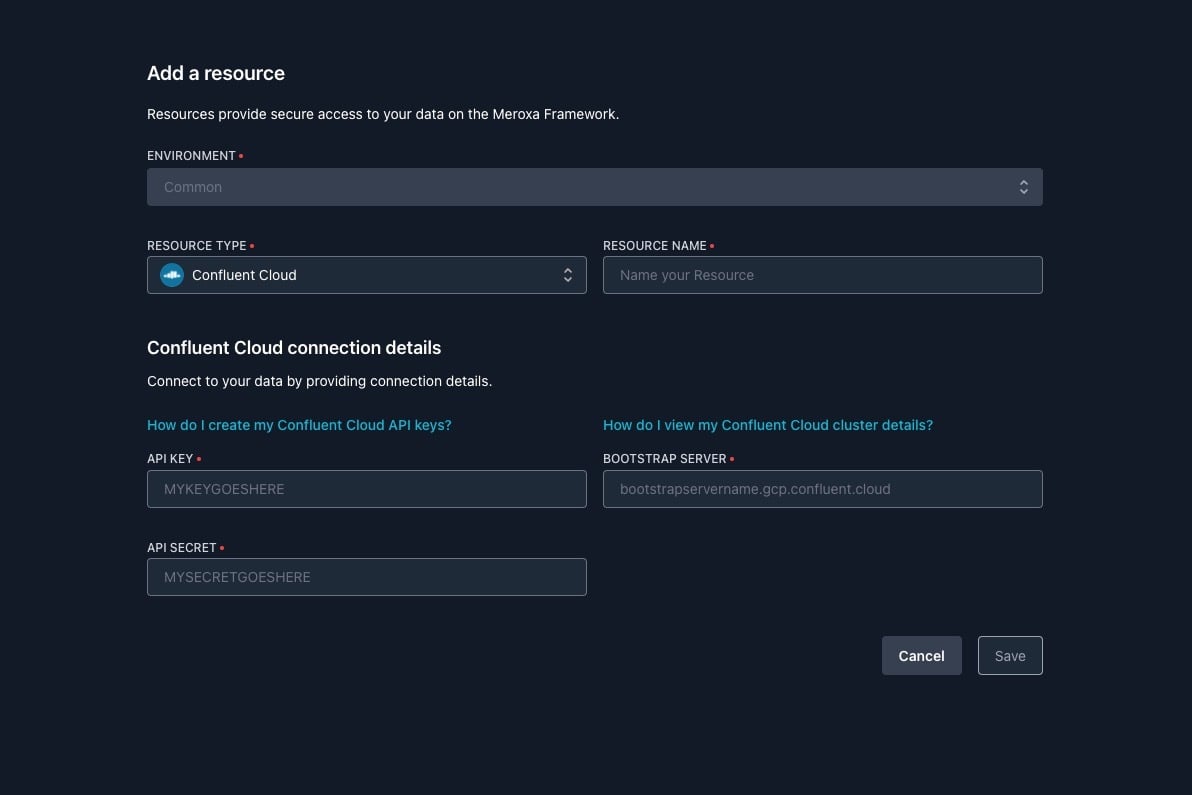

Dashboard

Input the API Key, API Secret, and Bootstrap Server information into the corresponding fields to add a Confluent Cloud Kafka resource.

Things to know

- With Kafka, you can pick a data format of your choice. It’s important to use the same format consistently when Kafka sits upstream of other resources. Currently, Meroxa only supports JSON.

- Meroxa uses SASL/PLAIN to authenticate with Kafka. SASL/PLAIN is a simple username/password authentication mechanism that is typically used with TLS for encryption to implement secure authentication.
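Both notes can be illustrated with a short Python sketch. The first function serializes a record value as UTF-8 JSON bytes, the kind of payload a producer would write to a topic. The second builds the SASL/PLAIN message as defined in RFC 4616 (authorization identity, NUL, username, NUL, password), which shows that the password is only concatenated, not encrypted, and why TLS matters. Kafka clients do this internally. The function names and sample values are our own, for illustration only.

```python
import json


def encode_record(value: dict) -> bytes:
    """Serialize a record value as compact UTF-8 JSON bytes."""
    return json.dumps(value, separators=(",", ":")).encode("utf-8")


def sasl_plain_message(username: str, password: str, authzid: str = "") -> bytes:
    """Build the SASL/PLAIN message from RFC 4616: [authzid] NUL authcid NUL passwd."""
    return b"\x00".join(part.encode("utf-8") for part in (authzid, username, password))


if __name__ == "__main__":
    print(encode_record({"id": 1, "name": "a"}))
    # The password is readable in the raw message, so it should only travel over TLS.
    print(sasl_plain_message("alice", "secret"))
```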

Have questions or feedback?

If you have questions or feedback, reach out directly by joining our community or by writing to support@meroxa.com.

We can’t wait to see what you build! 🚀