As the CEO of Meroxa, I've had a front-row seat to the AI revolution sweeping through enterprise technology. Companies that only just came to grips with having to become data companies are now scrambling to leverage AI to optimize huge parts of their business. While large language models (LLMs) like GPT-4, Claude, Llama, and Gemini have captured the public imagination, I'm increasingly convinced that the future of practical AI applications lies in a different direction: tiny, specialized language models powered by real-time data streams.

The Hidden Costs of Large Language Models

Let's be frank: LLMs are impressive, but they come with significant drawbacks. Training these models requires massive computational resources, with costs running into millions of dollars. They consume enormous amounts of energy, making them environmentally questionable. And despite their size, they still struggle with hallucinations – those confident but incorrect responses that can wreak havoc in business applications.

But perhaps most importantly, LLMs are fundamentally disconnected from your business's current reality. They're trained on historical internet data, not your organization's live, operational data. This disconnect creates a critical gap between AI capabilities and business needs.

The Tiny Model Advantage

This is where tiny language models shine. By "tiny," I mean models that are:

- Trained on specific domains rather than attempting to know everything

- Updated continuously with real-time data streams

- Optimized for specific business tasks rather than general-purpose conversation

The advantages are compelling:

1. Reduced Hallucinations Through Real-Time Data

Tiny models trained on current, streaming data are less likely to hallucinate because they're working with fresh, relevant information. When your model is continuously updated with real-time data from your actual business operations, it doesn't need to "fill in the gaps" with potentially incorrect information.

2. Dramatic Cost Reduction

The economics are straightforward. Training a tiny model on a specific domain requires:

- Significantly less computational power

- Smaller training datasets

- Shorter training times

- Lower ongoing operational costs

We've seen organizations reduce their AI training costs by 90% or more by switching to domain-specific tiny models.

3. Improved Relevancy and Accuracy

When your model is focused on a specific domain and continuously updated with real-time data, it becomes remarkably accurate within its scope. Instead of being "okay" at everything, it becomes excellent at what matters to your business.

Real-World Applications

Consider a few scenarios where tiny models excel:

Customer Support: Instead of using a general-purpose LLM, deploy a tiny model trained specifically on your product documentation, support tickets, and real-time customer interactions. The model stays current with product updates and emerging issues.

Financial Services: Rather than relying on an LLM's outdated knowledge, use a tiny model that continuously learns from market data, transaction patterns, and regulatory updates.

Supply Chain Operations: Deploy models that understand your specific inventory, logistics, and supplier relationships, updated in real time as conditions change.

The Hybrid Approach

This isn't to say that LLMs don't have their place. A hybrid approach often works best:

- Use LLMs for broad, creative tasks where general knowledge is valuable

- Deploy tiny models for specific, business-critical operations where accuracy and freshness are paramount

- Leverage both in combination where appropriate
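To illustrate the split, here is a minimal sketch of a router that sends a query to a domain-specific tiny model when it recognizes the domain and falls back to a general-purpose LLM otherwise. Everything in it is an assumption made for illustration: the `DOMAIN_KEYWORDS` table, the `Route` type, and the keyword-overlap heuristic are hypothetical, and a production router would more likely use a trained classifier.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical keyword sets describing the domains the tiny models cover.
DOMAIN_KEYWORDS = {
    "support": {"refund", "invoice", "login", "password", "order"},
    "supply_chain": {"inventory", "shipment", "supplier", "warehouse"},
}


@dataclass
class Route:
    target: str  # "tiny:<domain>" or "llm"
    hits: int    # number of domain keywords found in the query


def route(query: str, min_hits: int = 1) -> Route:
    """Pick the domain with the most keyword matches, else fall back to the LLM."""
    words = set(re.findall(r"[a-z_]+", query.lower()))
    best_domain: Optional[str] = None
    best_hits = 0
    for domain, keywords in DOMAIN_KEYWORDS.items():
        hits = len(words & keywords)
        if hits > best_hits:
            best_domain, best_hits = domain, hits
    if best_domain is not None and best_hits >= min_hits:
        return Route(f"tiny:{best_domain}", best_hits)
    return Route("llm", 0)
```

Raising `min_hits` makes the router more conservative: fewer queries reach the tiny models, and more fall through to the general-purpose model.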

The Critical Role of Data Streams

Here's where the rubber meets the road: tiny models are only as good as the data they're trained on. The key to success is having robust, reliable data streams that can:

- Capture real-time business events

- Clean and prepare data automatically

- Feed models continuously for training and updates

This is why at Meroxa, we've focused on building the infrastructure that makes this possible. Our platform enables organizations to create and manage the real-time data streams that power these next-generation AI systems.
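The three capabilities listed above (capture, clean, feed) can be sketched in a few lines of plain Python. This is not the Meroxa API; it is a generic illustration under stated assumptions: events are dictionaries with hypothetical `type` and `text` fields, and `update_model` stands in for whatever incremental training or indexing step your model uses.

```python
from typing import Callable, Dict, Iterable, Iterator, List, Optional

Event = Dict[str, str]


def clean(event: dict) -> Optional[Event]:
    """Drop events with no text; normalize whitespace and the event type."""
    text = str(event.get("text", "")).strip()
    if not text:
        return None
    return {
        "type": str(event.get("type", "unknown")).lower(),
        "text": " ".join(text.split()),
    }


def micro_batches(events: Iterable[dict], size: int) -> Iterator[List[Event]]:
    """Group cleaned events into fixed-size batches as they arrive."""
    batch: List[Event] = []
    for raw in events:
        cleaned = clean(raw)
        if cleaned is None:
            continue  # skip unusable events instead of failing the stream
        batch.append(cleaned)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch  # flush the final partial batch


def feed(events: Iterable[dict],
         update_model: Callable[[List[Event]], None],
         size: int = 2) -> int:
    """Pass each batch to the (hypothetical) model update step; return batch count."""
    count = 0
    for batch in micro_batches(events, size):
        update_model(batch)
        count += 1
    return count
```

Because `micro_batches` is a generator, `events` can be an unbounded stream: each batch reaches the model as soon as it fills, rather than after the whole stream has been read.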

Reference Architecture

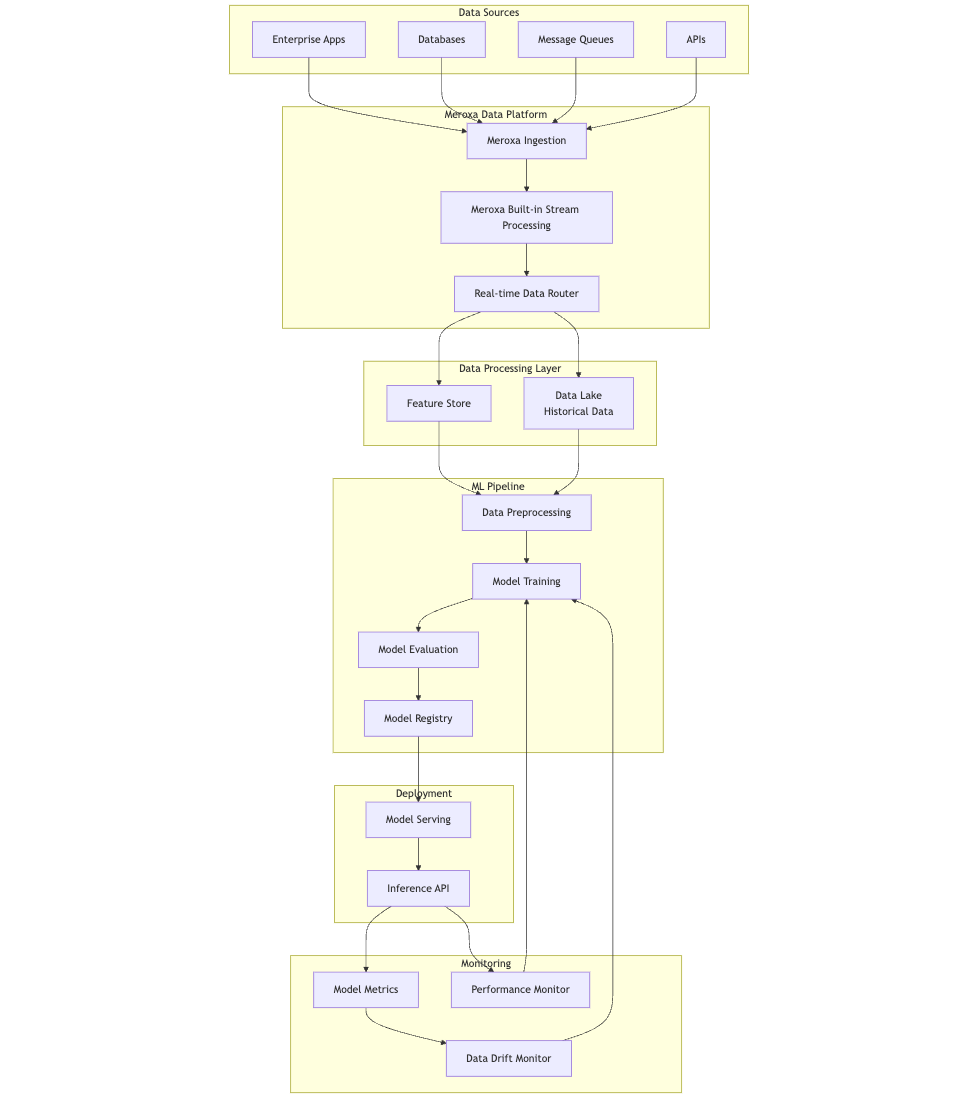

To make this concrete, let's look at a reference architecture for implementing tiny language models with real-time data streams:

This architecture shows how Meroxa serves as the foundation for real-time data processing that powers tiny language models. Let's break down the key components:

- Data Ingestion: Meroxa handles real-time data capture from various sources, ensuring no valuable information is lost.

- Stream Processing: Our Turbine engine processes and transforms data in real time, preparing it for model consumption.

- Data Storage: A multi-tiered approach combines historical data for training with hot data for real-time inference.

- ML Pipeline: Continuous training and evaluation ensure models stay current and accurate.

- Monitoring: Comprehensive monitoring helps detect data drift and trigger model updates when needed.

The beauty of this architecture is its ability to maintain model freshness while managing computational resources efficiently.
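As one way to picture the monitoring step, the sketch below compares the mix of event categories seen at training time with the mix in live traffic, using the Population Stability Index (PSI), and flags the model for retraining when the shift is large. The choice of PSI, the category labels, and the 0.2 threshold (a common rule of thumb, not a fixed standard) are all assumptions for illustration; the architecture above does not prescribe a particular drift metric.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence


def distribution(labels: Sequence[str], categories: List[str],
                 eps: float = 1e-6) -> Dict[str, float]:
    """Smoothed share of each category, so empty categories never yield log(0)."""
    counts = Counter(labels)
    total = sum(counts[c] for c in categories) + eps * len(categories)
    return {c: (counts[c] + eps) / total for c in categories}


def psi(reference: Sequence[str], live: Sequence[str]) -> float:
    """Population Stability Index between two samples of category labels."""
    categories = sorted(set(reference) | set(live))
    ref = distribution(reference, categories)
    cur = distribution(live, categories)
    return sum((cur[c] - ref[c]) * math.log(cur[c] / ref[c]) for c in categories)


def needs_retraining(reference: Sequence[str], live: Sequence[str],
                     threshold: float = 0.2) -> bool:
    """Flag a model update when drift exceeds the (assumed) threshold."""
    return psi(reference, live) > threshold
```

In practice the reference sample would come from the historical tier and the live sample from the hot tier, with the check run on a schedule or per batch.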

Getting Started

The path to implementing tiny models in your organization starts with your data infrastructure. Here are the steps to take:

- Identify the specific domains where AI could add value

- Map out your data sources and streams

- Set up real-time data pipelines (this is where Meroxa comes in)

- Start small with a focused model in one domain

- Measure results and iterate

The Path Forward

As AI continues to evolve, the winners won't be those with the biggest models, but those with the most relevant ones. The combination of tiny models and real-time data streams represents a more sustainable, efficient, and effective approach to enterprise AI.

Ready to explore how tiny models could transform your organization? Let's talk about how Meroxa can help you build the real-time data infrastructure that makes it possible.