In today's fast-paced business world, staying competitive and agile is more important than ever. Real-time analytics have become essential for companies looking to keep up with rapid changes and make informed decisions quickly. These analytics provide immediate insights into business operations, customer behavior, and market trends, enabling organizations to respond with speed and precision.

However, while there are many tools available for querying and analyzing data, getting that data into a format that’s easy to work with can still be a major hurdle. Many teams find themselves juggling multiple vendors to handle data ingestion, cleansing, augmentation, orchestration, streaming, and storage. This can be both costly and complicated.

In this post, we’ll guide you through how to simplify this process using Meroxa’s Conduit Platform. We’ll show you how to build real-time analytics dashboards by pulling data from Postgres, processing it with Clickhouse, and displaying the results in Grafana. This approach streamlines your data pipeline, making it easier and more efficient to gain the insights your business needs.

Why Are Teams Choosing Clickhouse for Analytics?

ClickHouse has established itself as a prominent database for analytical applications due to its technical advantages over competitors like Druid, Pinot, and StarRocks. Its columnar storage engine and vectorized query execution enable efficient data compression and parallel processing, resulting in superior query performance on large datasets. ClickHouse's architecture supports both batch and streaming data ingestion, offering flexibility that surpasses Druid and Pinot, which are optimized for specific workloads. The database's ACID compliance and support for materialized views further enhance its capabilities for real-time analytics.

Compared to Druid, ClickHouse offers a more comprehensive SQL support and a simpler architecture, reducing operational complexity. While Pinot excels in low-latency queries, ClickHouse provides better write throughput and more extensive analytical functions. StarRocks, though competitive, lacks the maturity and extensive ecosystem of ClickHouse. ClickHouse's ability to handle diverse data models, including nested structures, and its support for various index types (e.g., skip indexes, primary key indexes) contribute to its versatility.

Furthermore, ClickHouse's distributed architecture allows for horizontal scaling, enabling it to process petabytes of data across clusters. Its support for approximate query processing techniques, like reservoir sampling and HyperLogLog, facilitates efficient analytics on massive datasets. These technical features, combined with its active open-source community and growing ecosystem of tools, position ClickHouse as a robust choice for building scalable and high-performance analytical applications.

Step-by-Step Guide to Setting Up a PostgreSQL to ClickHouse Pipeline Using Meroxa Conduit

-

Download Conduit Binary: Follow the Conduit Quickstart to download and install the Conduit binary on your local machine.

-

Download & Install Connectors:

- PostgreSQL Connector: Conduit PostgreSQL Connector

- ClickHouse Connector: Conduit ClickHouse Connector

Refer to Installing Connectors for more information.

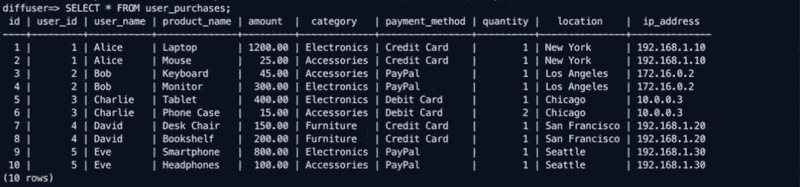

Example PostgreSQL table with user purchases data.

-

Provision Secrets for PostgreSQL and ClickHouse:

- PostgreSQL Connection String: postgres://

: @ : / - ClickHouse Connection String: https://

: @ : / ?secure=true

- PostgreSQL Connection String: postgres://

-

Set Up Your Conduit Pipeline YAML:

-

Example YAML Configuration:

version: 2.2 pipelines: - id: pg-to-ch status: running description: > This pipeline showcases real-time data streaming from Postgres to ClickHouse. connectors: # [CONNECTOR] SOURCE - id: pg-source type: source plugin: standalone:postgres settings: url: "postgres://yourusername:yourpassword@yourhost:5432/yourdatabase" tables: "user_purchases" # [CONNECTOR] DESTINATION - id: clickhouse-sink type: destination plugin: standalone:clickhouse settings: url: "https://yourusername:yourpassword@yourhost:8443/yourdatabase?secure=true" table: "user_purchases" keyColumns: "id"

-

Explanation of YAML Components:

• pipelines: Defines the pipeline configuration.

• id: Unique identifier for the pipeline.

• status: Defines the pipeline’s status (running/stopped).

• description: A brief description of the pipeline’s purpose.

• Connectors:

• Source Connector (pg-source):

• type: Specifies the connector type (source).

• plugin: The plugin type (PostgreSQL).

• settings: Contains the PostgreSQL connection settings.

• Destination Connector (clickhouse-sink):

• type: Specifies the connector type (destination).

• plugin: The plugin type (ClickHouse).

• settings: Contains the ClickHouse connection settings.

- Run the Pipeline:

- Navigate to the directory containing your YAML file. Simply run Conduit by using the following command:

./conduit

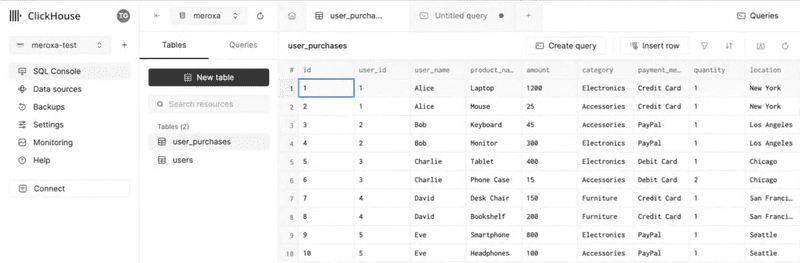

Example data being transferred from PostgreSQL into Clickhouse.

This will automatically execute any pipeline files located in the ./pipelines directory.

Access the Conduit UI at localhost:8080 to monitor and manage the pipeline.

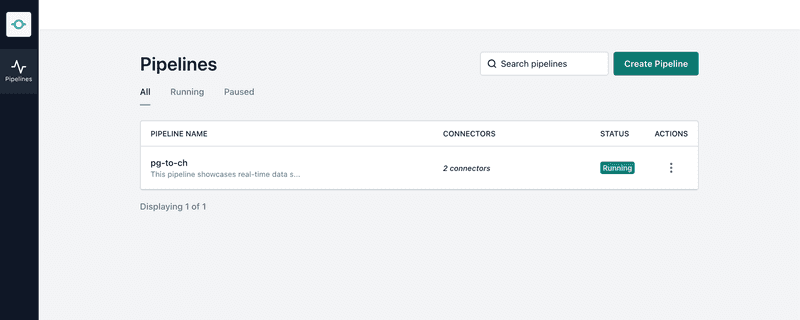

Conduit UI showcasing current pipelines running. You can have as many pipelines you want here.

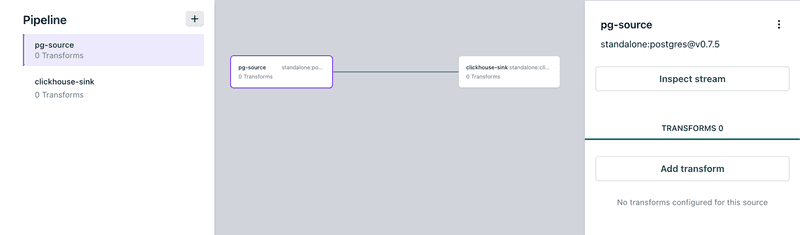

Example Conduit pipeline showcasing the connectors used and ability to inspect the data stream.

Use Cases for PostgreSQL to ClickHouse CDC

Financial Services: Real-time fraud detection and transaction monitoring become seamless with up-to-date data flowing from PostgreSQL to ClickHouse.

E-commerce: Enhance customer experience by providing real-time product recommendations and personalized marketing based on the latest data.

IoT Applications: Process and analyze massive streams of IoT data in real time, enabling predictive maintenance and operational efficiency.

Best Practices for Implementing CDC with Meroxa

- Plan Your Data Flow: Understand your data sources and destinations, and plan the flow of data to ensure optimal performance.

- Automate Data Transformations: Use Meroxa’s transformation features to automate data cleaning and preparation, ensuring high-quality data in ClickHouse.

- Monitor Continuously: Regularly monitor your CDC pipelines to identify and resolve any issues promptly, ensuring uninterrupted data flow.

Conclusion

Implementing PostgreSQL to ClickHouse CDC with Meroxa's Conduit Platform provides a powerful solution for real-time data integration and analytics. By leveraging Meroxa's robust platform, businesses can ensure data consistency, scalability, and ease of use, empowering them to make data-driven decisions with confidence. Stay tuned for part 2 where we show you how to stream data from MongoDB into ClickHouse.

Ready to transform your data integration process? Request a demo of Meroxa's Conduit Platform today and see how you can seamlessly integrate PostgreSQL with ClickHouse for real-time analytics and insights. Check out the full demo video