Modern AI applications demand real-time data to be truly effective. Whether you're building intelligent customer support systems, dynamic recommendation engines, or adaptive fraud detection models, the value of AI diminishes rapidly as data ages. In many cases, the difference between real-time and batch processing isn't just about speed—it's about relevance, accuracy, and competitive advantage.

Conduit 0.13.5 introduces a new built-in processor, the Ollama processor. It lets users enrich the data in their Conduit pipelines by sending a prompt to a specified large language model (LLM).
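As a rough illustration of what sending a prompt to a specified model involves, the sketch below builds the kind of request that Ollama's HTTP API accepts at `/api/generate`. It is not the processor's implementation or its configuration: the `enrichPrompt` helper, the model name, the local URL, and the sample record are all assumptions made for the example.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
)

// generateRequest mirrors the JSON body of Ollama's /api/generate endpoint.
type generateRequest struct {
	Model  string `json:"model"`
	Prompt string `json:"prompt"`
	Stream bool   `json:"stream"`
}

// enrichPrompt is a hypothetical helper that combines an instruction
// with a record payload into a single prompt string.
func enrichPrompt(instruction string, payload []byte) string {
	return instruction + "\n\nRecord:\n" + string(payload)
}

// newGenerateRequest builds the HTTP request without sending it.
func newGenerateRequest(baseURL, model, prompt string) (*http.Request, error) {
	body, err := json.Marshal(generateRequest{Model: model, Prompt: prompt, Stream: false})
	if err != nil {
		return nil, err
	}
	req, err := http.NewRequest(http.MethodPost, baseURL+"/api/generate", bytes.NewReader(body))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/json")
	return req, nil
}

func main() {
	// Assumed values: a local Ollama server and an example model name.
	prompt := enrichPrompt("Summarize this record in one sentence.", []byte(`{"id":1,"note":"late delivery"}`))
	req, err := newGenerateRequest("http://localhost:11434", "llama3.2", prompt)
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Method, req.URL.String())
}
```

Building the request separately from sending it keeps the example runnable without a model server; passing `req` to `http.DefaultClient.Do` would perform the actual call.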

Build a real-time incident response system using Kafka, Conduit, and CrewAI. Stream alerts, trigger AI agents, and post to Slack—all autonomously.

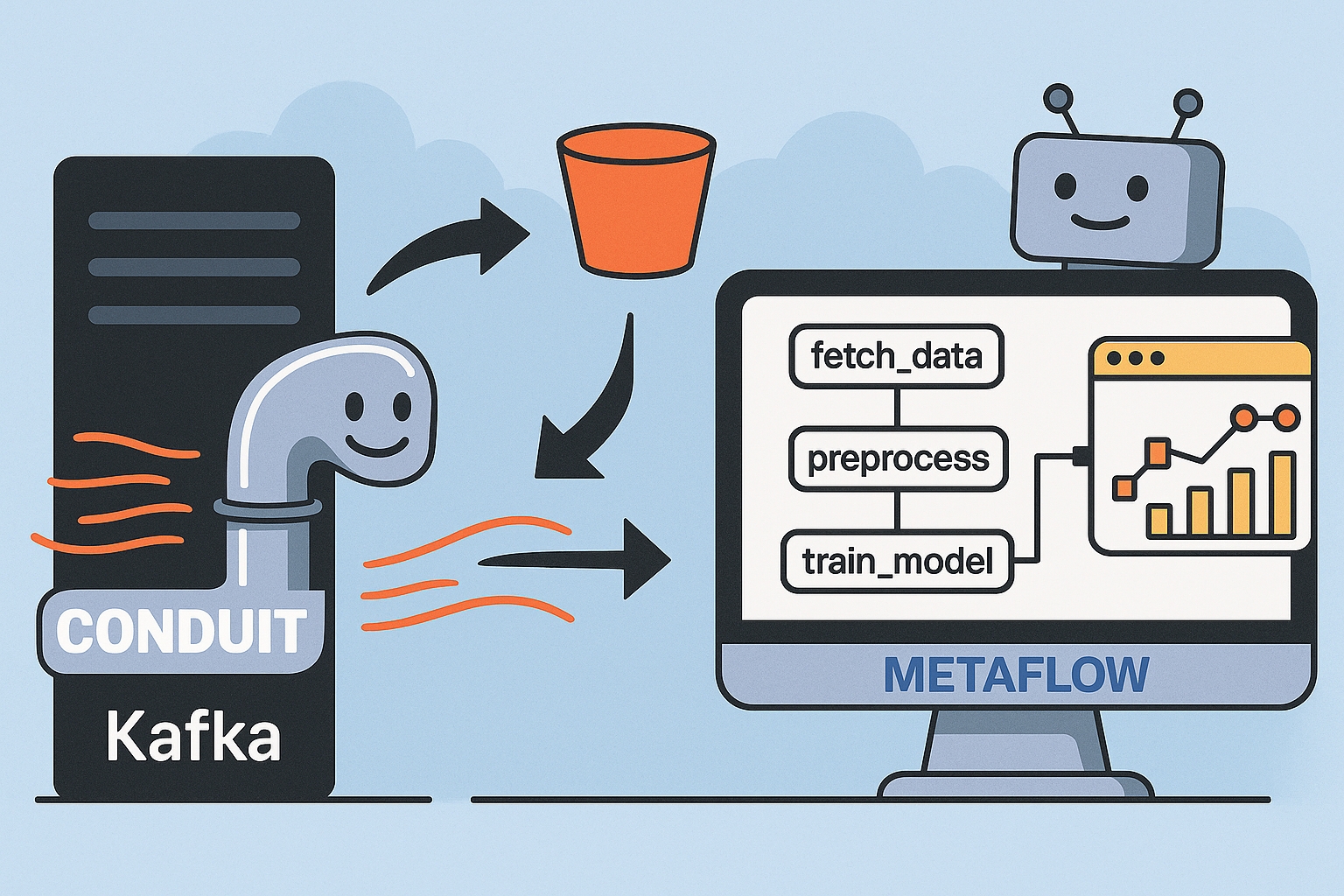

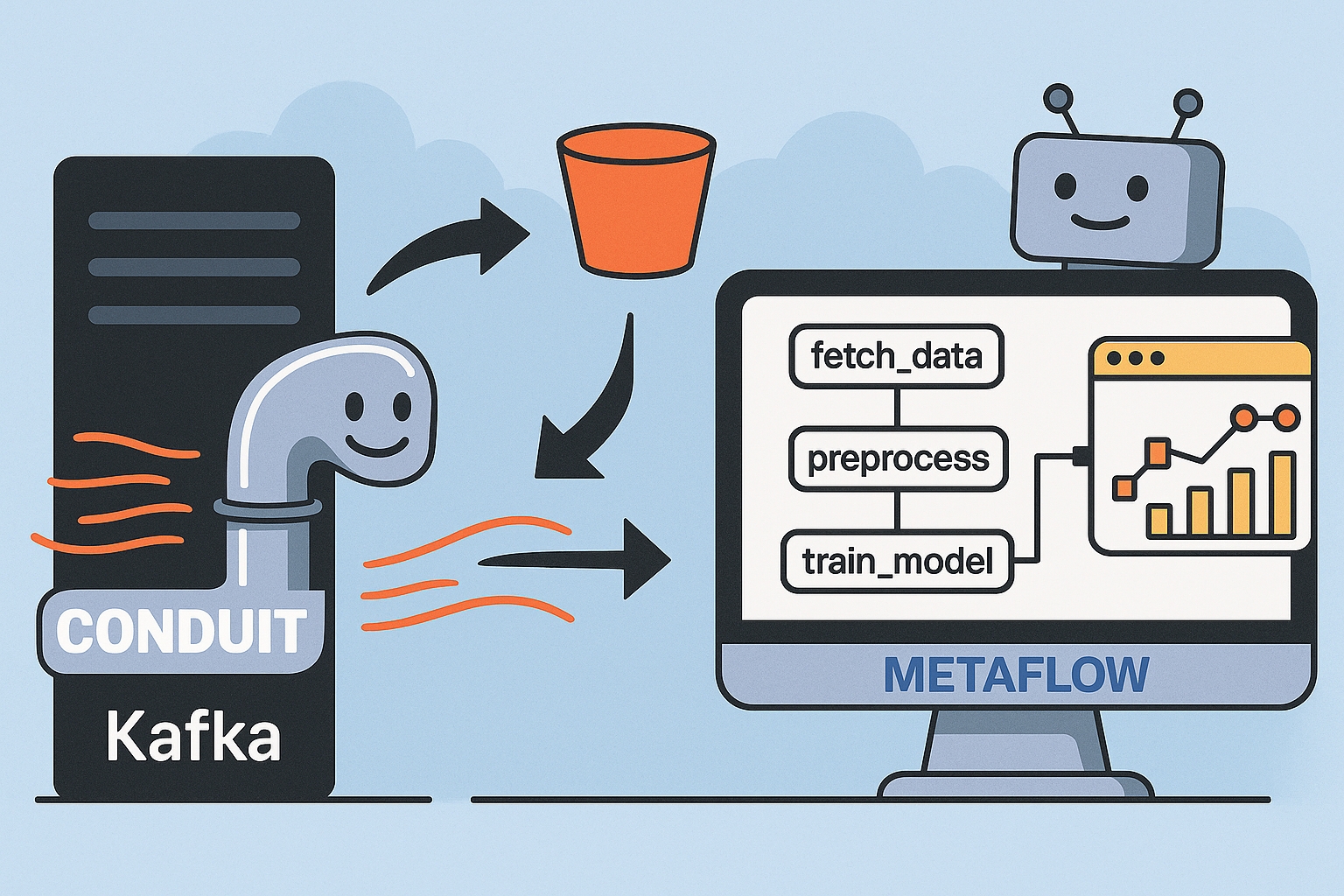

Learn how to integrate Conduit with Metaflow to build real-time machine learning pipelines. Stream data from Kafka to S3 and process it with Metaflow—all in one seamless workflow.

If you're building streaming pipelines and care about efficiency, scalability, and cost, Conduit isn't just an alternative to Kafka Connect; it's the better tool for the job.

Learn how Meroxa achieved a 73.8% cost reduction by replacing Kafka Connect with Conduit, their open-source data integration engine. Discover how they improved memory usage from 1.5GB to 100MB per connector, reduced startup times from 30-60s to ~1s, and cut monthly compute costs from $45K to $12K. This technical deep-dive explores real performance metrics, architectural benefits, and practical implementation details for teams looking to optimize their Kafka infrastructure costs.

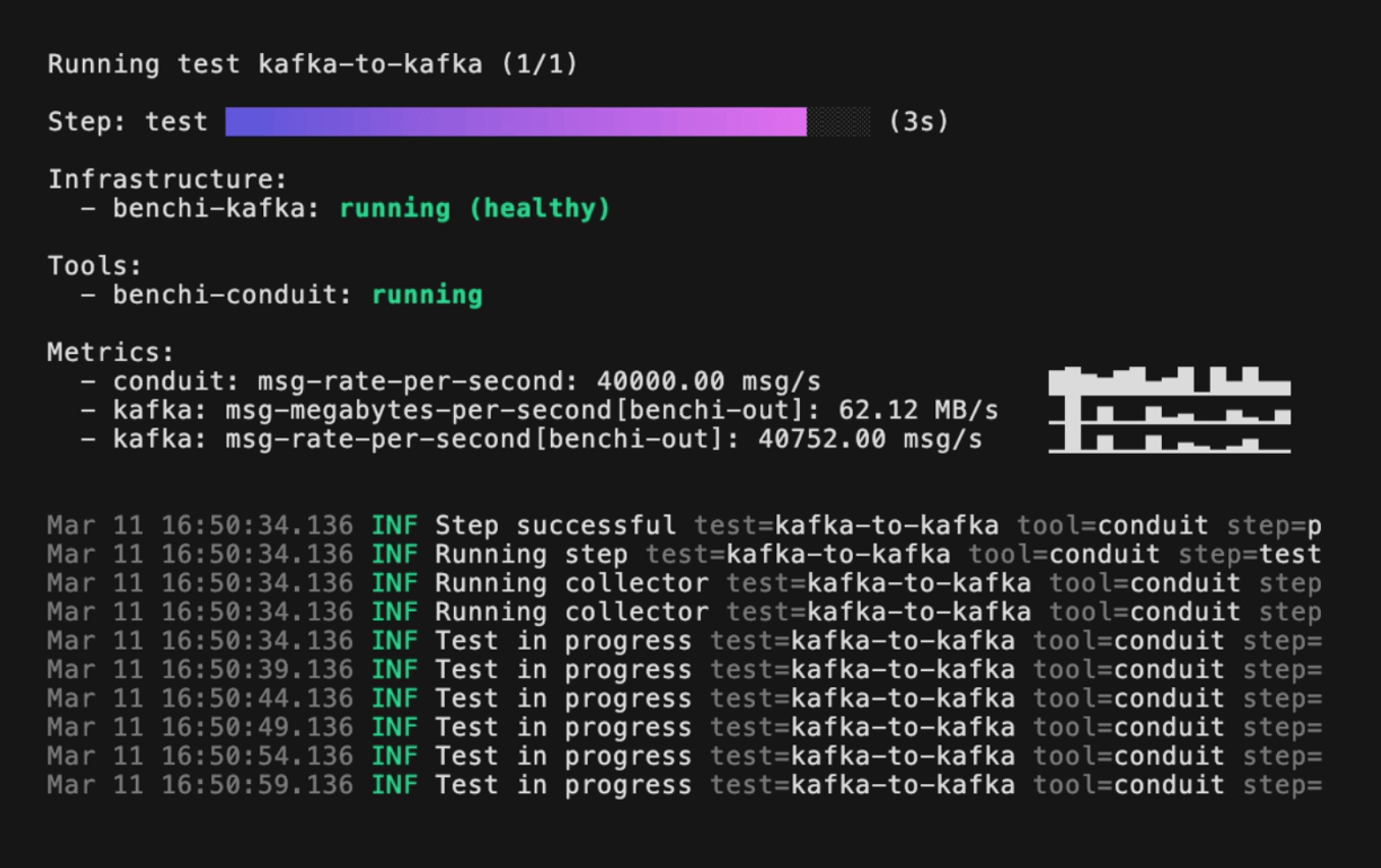

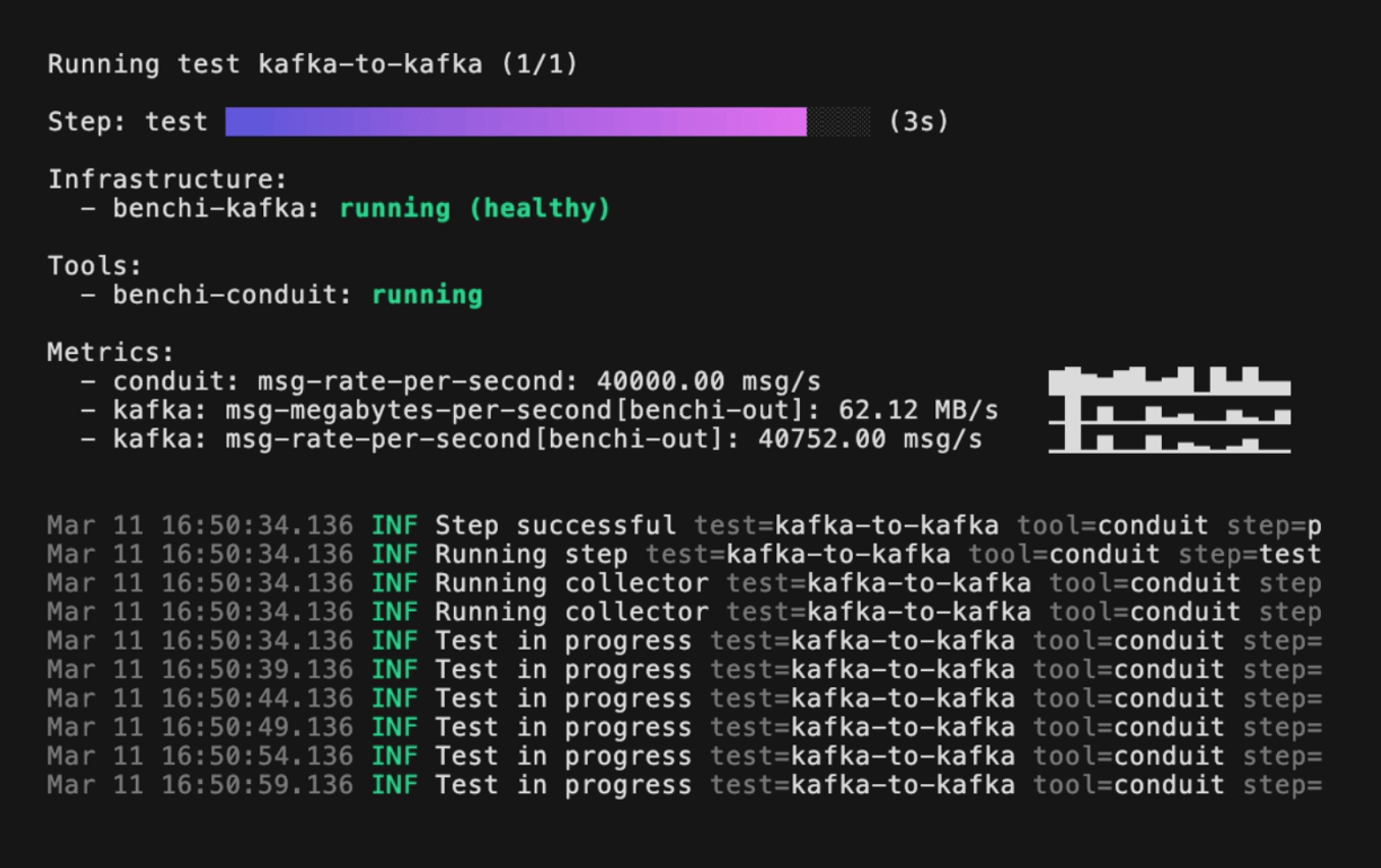

As we’re getting closer to the Conduit 1.0 release, we recently started conducting a series of benchmarks on our most popular connectors. We started with MongoDB and Kafka. In this post we'll talk about our performance findings for Postgres.

The story of how I built a Google Drive destination connector for Conduit from scratch—the things I learned, the hiccups I hit, and why it was totally worth it.

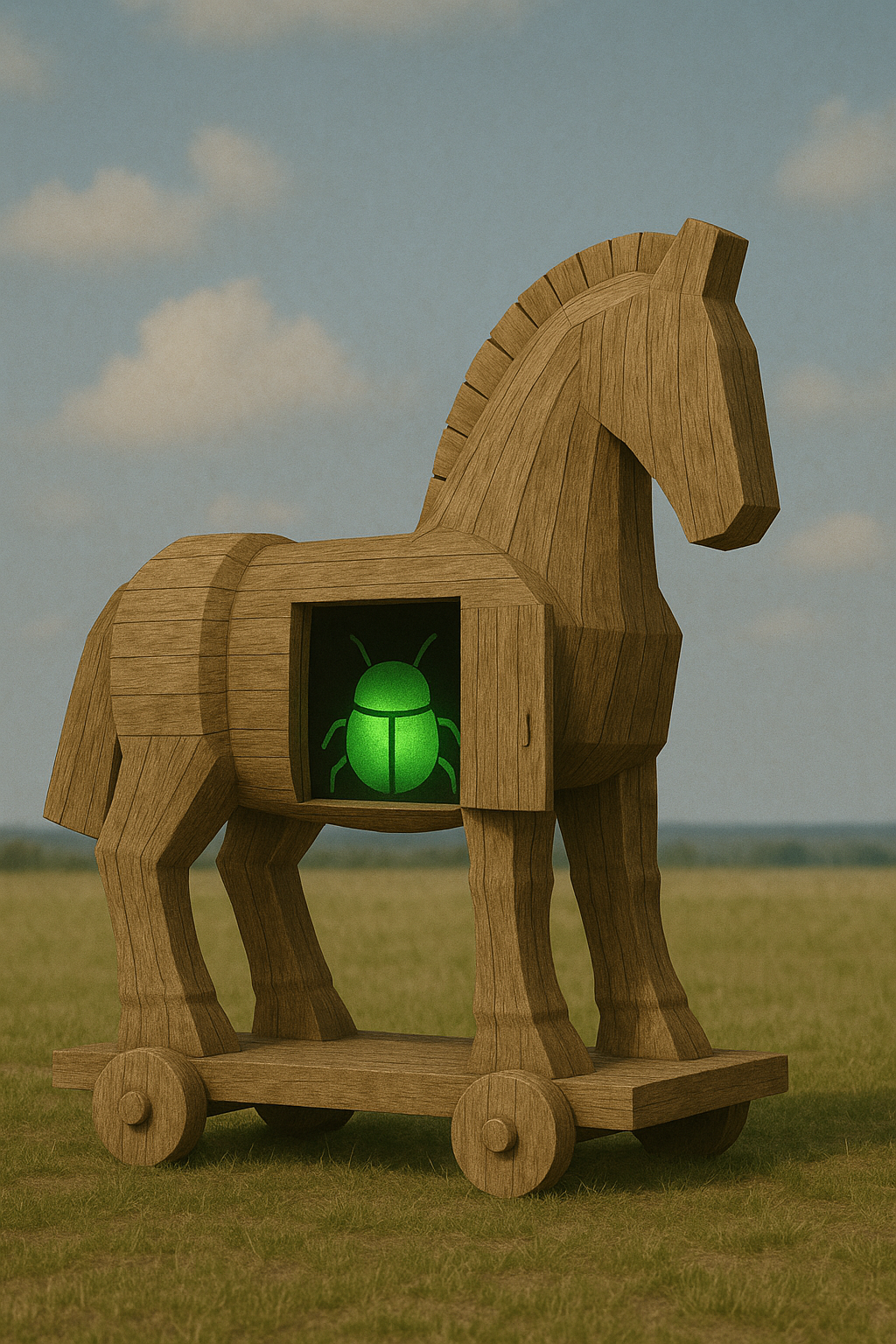

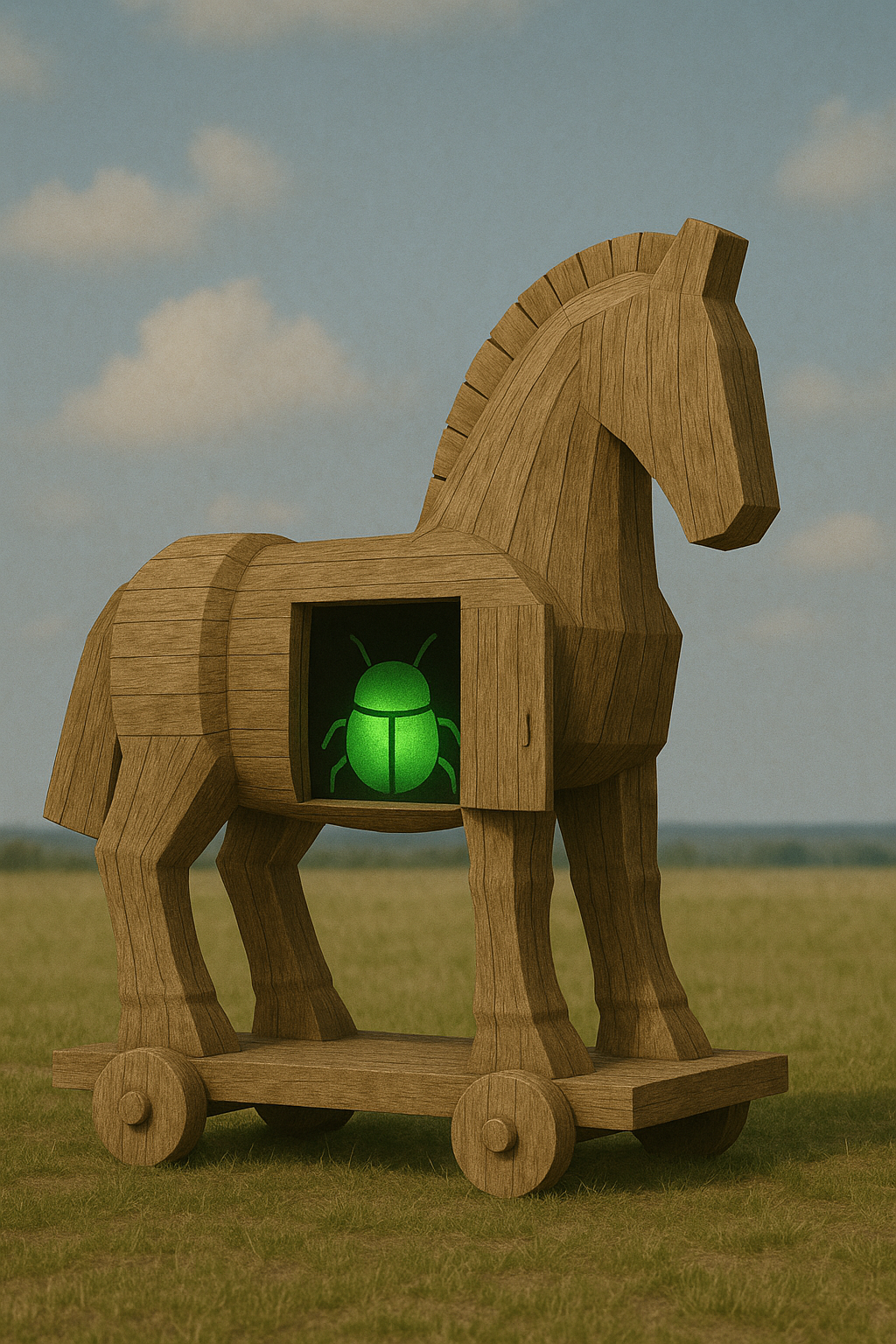

A third party created a Conduit connector containing malicious code. Here's how we found it and got it removed.

In head-to-head testing, Conduit performed 52% faster than Kafka Connect in streaming data from MongoDB.

Benchi is a minimal benchmarking framework designed to help you measure the performance of your applications and infrastructure. It leverages Docker to create isolated environments for running benchmarks and collecting metrics.

Discover how **Meroxa, Feast, and Databricks** power **real-time fraud detection** by integrating **streaming data, AI-driven feature stores, and scalable analytics**. This blog explores how businesses can **prevent fraud instantly**, leveraging **real-time data pipelines, machine learning models, and predictive analytics** to enhance security and compliance.

AI projects often fail due to data bottlenecks, infrastructure complexity, and governance challenges. This blog explores how Meroxa helps tech leaders overcome these issues with real-time data movement, automation, and security, ensuring scalable, high-performance AI deployments.

Switching from Java to Go? In this first part of the series, I share my journey transitioning from Java to Go, including key differences, challenges, and lessons learned. From implicit interfaces to error handling, multiple return values, and no function overloading—discover what makes Go unique and how to navigate the switch effectively. Whether you're considering Go or already making the move, this guide will help you adapt to Go’s simplicity and efficiency.
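To make a few of these differences concrete, here is a small sketch showing an implicitly satisfied interface, multiple return values, and explicit error handling. The `Greeter` interface, the `English` type, and the `parsePort` function are made up for illustration and do not come from the post.

```go
package main

import (
	"errors"
	"fmt"
	"strconv"
)

// Greeter is satisfied by any type with a Greet method;
// there is no "implements" keyword as in Java.
type Greeter interface {
	Greet() string
}

type English struct{ Name string }

// English satisfies Greeter implicitly by having this method.
func (e English) Greet() string { return "Hello, " + e.Name }

// parsePort returns a value and an error instead of throwing an exception.
func parsePort(s string) (int, error) {
	n, err := strconv.Atoi(s)
	if err != nil {
		return 0, fmt.Errorf("parse port %q: %w", s, err)
	}
	if n < 1 || n > 65535 {
		return 0, errors.New("port out of range")
	}
	return n, nil
}

func main() {
	var g Greeter = English{Name: "gopher"}
	fmt.Println(g.Greet())

	// Errors are ordinary values that the caller checks explicitly.
	if _, err := parsePort("http"); err != nil {
		fmt.Println("error:", err)
	}
}
```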

This blog explores how real-time data pipelines can cut fraud losses by 60%, reduce compliance costs by 50%, and drive multi-million dollar savings. Learn how fintech CTOs can leverage modern data architectures for sub-millisecond transaction processing, AI-driven risk management, and scalable infrastructure to future-proof their financial operations.

This blog explores how low-latency inference, hardware acceleration, and agile real-time data pipelines drive faster, smarter decision-making. Learn how Meroxa empowers organizations with seamless data ingestion, real-time analytics, and scalable infrastructure to bridge the gap between edge computing and actionable insights—unlocking the full potential of AI-driven innovation.

This blog explores how enterprises can leverage LLMs to extract instant value from continuous data streams and how conversational analytics is reshaping business intelligence. From financial services to retail, AI-powered data workflows are driving faster, smarter decisions—unlocking efficiency, scalability, and a strategic edge in a data-driven world.

As we mark the 3-year anniversary of Conduit Platform, we reflect on the incredible journey of innovation, scalability, and real-time data movement. Over the past three years, Conduit has transformed the way developers and data professionals build pipelines, enabling seamless data integration across diverse sources.

In this blog, we’ll look back at our biggest milestones, customer success stories, and the groundbreaking features that set Conduit apart. From powering real-time analytics to AI-driven data processing, Conduit has continued to push the boundaries of what’s possible in modern data infrastructure.

The latest Conduit 0.13 release brings significant upgrades, focusing on developer experience, automation, and performance optimization. Key highlights include automated documentation synchronization for connectors, a powerful new CLI for seamless pipeline and connector management, and 5x improvements in output processing speed, reducing latency and boosting efficiency. Notably, this version also deprecates the built-in UI, reinforcing Conduit’s commitment to a CLI-driven workflow. With expanded CLI capabilities and automated documentation tools, developers can now manage data pipelines more efficiently than ever. Upgrade today to leverage these new features and maximize your real-time data processing capabilities! 🚀

The Conduit 0.13 release introduces `connector.yaml`, a powerful automation tool that ensures connector documentation stays up to date with the latest configuration changes. By centralizing connector metadata—such as parameters, descriptions, and validation rules—developers can seamlessly sync documentation across repositories and the official Conduit docs. With the `conn-sdk-cli` tool, updating documentation is as simple as running a command, eliminating manual updates and reducing errors. This release enhances the developer experience, improves documentation consistency, and streamlines real-time data pipeline management. Start using `connector.yaml` today and automate your documentation workflow! 🚀

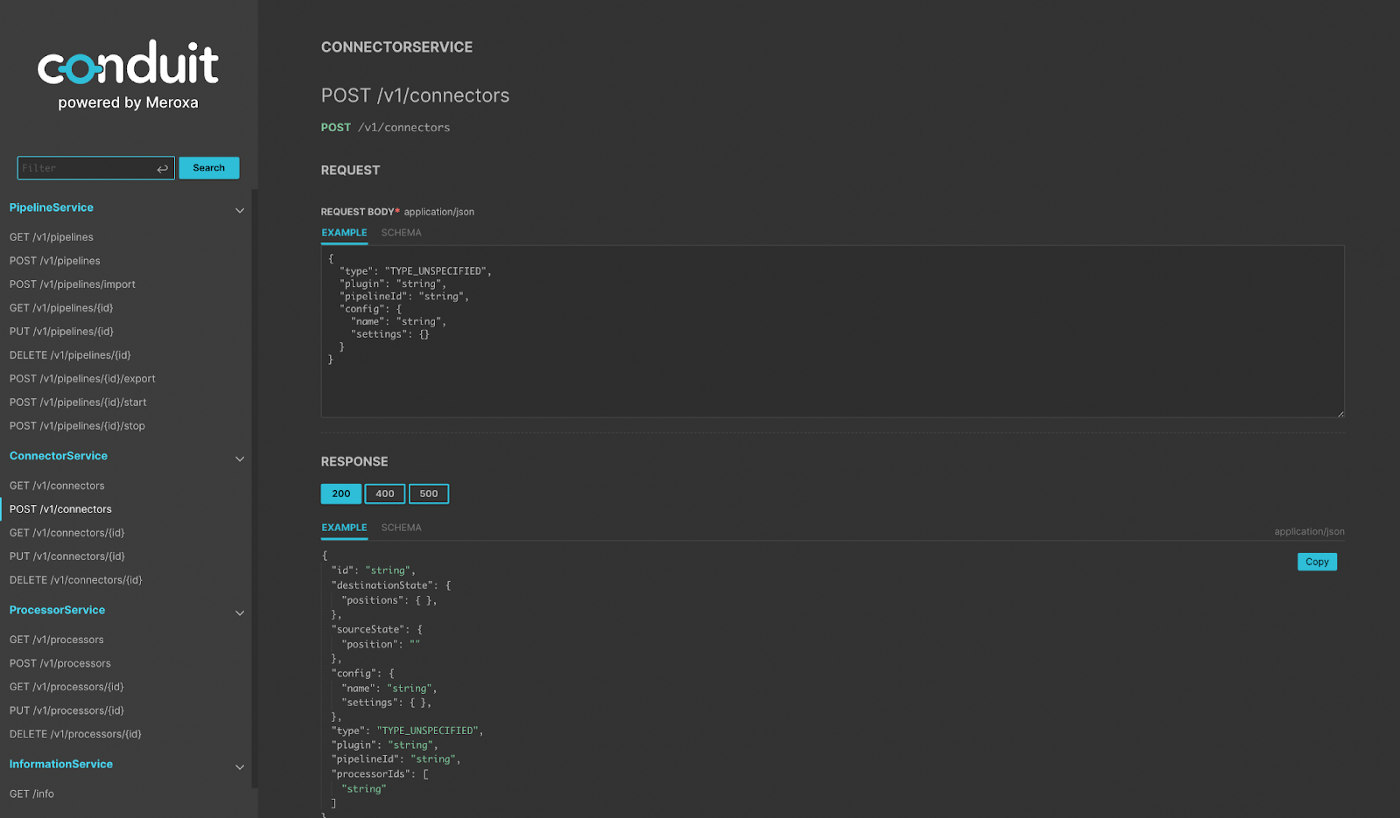

The Conduit 0.13 release introduces a powerful new CLI that simplifies real-time data pipeline management. With intuitive commands, developers can configure, monitor, and run pipelines directly from the terminal—eliminating complex API calls and manual configurations. Optimized for speed and efficiency, the CLI enhances deployment, troubleshooting, and high-throughput data streaming. Upgrade to Conduit 0.13 and streamline your data workflows today! Try it now with `$ conduit --help`. 🚀

At Meroxa, we’re empowering developers with Conduit OSS, a tool that simplifies real-time data engineering. With our latest release of new connectors, integrating with popular platforms is seamless, accelerating development and delivering real-time data insights. Here's a look at what's available now and what's coming next!

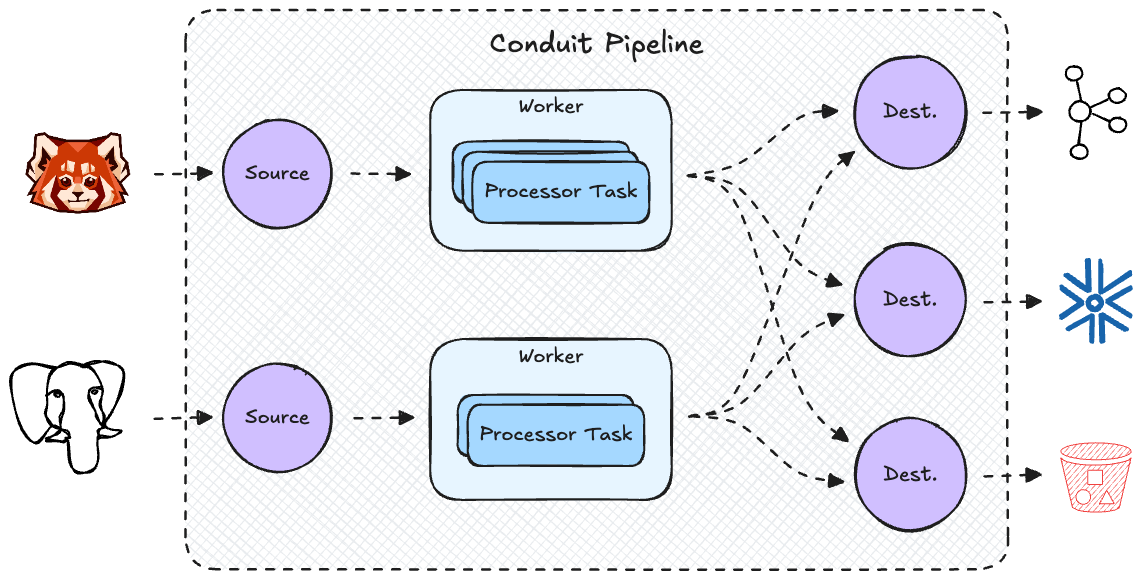

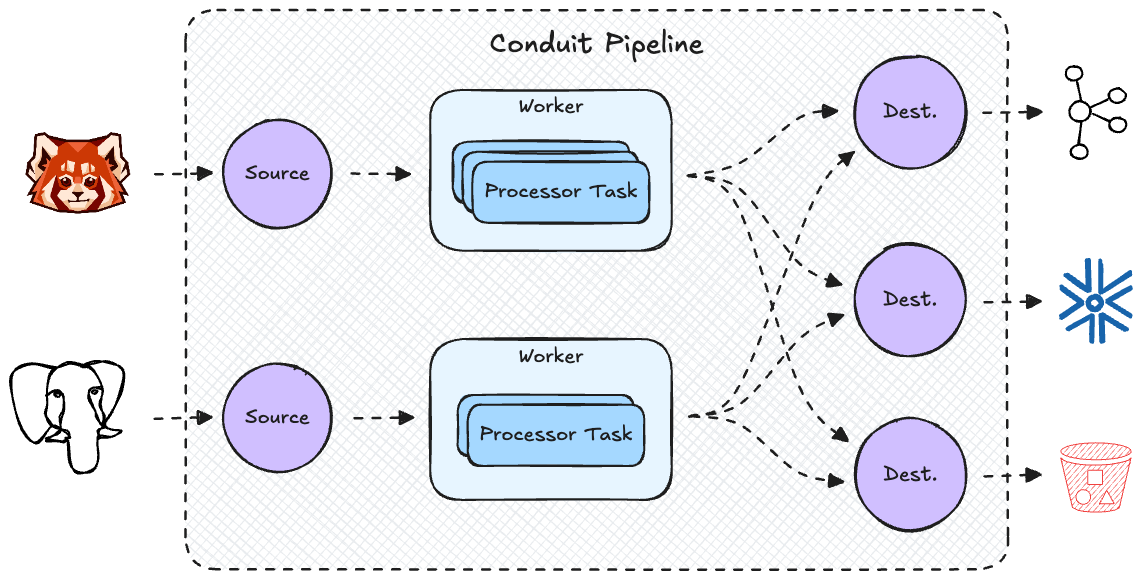

In this blog, we dive into how Conduit transitioned from a flexible but slower architecture to a high-performance streaming engine. We explore the limitations of the old DAG-based design, its impact on ordering guarantees and backpressure, and why switching to a Worker-Task Model drastically improved throughput and efficiency. With real-world performance benchmarks showing a 5x increase in message throughput, this blog is a must-read for developers and data engineers looking to optimize their data pipelines. Learn how Conduit is setting a new standard for real-time data movement and how you can leverage these improvements today! 🚀

Whether you’re building recommendation engines, fraud detection systems, or dynamic pricing models, real-time data powers smarter and faster decisions. For Databricks users, the platform already excels at advanced analytics and AI. Pair it with Meroxa’s seamless, cost-effective real-time data ingestion and transformation capabilities to unlock the full potential of real-time AI workflows. Together, Meroxa and Databricks simplify complex streaming architectures, enabling accurate insights and faster business outcomes without the usual headaches or costs.

Discover how CTOs can tackle fragmented data, scalability, compliance, and vendor lock-in challenges. This blog highlights how Meroxa’s Conduit Platform enables real-time data integration, optimized costs, and innovation-ready architectures to future-proof your enterprise.

This blog provides a comprehensive, beginner-friendly guide to building your first real-time data pipeline using Meroxa’s open-source Conduit platform. Designed to simplify real-time data integration, Conduit empowers developers to move data seamlessly between systems with minimal setup. The guide walks readers through the installation, initialization, pipeline configuration, and execution steps. Starting with a sample pipeline that streams generated data to a destination file, it showcases Conduit’s intuitive configuration process and highlights the flexibility to handle structured, scalable data in real time. By the end, readers will have a fully functioning pipeline ready to deliver actionable insights and the foundational knowledge to explore advanced configurations. Whether you're new to real-time data or looking for a reliable integration tool, this guide demonstrates how Conduit makes real-time pipelines accessible to everyone.

This blog highlights how integrating Meroxa for real-time data ingestion and Databricks for scalable processing transforms AI/ML workflows. This solution reduces data latency to under 30 seconds, accelerates model training from 48 to 6 hours, and boosts prediction accuracy by 23%. With automated pipelines and end-to-end integration, businesses save time, scale efficiently, and achieve tangible outcomes like improved customer engagement and increased revenue from real-time insights.

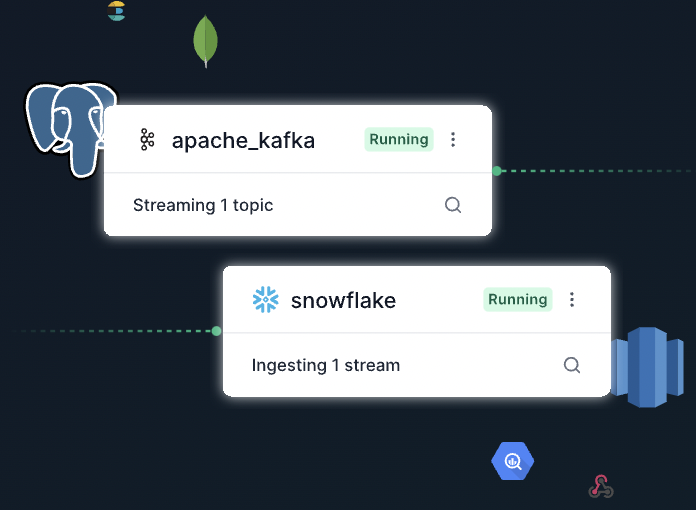

Discover how Meroxa revolutionized real-time data integration in 2024 with powerful new connectors, including Amazon DynamoDB, Snowflake, Apache Kafka, and more, enabling seamless data movement across diverse systems. This blog highlights key updates to the Conduit Platform, such as pipeline recovery features in Conduit v0.12.0 and schema registry support in Conduit Operator v0.0.2, designed to enhance resilience and simplify Kubernetes deployments. We also dive into a compelling case study featuring a global hotel network that leveraged Meroxa’s platform to integrate customer data, boosting guest satisfaction by 30% and increasing revenue per available room by 20%. Whether you're building robust data pipelines, enabling real-time analytics, or driving AI-powered transformations, explore how Meroxa’s latest advancements are unlocking new possibilities for developers and data teams.

Champagne Week embodies the creativity, dedication, and collaboration of the Meroxa team, showcasing innovative projects like enhanced documentation, real-time IoT processing, AI-powered summarization, and a collaborative demo that highlights our growth. Thank you to everyone who contributed to making this week a success—and to our users, who inspire us to keep raising the bar.

In today’s fast-paced AI landscape, relying on outdated, static datasets can lead to inaccurate models, costly retraining cycles, and delayed time-to-market for AI features. Real-time data pipelines offer a game-changing solution, enabling AI systems to stay accurate, scalable, and cost-effective by continuously learning from current conditions. With benefits like a 40% reduction in model hallucinations, faster deployment, and lower infrastructure costs, real-time data is essential for building reliable applications such as fraud detection, recommendation engines, and Customer 360 profiles. Discover how Meroxa’s platform empowers organizations to implement real-time data pipelines and unlock the full potential of their AI initiatives.

The Hotels Network (THN) partnered with Meroxa to streamline data flow between their sales and support teams, overcoming siloed data and complex architecture challenges. Using Meroxa’s Conduit Platform, THN achieved a unified, real-time pipeline from Salesforce to Redpanda, reducing operational costs by 30% and enhancing customer support capabilities. The solution’s scalability ensures THN can continue to grow and optimize operations seamlessly.

We’re excited to introduce the latest update to the **Conduit Operator**, now with built-in **schema registry support**. This new feature allows seamless data encoding and decoding, improving data compatibility across your pipelines. Whether you're managing multiple Conduit instances or scaling your data operations, schema registry integration ensures a smoother, more reliable experience for handling complex data flows.

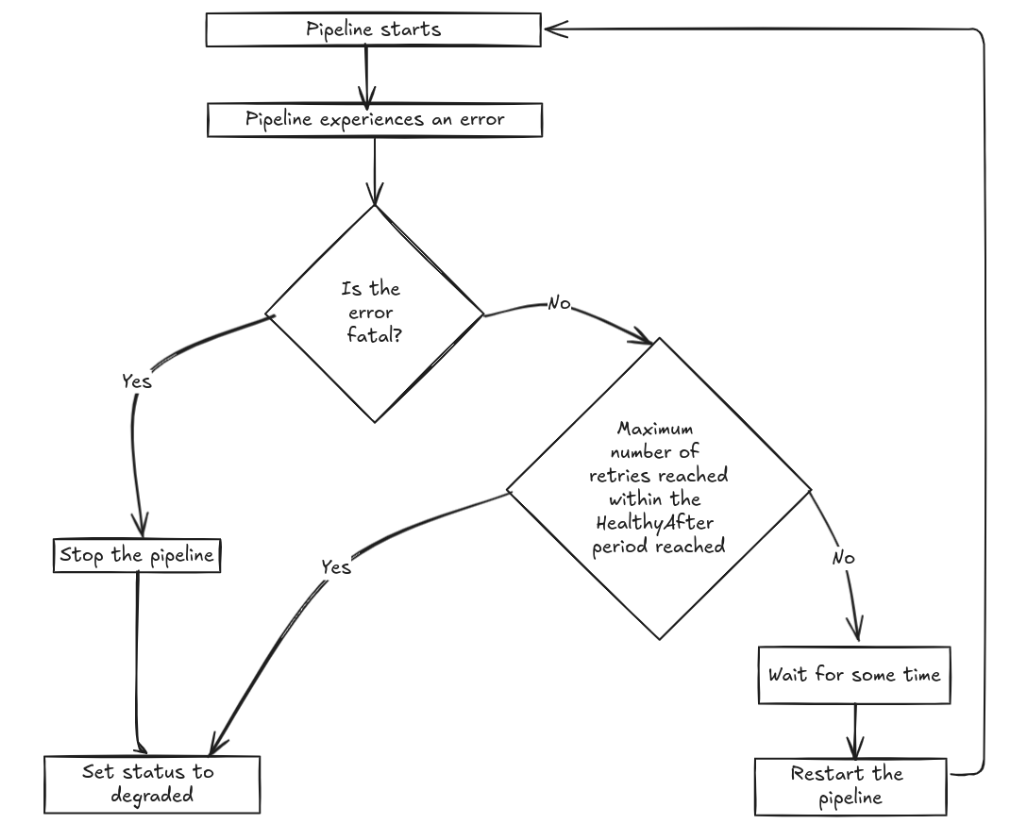

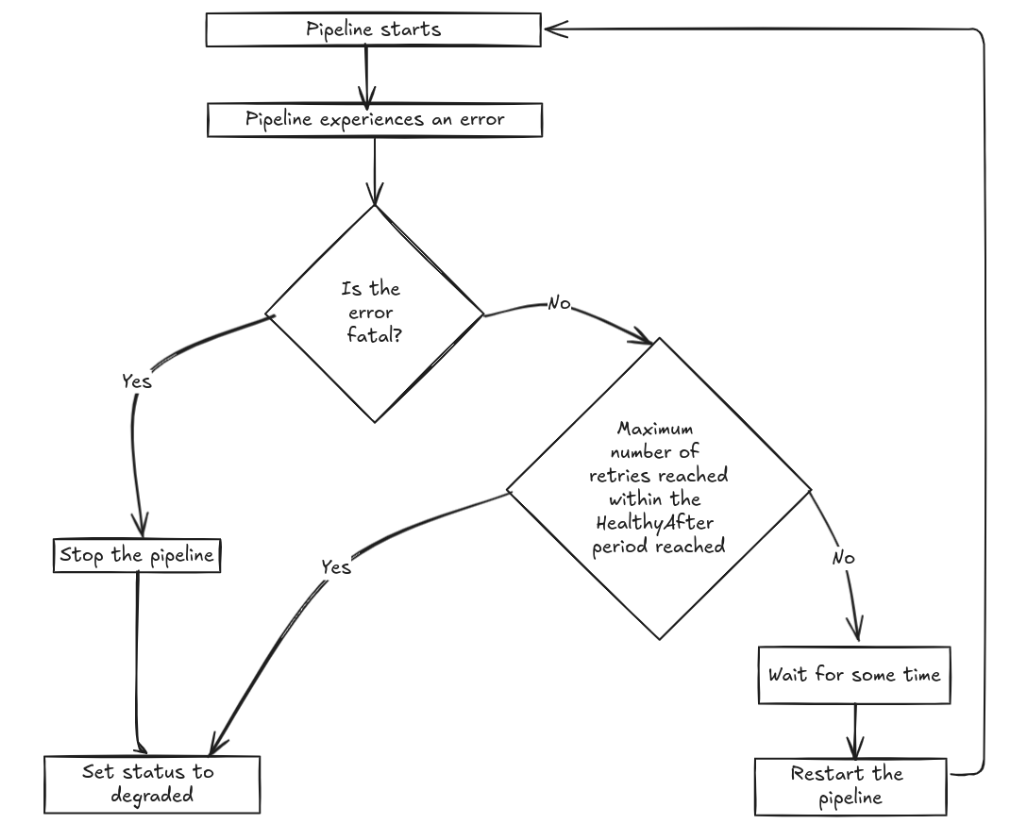

The Conduit team has just released Conduit v0.12, and we're gearing up for the launch of Conduit v1 with a focus on making pipelines more resilient. One key feature of this release is pipeline recovery, designed to automatically restart pipelines that experience temporary errors like network interruptions or service downtime.

With configurable backoff settings, Conduit can efficiently handle retries, reducing the impact of transient issues. Learn more about this feature and how it ensures your pipelines are always up and running.

We made it: Conduit v0.11 is here! In this latest release, we’ve focused on adding schema support, enabling you to detect schema changes and retain type information end to end.

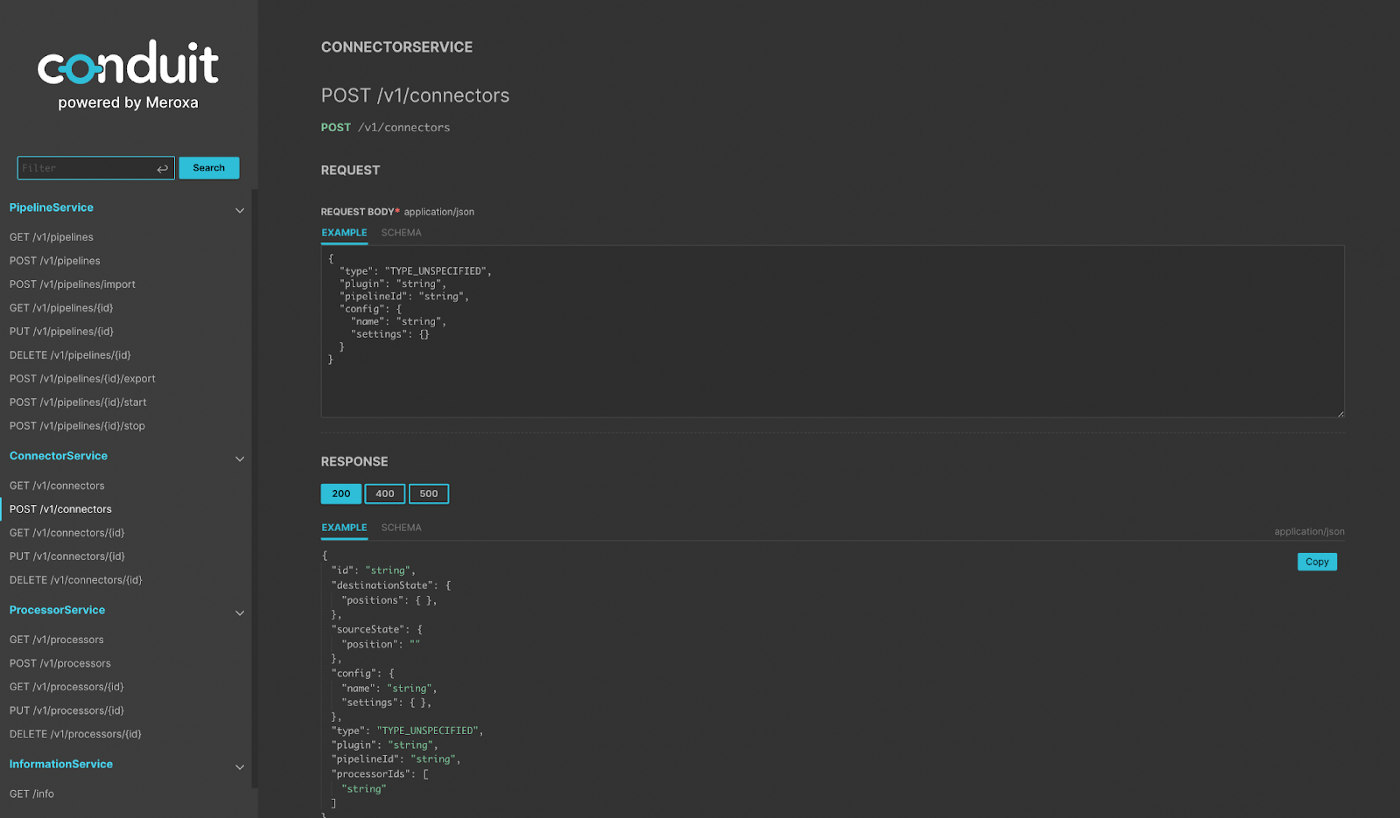

We are thrilled to introduce our latest offering, the Conduit Platform, which brings a host of new features and improvements designed to enhance your real-time data streaming experience, now powered by our robust Conduit open-source core. This transformation brings enhanced performance, scalability, and usability, coupled with access to over 100 connectors maintained by our dedicated open-source community. Here’s a closer look at what’s new and how it can benefit your data operations.

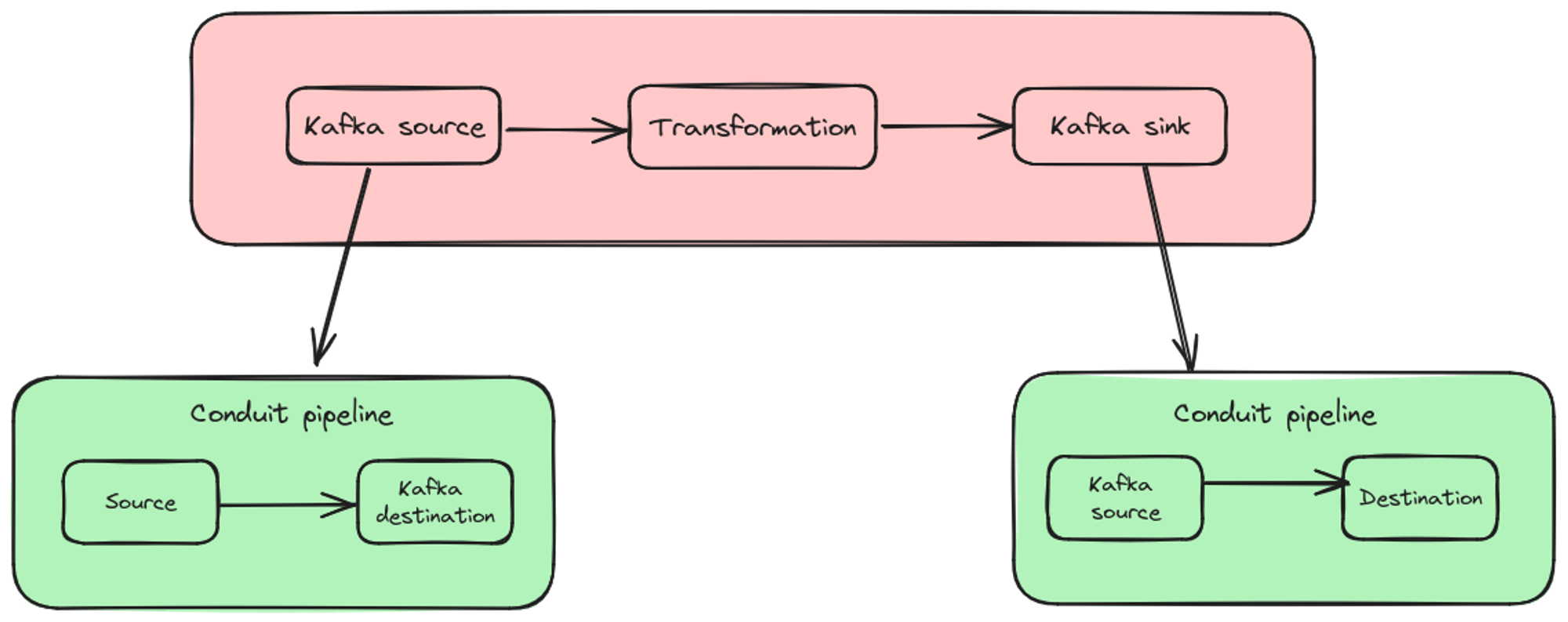

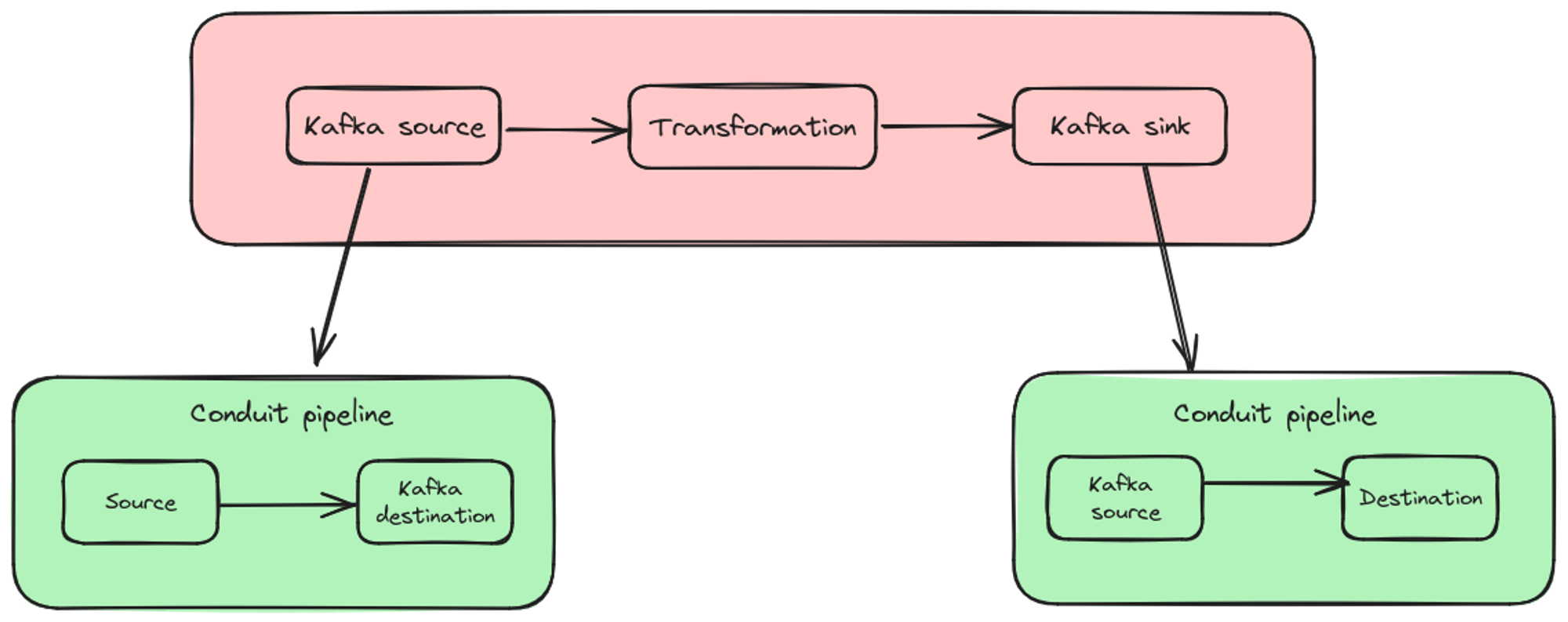

The Conduit connector for Apache Flink is a powerful combination that significantly expands what Flink can do. Apache Flink is renowned for its robust stream processing capabilities, while Conduit offers a lightweight and fast data streaming solution that simplifies the creation of connectors.

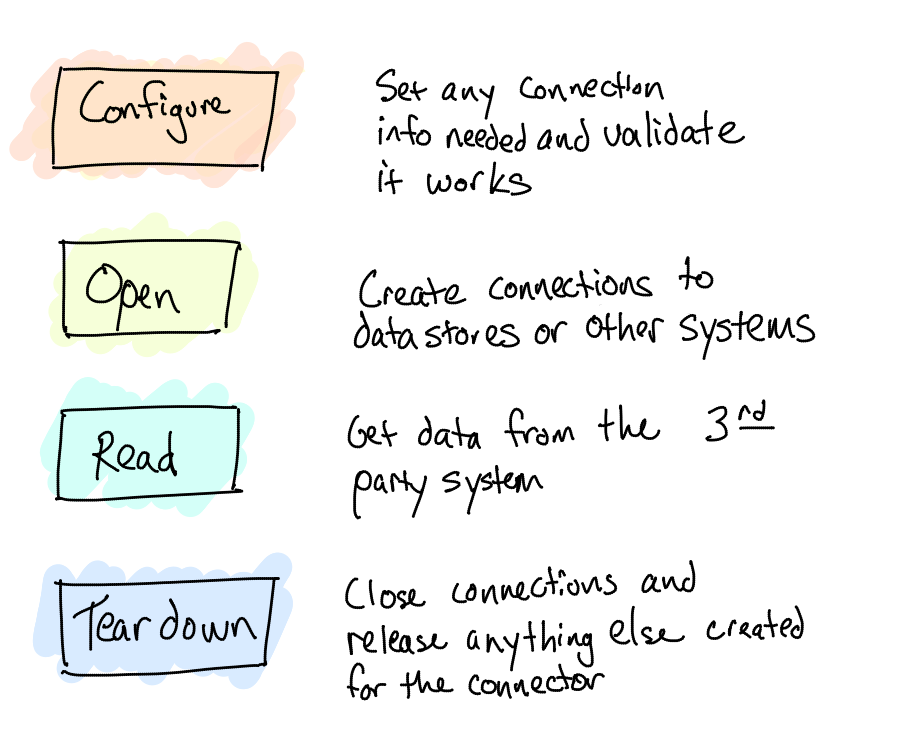

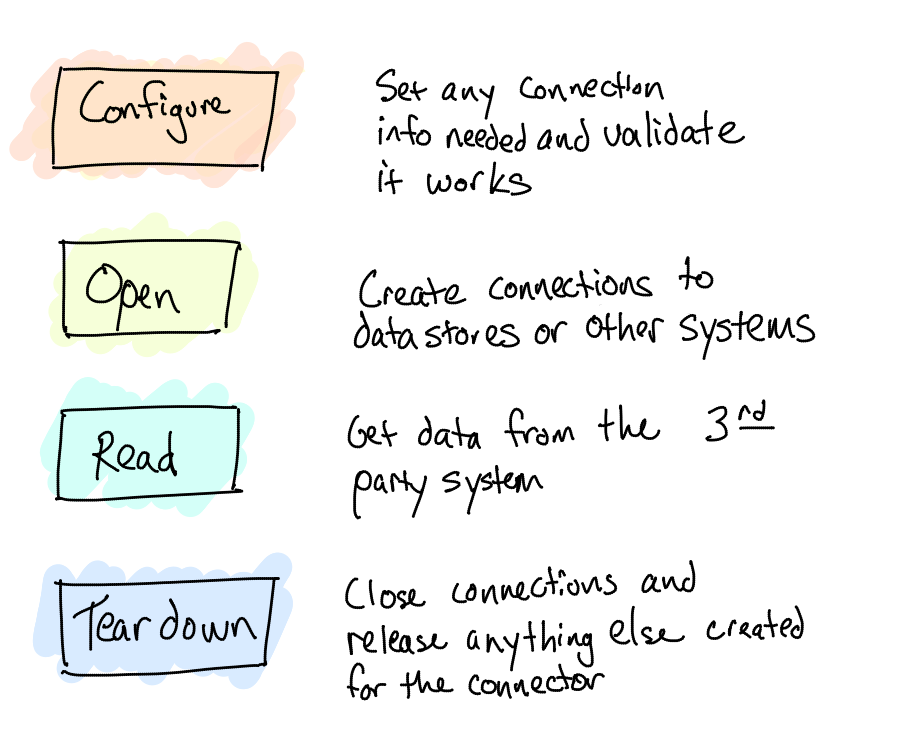

Explore the power of Conduit to create custom connectors tailored to your specific data integration needs. Learn how to use the Conduit SDK for enhanced data management and discover a world of possibilities in streamlining your data workflows. Start building your custom connector today!

Explore the new features and enhancements in Conduit version 0.10, designed to streamline your data integration processes. Discover how our latest update can help improve efficiency, security, and performance for your data operations. Upgrade today and transform how you manage data with Conduit 0.10.

Discover the excitement of Hackweek! Dive into our latest blog post to explore innovative projects and creative breakthroughs from our most recent Hackweek. Learn how teams collaborate to turn bold ideas into reality, fostering a culture of innovation. Perfect for tech enthusiasts and creative thinkers alike!

Discover the revolutionary Conduit 0.9 update, enhancing data processing with standalone processors and advanced capabilities for seamless manipulation and efficiency. Explore now.

Conduit 0.8 more than doubles single-pipeline performance.

Revamp your data pipelines with Conduit and Redpanda! Swap the complexities of Kafka and Kafka Connect for a swift, user-friendly option.

Visit conduit.io to download and learn how to use Conduit, the secure and efficient open-source data integration tool accredited by the DoD Iron Bank.

Explore batching in Conduit connectors for improved data pipeline performance. Understand how it boosts throughput and scalability.
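The throughput gain from batching comes from paying per-write overhead once per group of records rather than once per record. The sketch below shows plain size-based batching under that assumption; the generic `batch` function is hypothetical and is not part of the Conduit connector SDK.

```go
package main

import "fmt"

// batch groups records into slices of at most size elements.
// A size below 1 is treated as 1.
func batch[T any](records []T, size int) [][]T {
	if size < 1 {
		size = 1
	}
	var out [][]T
	for start := 0; start < len(records); start += size {
		end := start + size
		if end > len(records) {
			end = len(records)
		}
		out = append(out, records[start:end])
	}
	return out
}

func main() {
	records := []string{"r1", "r2", "r3", "r4", "r5"}
	for i, b := range batch(records, 2) {
		// One write call per batch instead of one per record.
		fmt.Printf("write %d: %v\n", i, b)
	}
}
```

A real connector would typically also flush on a timer so that a partially filled batch does not wait forever; that part is left out here to keep the example short.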

Conduit 0.7 gets us one step closer to being a fully functioning, feature-rich alternative to Kafka Connect.

Learn how OpenAI's GPT-4 has helped to streamline data connector building for Meroxa, reducing development time and effort.

Conduit 0.5: We added easy-to-configure Dead Letter Queues (DLQs) through HTTP and gRPC, extended health checking, and added capabilities for working with Debezium records.

Conduit is a tool to help developers build streaming data pipelines between production data stores and messaging systems.

We decided to build a performance benchmark for Conduit early, so we could determine how much it can handle and what it takes to break it.

Connector Middleware improves the developer experience. You can utilize middleware provided by the SDK to enrich the functionality of connectors without reinventing the wheel.

Conduit is a tool that helps developers move data within their infrastructure to the places it’s needed.

The opportunity to delight someone using your tool can happen at any time. The open-source Conduit project team makes the user experience a top priority.

Testing streaming systems and architectures can be difficult because you need to mock data and have an upstream system continuously push that mock data. Conduit has made it easier with a built-in generator that creates fake data for streaming systems.

We faced challenges with Protobuf, so we began looking for a resolution... enter Buf!

In this release, Conduit now has an official SDK that will allow developers to build connectors for any data store.

Since Conduit ships as a tiny single binary, it functions as a powerful tool that allows you to efficiently move data from one place to another.

Conduit is a tool to move data around and Heroku is an application platform.

The world is trending towards more rapid delivery of goods and services. We use the term “Real-time” to mean that it happens as close to “now” as possible.

Conduit is an open-source project to make real-time data integration easier for developers and operators.

The Future of the Modern Data Stack looks excellent for data engineers. But where is the modern data stack for software engineers?

We’re open-sourcing Conduit, Meroxa’s data integration tool built to be flexible & extendible, and provide developer-friendly streaming data orchestration.